Homelab V5

My Homelab Journey: From Unraid Beginnings to Version 5

2024-12-14 02:00 +0100

Building and optimizing a homelab has always been a passion of mine. Since its inception, my homelab has gone through several iterations, constantly evolving to meet my goals of achieving maximum performance while minimizing power consumption, noise, and physical space requirements. Here is a snapshot of my journey, culminating in the current Version 5 of my homelab.

The Beginning: Unraid with Custom Hardware

My homelab journey began with a custom-built Unraid server featuring an Intel i3 11th Generation processor and 64 GB of RAM. This setup acted as an all-in-one solution for storage, virtualization, and container workloads. I even conducted simple nested vSphere tests on this server during its early days. Today, the server is still in use as a storage and Docker host, although I have replaced the underlying hardware four times to keep up with evolving requirements.

My first Homelab

The rack I used from Version 1 through Version 4 of my homelab held only two switches, a Raspberry Pi 3, and an old HP EliteDesk client in V1. It had to be replaced to accommodate the changes in Version 5.

Evolution to Version 5

Over the years, I continuously refined and upgraded the homelab. With my role at Evoila GmbH, my expectations for both myself and my homelab grew significantly. It quickly became clear that I needed different hardware to meet these new demands, especially as I aimed to conduct more extensive labs with NSX.

To start, I added a simple 3-node NUC cluster using 11th Generation Intel i5 processors. Additionally, I replaced the switches in my setup with multiple multispeed switches from Zyxel and QNAP. At the time, there were limited options on the market for 2.5 Gbps switches with management capabilities, resulting in a somewhat heterogeneous configuration.

Each iteration brought new hardware, better software configurations, and more ambitious goals. Over time, more technologies found their way into my lab, including a Fortinet FortiGate 40F, BGP routing, and 10G switches. These advancements eventually culminated in Version 4 of my lab. However, as the lab grew, the rack ran out of space for further development, prompting a complete rebuild, which led to Version 5. Now, in its fifth version, the lab has grown into a powerful and efficient setup.

My Lab Philosophy

My primary goal has always been to achieve the best possible performance with minimal power consumption, noise, and space requirements. To this end, I have standardized my homelab on Intel’s 13th Generation CPUs, which strike a great balance between power efficiency and computational capability.

Lab Overview

In Lab Version 5, I have three clusters:

Management Cluster:

This cluster is powered by an Intel NUC i3 13th Generation, which serves as the always-on management node. The ESXi server in this cluster hosts several key VMs:

- A Windows 11 VM with tools like Hugo, Go, and GitHub for managing this blog.

- LogInsight and FortiAnalyzer.

- A vCenter server.

- An mDNS Repeater for Smart Home integration.

- Homebridge for managing smart devices.

- NetBox for network documentation.

- A Veeam server for backups.

Additionally, two VMs on my Unraid server contribute to the management cluster:

- A Root CA.

- A Domain Controller, which primarily supports the labs.

The Unraid server also runs several containers, including:

- DNS servers.

- An Excalidraw instance.

- Various other tools.

For redundancy, I run a backup DNS server on a Raspberry Pi 3 to ensure DNS functionality during storage maintenance. I use AdGuard Home as my primary DNS server. It blocks ads and forwards queries for my lab.home domain to the Active Directory server that manages it.
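The forwarding rule described above can be sketched as a small lookup: names in a local zone go to the zone's DNS server, everything else goes to a general upstream. This is only an illustration of the idea, not the AdGuard Home configuration itself. The IP addresses and the names `AD_DNS`, `PUBLIC_UPSTREAM`, and `pick_upstream` are hypothetical.

```python
# Hypothetical addresses from the documentation range (RFC 5737),
# not the real addresses used in this lab.
AD_DNS = "192.0.2.10"           # assumed: Active Directory DNS for lab.home
PUBLIC_UPSTREAM = "192.0.2.53"  # assumed: stand-in for a public resolver

# Map each local zone to the server that is authoritative for it.
LOCAL_ZONES = {"lab.home": AD_DNS}


def pick_upstream(qname: str) -> str:
    """Return the upstream resolver for a query name.

    Names equal to or below a local zone go to that zone's server;
    everything else goes to the public upstream.
    """
    name = qname.rstrip(".").lower()
    for zone, server in LOCAL_ZONES.items():
        # Match on a label boundary so "notlab.home" is not treated as local.
        if name == zone or name.endswith("." + zone):
            return server
    return PUBLIC_UPSTREAM


if __name__ == "__main__":
    for query in ("vcenter.lab.home", "example.com"):
        print(query, "->", pick_upstream(query))
```

Matching on a label boundary rather than a plain suffix keeps unrelated names that merely end in the same characters out of the lab zone.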

Compute Cluster:

My compute cluster consists of three 13th Generation Intel NUCs, each equipped with 64 GB of RAM and a 2 TB NVMe drive. Due to the P/E core architecture, these NUCs run without Hyperthreading but offer 12 cores (4P + 8E). Based on my experience, the performance with E cores enabled is better than using 4 P cores with Hyperthreading. Each NUC features dual 2.5G network interfaces and is connected to my iSCSI storage. This cluster runs standard nested labs, such as my NSX lab and AVI load balancer labs. The performance is sufficient for many labs, making it a reliable and frequently used part of my setup.

The Compute Cluster in Lab Version 5 has the following total resources:

- 192 GB RAM across 3 NUCs

- 6 TB NVMe storage (2 TB per NUC)

- 8 TB shared storage

- 36 CPU cores (12 cores per NUC)

Performance Cluster:

My performance cluster consists of four Minisforum MS-01 units, each featuring an Intel i9 processor with 14 cores (6P + 8E), 64 GB of physical RAM, and a 400% memory tiering configuration. Each MS-01 includes 2 TB of local NVMe storage and an additional 1 TB PCIe 4.0 NVMe drive for memory tiering. With onboard dual 10GbE networking, the MS-01 units are ideal for demanding labs, such as a complete VCF deployment including an HCX proof of concept where I live-migrated VMs between my NSX lab and the VCF lab. The MS-01 units are also used for vSAN labs. Additionally, Intel vPro support allows for efficient remote management. This cluster provides:

- 56 CPU cores (14 cores per MS-01).

- 1280 GB of RAM with memory tiering (64 GB physical per unit, 320 GB per unit including the NVMe tier).

- 8 TB of local NVMe storage (2 TB per unit).

- 4 TB of NVMe storage for memory tiering (1 TB per unit).

- 8 TB of shared storage.
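The totals for both clusters can be reproduced with a few lines of arithmetic. The sketch below assumes that a 400% memory tiering setting means the NVMe tier is sized at four times the physical DRAM, which is the reading that matches the 1280 GB figure above. The `Cluster` class and its field names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    nodes: int
    cores_per_node: int
    dram_gb_per_node: int
    nvme_tb_per_node: int
    # Assumed meaning: NVMe tier size as a percentage of physical DRAM.
    tiering_pct: int = 0

    def total_cores(self) -> int:
        return self.nodes * self.cores_per_node

    def memory_gb_per_node(self) -> int:
        # Physical DRAM plus the NVMe-backed tier sized relative to DRAM.
        return self.dram_gb_per_node + self.dram_gb_per_node * self.tiering_pct // 100

    def total_memory_gb(self) -> int:
        return self.nodes * self.memory_gb_per_node()

    def total_nvme_tb(self) -> int:
        return self.nodes * self.nvme_tb_per_node


compute = Cluster("compute", nodes=3, cores_per_node=12,
                  dram_gb_per_node=64, nvme_tb_per_node=2)
performance = Cluster("performance", nodes=4, cores_per_node=14,
                      dram_gb_per_node=64, nvme_tb_per_node=2, tiering_pct=400)

if __name__ == "__main__":
    for c in (compute, performance):
        print(c.name, c.total_cores(), "cores,",
              c.total_memory_gb(), "GB RAM,",
              c.total_nvme_tb(), "TB NVMe")
```

With these inputs the compute cluster comes out at 36 cores, 192 GB RAM, and 6 TB NVMe, and the performance cluster at 56 cores, 1280 GB RAM (320 GB per MS-01), and 8 TB NVMe.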

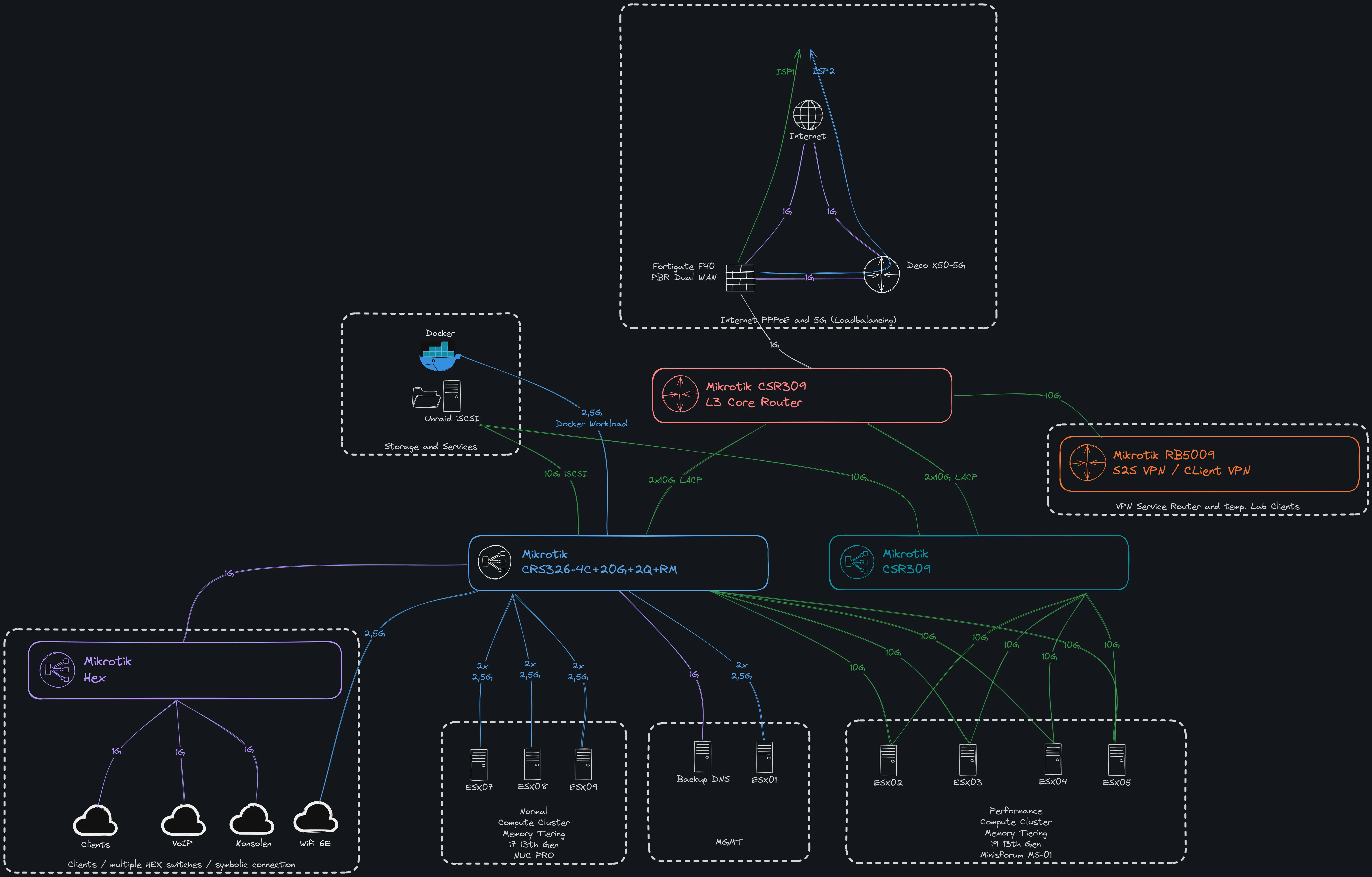

Network

My network consists of multiple MikroTik switches. The centerpiece is my ToR (Top of Rack) switch, the MikroTik CRS309, which can route at line speed thanks to hardware offloading. This switch hosts all lab-relevant gateways and networks, so lab traffic doesn’t need to be routed through my Fortinet FortiGate 40F. The servers are connected to two access switches: the NUCs via dual 2.5 Gbps connections, and the MS-01 units via one 10 Gbps connection per switch. I also have a service router (RB5009) that establishes a VPN tunnel to a fellow homelabber. Through this connection, I can use his Kubernetes resources, and we’ve even tested the NSX Application Platform (NAPP) together. Visit marschall.systems.

Network setup

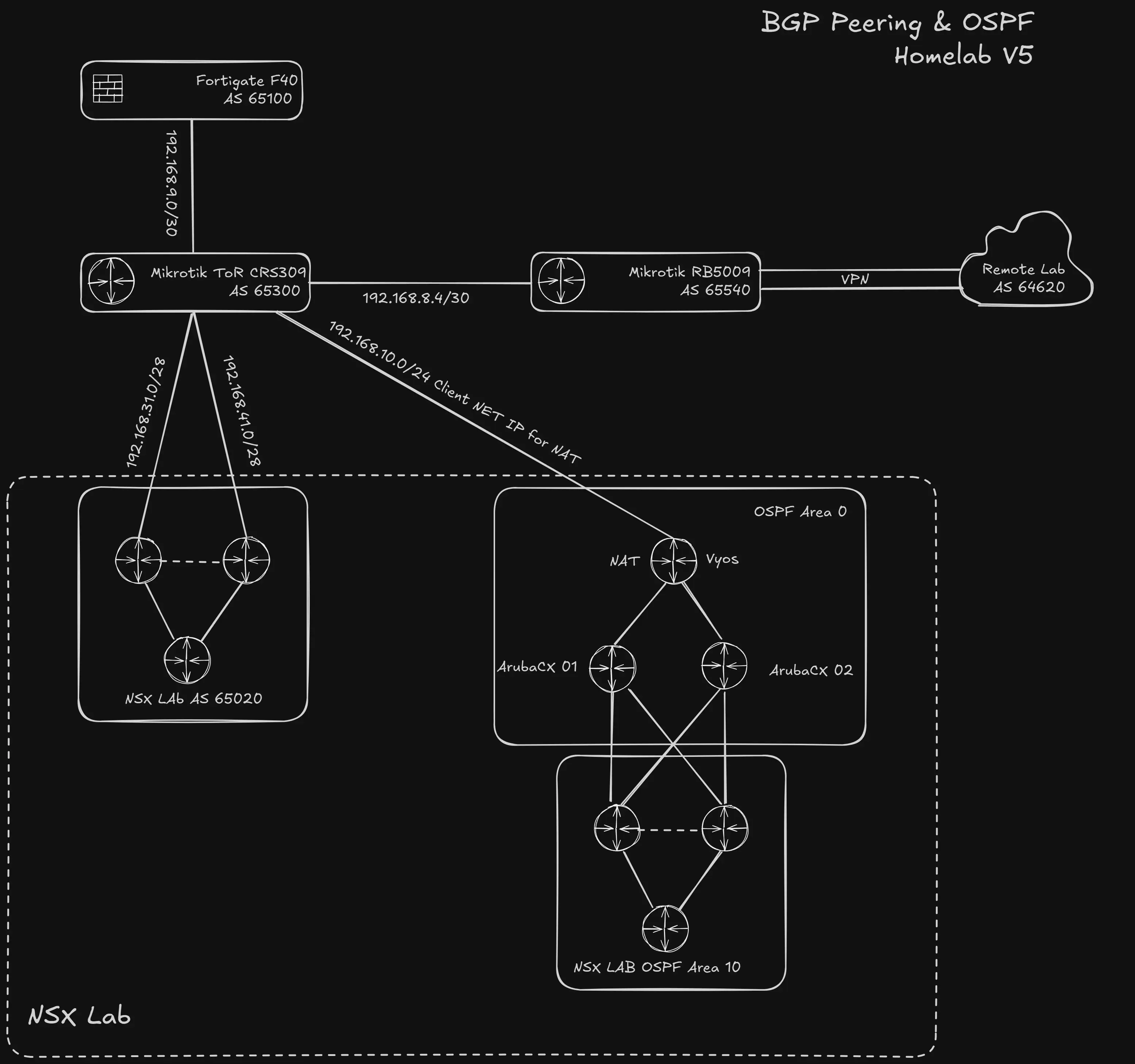

My network employs dynamic routing, with eBGP as the primary protocol peering all critical components. My BGP NSX lab peers directly with my ToR switch, which keeps lab traffic off the firewall and integrates the lab cleanly with the rest of the network. For my labs, I use both OSPF and BGP. My OSPF lab runs on two virtual Aruba CX switches and a VyOS router, which has an IP in my standard client network and provides internet access to the OSPF lab via NAT.

BGP setup
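To make the eBGP design a little more concrete, here is a minimal sketch that models a peering table and checks that every session is external, meaning the two ends sit in different autonomous systems. The device names and AS numbers are hypothetical, since the post does not list the real ones. The only fixed fact used is the 16-bit private ASN range 64512 to 65534 from RFC 6996.

```python
from dataclasses import dataclass

# 16-bit private ASN range reserved by RFC 6996.
PRIVATE_ASN_MIN, PRIVATE_ASN_MAX = 64512, 65534


@dataclass(frozen=True)
class Peering:
    local_name: str
    local_asn: int
    peer_name: str
    peer_asn: int


def is_private_asn(asn: int) -> bool:
    return PRIVATE_ASN_MIN <= asn <= PRIVATE_ASN_MAX


def session_type(p: Peering) -> str:
    """eBGP if the two ends are in different autonomous systems, else iBGP."""
    return "eBGP" if p.local_asn != p.peer_asn else "iBGP"


# Hypothetical names and ASNs, chosen only for illustration.
peerings = [
    Peering("tor-crs309", 65000, "fortigate", 65001),
    Peering("tor-crs309", 65000, "nsx-t0", 65010),
]

if __name__ == "__main__":
    for p in peerings:
        # A homelab should stay inside the private ASN range.
        assert is_private_asn(p.local_asn) and is_private_asn(p.peer_asn)
        print(f"{p.local_name} (AS{p.local_asn}) <-> "
              f"{p.peer_name} (AS{p.peer_asn}): {session_type(p)}")
```

Giving each routing domain its own private ASN is one common way to keep every session external, but it is a design choice rather than something the post confirms.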

Storage

As my primary storage solution, I use my Unraid server. After implementing several iSCSI optimizations (my iSCSI Blog post) and installing the iSCSI Target plugin (my Unraid Blog post), the server provides performant iSCSI storage that reaches around 2000 MB/s for both read and write operations.

Firewall

As my firewall, I use a Fortinet FortiGate 40F, which I’ve had for two years. I am fortunate to have access to an NFR/LAB license through my employer at an affordable price. The FortiGate 40F handles both firewalling and IDS/IPS functionality. Additionally, I operate it in a dual-stack configuration and use its SD-WAN feature to load balance two WAN connections: 5G and DSL.

Lessons Learned and Future Goals

- Performance vs. Efficiency: Achieving the right balance between performance and efficiency requires meticulous planning and experimentation. Each hardware choice and configuration tweak contributes to the overall success of the setup.

- Automation: In the future, I plan to incorporate more automation into my homelab. To achieve this, I have started experimenting with Terraform to streamline deployments and configurations.

- Scaling Smartly: As my lab has grown, managing power, cooling, and network configurations has become increasingly important.

- Continuous Improvement: My homelab is a perpetual work in progress. With each iteration, I discover new ways to optimize and expand its capabilities.

Current Setup: Bill of Materials (BOM)

| Quantity | Component |
|---|---|
| Server | |
| 4 | Minisforum MS-01 i9 13th Gen |
| 3 | Asus NUC Pro i7 13th Gen |
| 1 | Asus NUC Pro i3 13th Gen |
| Network | |
| 2 | MikroTik CRS309-1G-8S+IN |
| 1 | MikroTik L009UiGS-RM |
| 1 | MikroTik CRS326-4C+20G+2Q |
| 1 | MikroTik RB5009UG+S+IN |
| Storage | |
| 1 | Intel NUC Extreme i7 11th Gen |
| UPS | |
| 1 | APC Back-UPS Pro 1300VA BR1300MI |
| Firewall | |
| 1 | Fortinet FortiGate 40F |
| Other | |
| 1 | 21U Rack |
| 1 | DAC Cable / Ethernet Cable |
| 2 | Cable Management |
| 2 | Rack Mount MS-01 |
| 1 | Rack Mount NUC |
| 2 | Air Vent |
| 4 | Rack PSU |

You can also find the detailed BOM with prices here, which I update regularly to reflect any changes in my setup.

Final result

Images: Rackmount MS-01 (two views), rack view from the top, rack view.