Homelab V6 - It’s not just Taylor Swift who has different eras, my home lab does too

A quick update on what's been happening in my lab over the last few weeks.

Tags: vcf, nsx, homelab, minisforum, intel, amd


2025-06-20 17:00 +0200

Introduction

I’ve had an exciting few weeks. I was part of the VCF9 beta and ran several VCF instances in parallel, so I kept running into performance bottlenecks. On top of that, the Minisforum MS-A2 finally became available. But first things first.

2.5 Gb/s LAN - and what I have learned

Well, where should I start? I bought the MikroTik CRS326-4C+20G+2Q for my NUCs because it offered 20x 2.5 Gb/s ports, and at around 800 euros it wasn’t exactly cheap. In hindsight, it wasn’t a good investment. Not that the switch is bad, it isn’t, but today I use exactly two of its 2.5G ports. Instead, I use all of its 10G and 40G ports (the 40G ones with breakout cables), because 10G ports are now in short supply: after the recent upgrade, I have exactly one 10G port left in the entire lab. That’s why I’m actually considering selling the switch again. But hey, that’s part of homelabbing. Sometimes you just make bad investments.
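For anyone wondering how a switch with 20 multi-gig ports can run short of 10G, here is a quick count. It assumes the remaining ports are four 10G combo ports and two 40G QSFP+ ports, each of which splits into 4x 10G with a breakout cable (check the MikroTik datasheet for your exact model). It's a back-of-the-envelope sketch, not a switch configuration.

```python
# Back-of-the-envelope port count for the CRS326 (assumed port layout).
COMBO_10G_PORTS = 4   # assumed: 10G combo ports (RJ45 or SFP+)
QSFP_40G_PORTS = 2    # assumed: 40G QSFP+ ports
BREAKOUT_LANES = 4    # one QSFP+ port split into 4x 10G via a breakout cable
MULTIGIG_PORTS = 20   # 2.5G ports, of which only 2 are in use


def usable_10g_ports(combo: int, qsfp: int, lanes: int) -> int:
    """Number of 10G links when every QSFP+ port runs in breakout mode."""
    return combo + qsfp * lanes


total_10g = usable_10g_ports(COMBO_10G_PORTS, QSFP_40G_PORTS, BREAKOUT_LANES)
print(f"10G links with breakout: {total_10g}")
print(f"2.5G ports in use: 2 of {MULTIGIG_PORTS}")
```

Under these assumptions the switch tops out at twelve 10G links, which a handful of dual-10G servers use up quickly.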

Another thing that bothers me about 2.5G is that I constantly have problems with autospeed (auto-negotiation). Sooner or later, a port drops out whenever the switch or the server is set to autospeed. This isn’t just a problem with MikroTik switches; I’ve had the same issue with other manufacturers, and it also affects my TP-Link access points. As a workaround, I set everything to a fixed speed, but then Wake-on-LAN stops working because the NUCs can’t handle 2.5 Gb/s in power-saving mode. There’s always something. That’s why I decided three weeks ago to sell my beloved NUC cluster and say goodbye to the 2.5 Gb/s LAN experiment. That, and I spent a little too much money at Minisforum. But more on that later.

Post NUC era

It’s not just Taylor Swift who has different eras; my home lab does too. Does that mean I’ve now said goodbye to NUCs altogether? A resounding yes and no. I won’t be building any more NUC-based workloads: although the ratio between CPU performance and RAM is good again with the 14th generation, the network is simply too slow, especially for VCF9, vSAN ESA, and the other things I want to test.

However, the NUC 14 with an Ultra 7 155H CPU (16 cores) and support for up to 128 GB of RAM is the perfect management NUC. Since I got it used for around €310, it will be my last remaining NUC running ESX. It is not the H (tall) version, so no second network card can be installed, but for management the single 2.5 Gb/s adapter is sufficient.

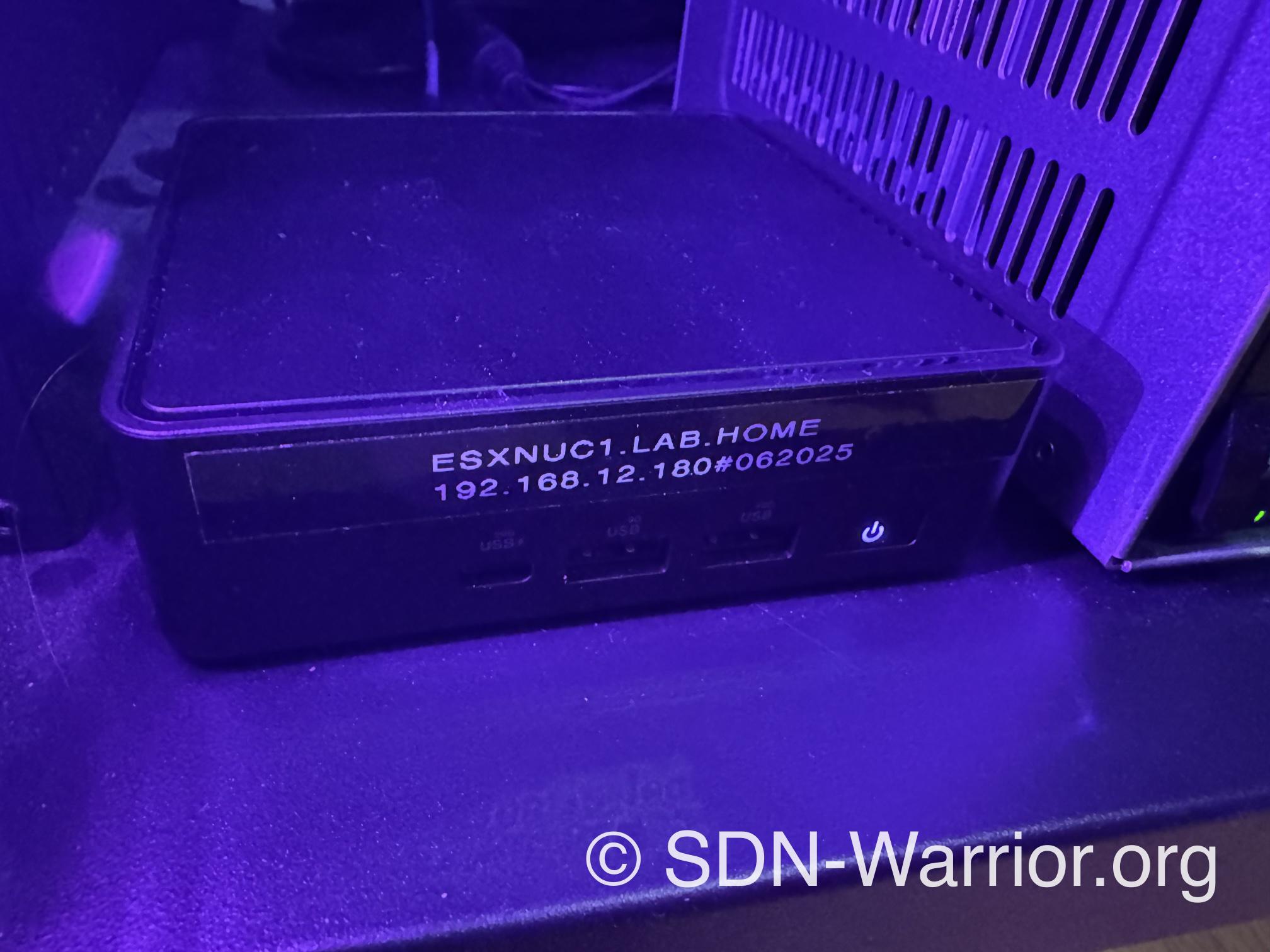

Dusty NUC 14

Yes, I know that the NUC is dusty, but that’s hard to avoid with an open rack.

What’s new?

Now for the obvious: I bought two MS-A2s from Minisforum. Together they form my new AMD cluster with 32 cores, 64 threads, and 2x 128 GB of RAM. In addition, the networking has been converted to 2x 10 Gb/s per ESXi server across the board.

MS-A2 Powerhouse

Since the chassis dimensions are identical to the MS-01’s, I was able to reuse the thingsINrack custom rackmount solution. Unfortunately, it has become significantly more expensive since last time: you now pay around 90 euros for the 3D-printed bracket. The front is molded plastic and therefore has a nice finish. Of all the options on the market, it is still the cheapest and most flexible. My MS-01s have been in rackmounts like this from the beginning, and so far I haven’t had to change anything or run into any problems (I am not being paid for this, I am just very happy with it).

In addition, two more MS-01s have joined the lab. I posted this on LinkedIn weeks ago but never got around to documenting it here on the blog. The four existing MS-01s have been upgraded to 96 GB of RAM. Now you might ask why I didn’t go straight to 128 GB. Well, what can I say? Stupid decisions or bad timing: shortly after I received the 96 GB kits for all my MS-01s, 128 GB support was announced. Since everything was already installed, I didn’t want to send the RAM back.

That’s life as an early adopter sometimes.

The lab is finished now! … The lab is finished now?

Please insert the Star Wars Anakin and Padmé meme here. I would put it in the blog, but I’m afraid Disney would sue me :D At least one update is still pending: my management NUC urgently needs 128 GB of RAM, and I think it will get that next month. Beyond that, I’m still considering memory tiering on the AMD servers, since they have plenty of CPU power. However, I would have to buy more NVMe drives for this, as only 2 TB is currently installed per MS-A2 and I don’t have any fast NVMe drives left in stock.

Another idea is to run the MS-01s on Proxmox. Has anyone here ever said “Jehovah”? Don’t panic, I’m staying loyal to VMware. The idea is to use Proxmox and deploy a single thick nested ESX VM on it. Proxmox can use hyperthreading on CPUs with E/P cores, which unfortunately neither ESXi 8 nor ESXi 9 can do at the moment. Of course, ESXi is designed to run on enterprise server hardware, where there are currently no asymmetric cores. In any case, each MS-01 would then have 20 threads, and I could deploy nested servers with 16 vCores. But for now this is nothing more than a thought experiment. I’ve technically done this before, but without measuring performance, and I could well imagine that a nested ESXi runs better on a physical ESXi than on a Proxmox server. Maybe a future experiment.
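To make the thread arithmetic concrete, here is a simplified model. It assumes the MS-01 CPU has 6 P-cores and 8 E-cores (the combination that yields the 20 threads mentioned above) and that only P-cores offer hyperthreading. `HybridCpu` and `fits` are hypothetical helpers for illustration, not part of any Proxmox or VMware tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridCpu:
    """Simplified model of an Intel hybrid CPU (hypothetical helper)."""
    p_cores: int  # performance cores: 2 threads each when hyperthreading is on
    e_cores: int  # efficiency cores: always 1 thread each

    def logical_cpus(self, hyperthreading: bool) -> int:
        p_threads = self.p_cores * 2 if hyperthreading else self.p_cores
        return p_threads + self.e_cores


def fits(cpu: HybridCpu, vcpus: int, hyperthreading: bool) -> bool:
    """A VM's vCPU count cannot exceed the host's logical CPU count."""
    return vcpus <= cpu.logical_cpus(hyperthreading)


ms01 = HybridCpu(p_cores=6, e_cores=8)  # assumed 6P + 8E

print(ms01.logical_cpus(hyperthreading=False))  # 14, as under ESXi
print(ms01.logical_cpus(hyperthreading=True))   # 20, as under Proxmox
print(fits(ms01, 16, hyperthreading=False))     # False
print(fits(ms01, 16, hyperthreading=True))      # True
```

In this model, a 16-vCore nested ESXi only fits on an MS-01 once hyperthreading is available, which is the whole point of the Proxmox idea.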

Pictures or it didn’t happen

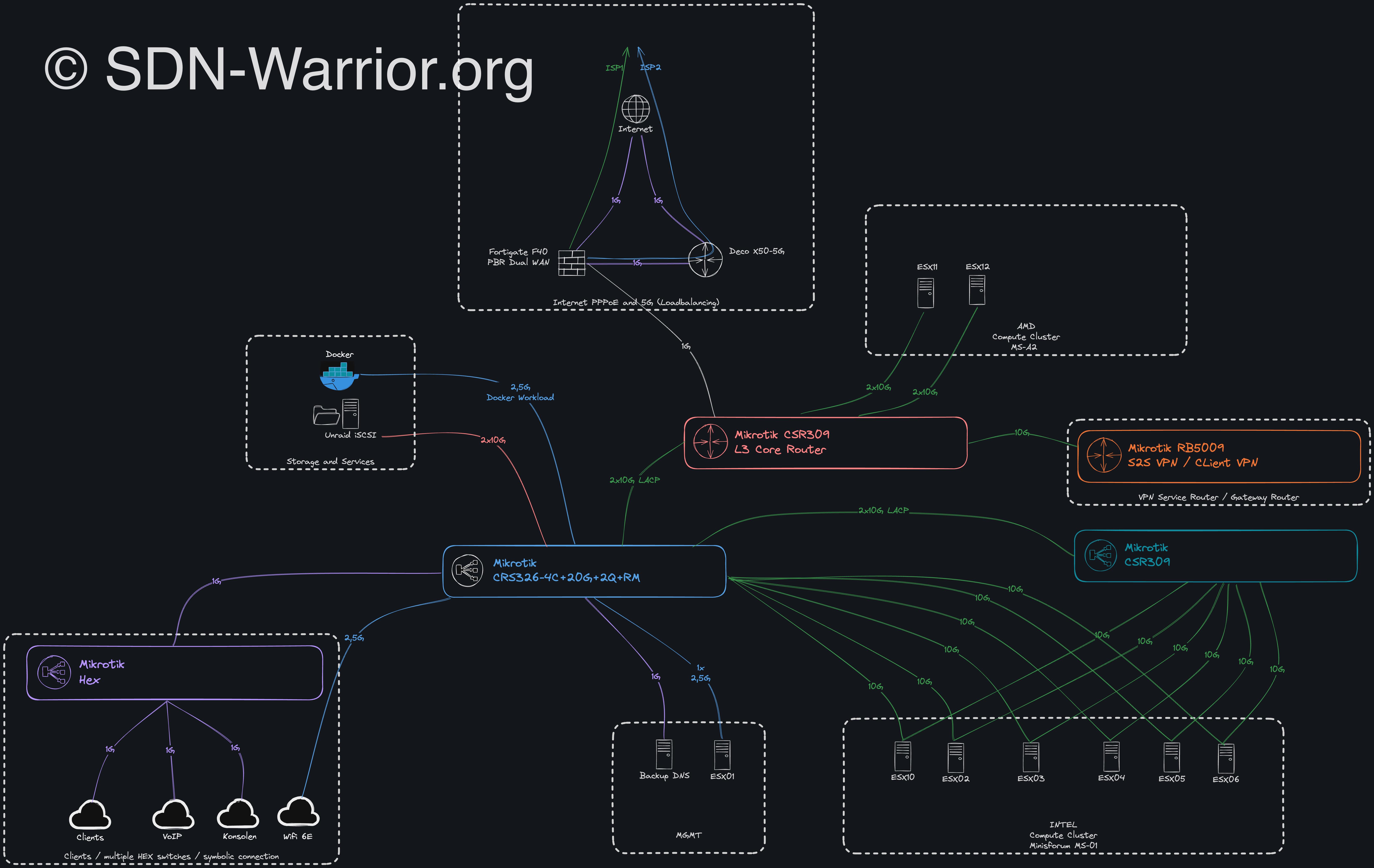

Network setup

Here is my network diagram. Since I’m often asked which tool I use to draw these beautiful diagrams: it’s Excalidraw, which runs as a Docker container on my Unraid server.

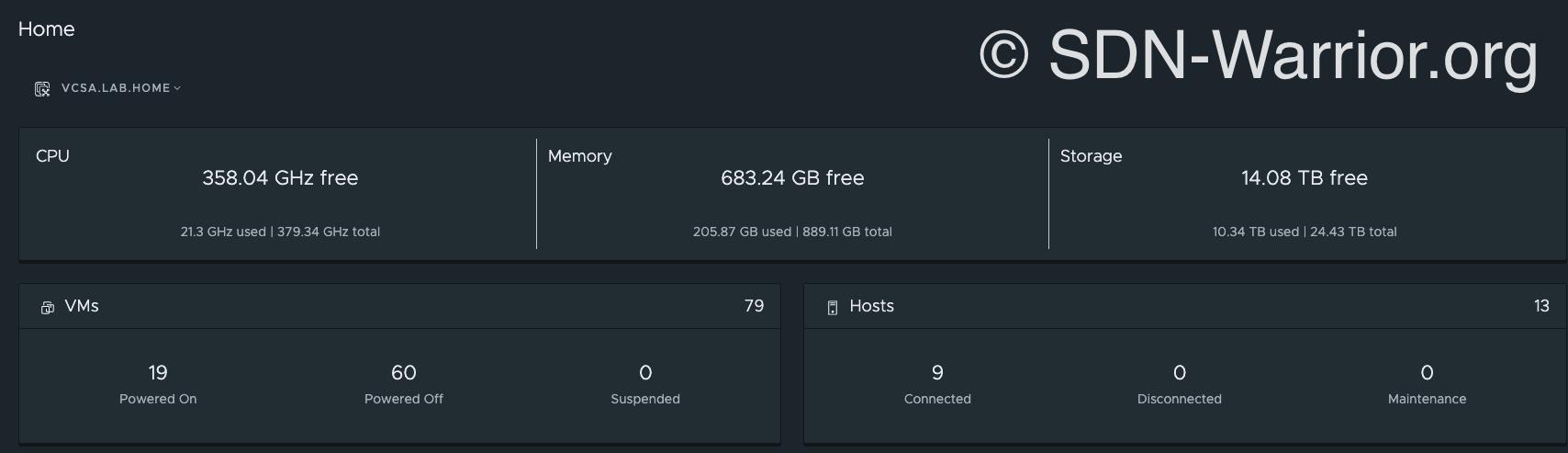

Lab capacity

Here you can see the lab capacity, but treat the GHz figure with caution: it is not accurate because of the E/P cores. vSphere calculates the capacity from the maximum non-boosted frequency of the first core and multiplies it by the number of cores in the server. This is why the figure is never accurate for consumer CPUs with mixed core types, at least for the newer Intel generations.
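To see how far off the displayed number can be, here is a small sketch of the calculation described above next to an estimate that counts each core type separately. The base clocks (2,600 MHz for P-cores, 1,900 MHz for E-cores) and the 6P + 8E split are placeholder assumptions, not values read from my hosts, and the sketch assumes the first core vSphere looks at is a P-core.

```python
def vsphere_displayed_mhz(first_core_base_mhz: int, total_cores: int) -> int:
    """Capacity as described above: first core's base clock times core count."""
    return first_core_base_mhz * total_cores


def per_core_type_mhz(p_cores: int, p_base_mhz: int,
                      e_cores: int, e_base_mhz: int) -> int:
    """Estimate that counts each core type at its own base clock."""
    return p_cores * p_base_mhz + e_cores * e_base_mhz


# Placeholder values for a 6P + 8E host, not measured on real hardware.
P_CORES, E_CORES = 6, 8
P_BASE_MHZ, E_BASE_MHZ = 2600, 1900

displayed = vsphere_displayed_mhz(P_BASE_MHZ, P_CORES + E_CORES)
estimate = per_core_type_mhz(P_CORES, P_BASE_MHZ, E_CORES, E_BASE_MHZ)
print(displayed, estimate)  # 36400 30800
```

With these placeholders, the displayed figure overstates the per-type estimate by 5,600 MHz per host, because every E-core is counted at the P-core clock.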

In total, my lab now has 132 physical cores, counting both E and P cores. However, this total doesn’t matter when creating a VM. If an appliance has a minimum vCPU count, as VCF Automation does with 24 vCPUs, then at the end of the day those 24 vCPUs must fit on a single ESX server, regardless of whether they end up on E or P cores, because a VM cannot have more vCPUs than its host has logical CPUs. That is also why I bought the two AMD boxes: I want to test VCF9 with Automation. I currently have 896 GB of RAM. I could upgrade the MS-01s to 128 GB, but that would cost another €1,800, which is too expensive for me at the moment. Cost-benefit analysis and all that.
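As a sanity check on these numbers, here is a sketch that adds up the hosts and asks which of them could back a 24-vCPU VM. The host list is an assumption reconstructed from the figures in this post, not an official inventory: six MS-01s (14 cores, 96 GB each, no hyperthreading under ESXi), two MS-A2s (16 cores, 32 threads, 128 GB each) and the NUC 14 with 16 cores and 64 GB. That combination reproduces both the 132 cores and the 896 GB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    count: int         # number of identical boxes
    cores: int         # physical cores per box (P + E on Intel)
    esxi_threads: int  # logical CPUs per box under ESXi (assumed)
    ram_gb: int        # RAM per box


# Assumed inventory, reconstructed so that the totals match the text.
LAB = [
    Host("MS-01", 6, 14, 14, 96),     # hybrid Intel: no hyperthreading in ESXi
    Host("MS-A2", 2, 16, 32, 128),    # AMD: SMT usable
    Host("NUC 14", 1, 16, 16, 64),    # hybrid Intel: no hyperthreading in ESXi
]

total_cores = sum(h.count * h.cores for h in LAB)
total_ram_gb = sum(h.count * h.ram_gb for h in LAB)


def hosts_that_fit(vcpus: int) -> list[str]:
    """Host models whose logical CPU count can back a VM of this size."""
    return [h.name for h in LAB if vcpus <= h.esxi_threads]


print(total_cores)         # 132
print(total_ram_gb)        # 896
print(hosts_that_fit(24))  # ['MS-A2']
```

Under these assumptions, only the MS-A2s can host a 24-vCPU VM, even though the lab as a whole has far more cores than that.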

Lab v6

Yes, there is another NUC Extreme, and it will stay. However, it is my self-built NAS and doesn’t run any VMware workloads. It is also connected with 2x 10G, so I have never really counted it as part of the NUC era.