iSCSI Tuning

Optimizing iSCSI Performance in an Unraid Environment

2024-12-08 12:21 +0100

In my setup, I use iSCSI in combination with Unraid to create a DIY block storage solution. Unraid, with its flexibility, serves as the foundation, and I utilize the Linux iSCSI implementation installed via a plugin to enable block-level storage.

The hardware is a 13th-generation Intel NUC equipped with two 2.5G network adapters, which provide the connectivity for storage traffic. I configured two VMkernel (VMK) adapters specifically for iSCSI traffic, one per network adapter, to provide redundancy and better throughput.

To further enhance performance, I’ve implemented several optimizations, including fine-tuning settings on my ESXi servers.

Optimize MaxIoSizeKB

One such optimization involves adjusting the maximum I/O size for iSCSI traffic. By default, the ESXi software iSCSI initiator limits a single I/O request to 128 KB.

While this is sufficient for many setups, it may not be optimal for environments like mine, where jumbo frames are enabled across the network. Larger packets perform better in such a configuration, reducing overhead and increasing throughput.

To take advantage of my network’s capabilities, I increased the MaxIoSizeKB parameter from 128 KB to 512 KB, the maximum value the setting accepts.

To configure this, I ran the following command on my ESXi host:

esxcli system settings advanced set -o /ISCSI/MaxIoSizeKB -i 512

This change allows the iSCSI initiator to send larger I/O requests, improving data transfer efficiency in my jumbo-frame-enabled network. With this configuration, I noticed a significant improvement in performance, as the network could handle larger blocks of data more effectively.
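As a rough illustration of why a larger limit can help, the sketch below counts how many iSCSI requests a single transfer needs under the default and the tuned limit. It assumes that a transfer larger than the limit is simply split into chunks of at most MaxIoSizeKB; the function name `io_requests` and the 1024 KB transfer size are made up for the example.

```shell
#!/bin/sh
# Count how many requests one transfer needs, assuming it is split
# into chunks of at most max_io_kb (ceiling division).
io_requests() {
    transfer_kb=$1
    max_io_kb=$2
    echo $(( (transfer_kb + max_io_kb - 1) / max_io_kb ))
}

# A hypothetical 1024 KB transfer under the default and the tuned limit.
echo "128 KB limit: $(io_requests 1024 128) requests"
echo "512 KB limit: $(io_requests 1024 512) requests"
```

Fewer requests mean fewer round trips and less per-request overhead, which is where any gain would come from. Whether that shows up in practice depends on the workload.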

The setting only takes effect after the ESXi host has been rebooted. After the reboot, you can verify that the change has been successfully applied by running the following command:

esxcli system settings advanced list -o /ISCSI/MaxIoSizeKB

The output should look like this:

[root@esxnuc1:~] esxcli system settings advanced list -o /ISCSI/MaxIoSizeKB

Path: /ISCSI/MaxIoSizeKB

Type: integer

Int Value: 512

Default Int Value: 128

Min Value: 128

Max Value: 512

String Value:

Default String Value:

Valid Characters:

Description: Maximum Software iSCSI I/O size (in KB) (REQUIRES REBOOT!)

Host Specific: false

Impact: reboot
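If you want to check the value from a script rather than by eye, the output above can be parsed. This is a minimal sketch that relies only on the "Int Value" and "Default Int Value" labels shown in the output; the helper names `advanced_int_value` and `max_io_size_is` are hypothetical.

```shell
#!/bin/sh
# Print the active "Int Value" from `esxcli system settings advanced list`
# output supplied on stdin. The anchored pattern skips "Default Int Value".
advanced_int_value() {
    awk '/^[[:space:]]*Int Value:/ { print $NF }'
}

# Succeed only if the active value on stdin equals the expected one.
max_io_size_is() {
    expected=$1
    actual=$(advanced_int_value)
    [ "$actual" = "$expected" ]
}

# On an ESXi host this could be used as:
#   esxcli system settings advanced list -o /ISCSI/MaxIoSizeKB | max_io_size_is 512 \
#       && echo "MaxIoSizeKB is 512" || echo "unexpected MaxIoSizeKB value"
```

Reading from stdin keeps the helpers independent of esxcli, so they can be tried against saved output first.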

Optimize multipathing

To optimize performance, I configured Round Robin as the multipathing policy for my iSCSI volumes on the ESXi server. This ensures better load distribution and failover capabilities. The configuration can be applied via the ESXi CLI as follows:

- List all connected storage devices to identify the target naa or eui identifier:

esxcli storage nmp device list

- Output

naa.60014058f1117188efe49cb8b5de2273

Device Display Name: LIO-ORG iSCSI Disk (naa.60014058f1117188efe49cb8b5de2273)

Storage Array Type: VMW_SATP_ALUA

Storage Array Type Device Config: {implicit_support=on; explicit_support=on; explicit_allow=on; alua_followover=on; action_OnRetryErrors=on; {TPG_id=0,TPG_state=AO}}

Path Selection Policy: VMW_PSP_MRU

Path Selection Policy Device Config: {policy=iops,iops=1000,bytes=10485760,useANO=0; lastPathIndex=0: NumIOsPending=0,numBytesPending=0}

Path Selection Policy Device Custom Config: policy=iops;iops=1000;bytes=10485760;samplingCycles=16;latencyEvalTime=180000;useANO=0;

Working Paths: vmhba64:C1:T0:L1, vmhba64:C0:T0:L1

Is USB: false

- Set the multipathing policy for the desired iSCSI device to Round Robin:

esxcli storage nmp device set --device <DeviceIdentifier> --psp VMW_PSP_RR

- Verify that the policy has been successfully applied:

esxcli storage nmp device list --device <DeviceIdentifier>

- Output after changes

naa.60014058f1117188efe49cb8b5de2273

Device Display Name: LIO-ORG iSCSI Disk (naa.60014058f1117188efe49cb8b5de2273)

Storage Array Type: VMW_SATP_ALUA

Storage Array Type Device Config: {implicit_support=on; explicit_support=on; explicit_allow=on; alua_followover=on; action_OnRetryErrors=on; {TPG_id=0,TPG_state=AO}}

Path Selection Policy: VMW_PSP_RR

Path Selection Policy Device Config: {policy=iops,iops=1000,bytes=10485760,useANO=0; lastPathIndex=0: NumIOsPending=0,numBytesPending=0}

Path Selection Policy Device Custom Config: policy=iops;iops=1000;bytes=10485760;samplingCycles=16;latencyEvalTime=180000;useANO=0;

Working Paths: vmhba64:C1:T0:L1, vmhba64:C0:T0:L1

Is USB: false
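The policy check can also be scripted. The sketch below pulls the "Path Selection Policy" line out of the device listing; it assumes the field labels appear exactly as in the output above, and `device_psp` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the Path Selection Policy found in `esxcli storage nmp device list`
# output on stdin. Matching "Path Selection Policy:" (with the colon) skips
# the "... Device Config" and "... Device Custom Config" lines.
device_psp() {
    awk '/^[[:space:]]*Path Selection Policy:/ { print $NF }'
}

# On an ESXi host this could be used as:
#   esxcli storage nmp device list --device <DeviceIdentifier> | device_psp
```

If the listing contains several devices, the helper prints one policy per line in the order the devices appear.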

To further optimize path usage and load distribution, I adjusted the IOPS parameter for the Round Robin policy. By default, ESXi switches storage paths after 1000 I/O operations, but I used the following command snippet to change this behavior to switch after every single I/O:

for i in $(esxcfg-scsidevs -c | awk '{print $1}' | grep naa.xxxx); do
    esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device="$i"
done

Here, naa.xxxx is a placeholder for the first few characters of your NAA IDs, so that the loop only touches the intended devices. Reducing the IOPS value from 1000 to 1 means the ESXi host alternates between the available paths after every I/O instead of after every 1000. In practice, this can distribute the workload more evenly across all paths, potentially improving overall responsiveness and performance.
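To make the effect of the IOPS limit concrete, here is a deliberately simplified model of Round Robin path selection. It assumes the policy strictly rotates to the next path after a fixed number of I/Os and ignores path states and pending I/O, so it is an illustration rather than a description of the actual PSP implementation. The function name `rr_path_for_io` is invented for the example.

```shell
#!/bin/sh
# Model of the path index chosen for the n-th I/O (counting from 0) when
# the policy moves to the next path after every `iops` operations and
# cycles through `paths` paths.
rr_path_for_io() {
    n=$1
    iops=$2
    paths=$3
    echo $(( (n / iops) % paths ))
}

# Show which of two paths serves I/Os 0-3 under each setting.
for n in 0 1 2 3; do
    echo "I/O $n: iops=1000 -> path $(rr_path_for_io "$n" 1000 2), iops=1 -> path $(rr_path_for_io "$n" 1 2)"
done
```

In this model, a short burst of I/O stays on a single path with the default of 1000, while a limit of 1 spreads even a handful of requests across both adapters.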

However, when combined with changes like increasing MaxIoSizeKB, the outcome can vary. In some cases, this adjustment may yield better results, while in others it could degrade performance. Therefore, it’s crucial to test these parameters individually for each storage environment to determine the most effective configuration.

Why Jumbo Frames Matter (bonus)

Jumbo frames raise the Ethernet MTU above the standard 1500 bytes (typically to 9000), reducing the total number of frames required to send the same amount of data. This results in lower CPU overhead and better performance, particularly in high-bandwidth and storage-intensive environments. However, every device in the network path (NICs, switches, and storage systems) must support jumbo frames and be configured for them; a single hop with a smaller MTU leads to fragmentation or dropped frames.
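The reduction in frame count can be estimated with a little arithmetic. The sketch below assumes 40 bytes of IPv4 and TCP headers per frame and ignores TCP options and iSCSI PDU headers, so the numbers are approximate; `frames_needed` is a hypothetical helper.

```shell
#!/bin/sh
# Frames needed to carry `bytes` of data at a given MTU, assuming 40 bytes
# of IPv4 + TCP headers per frame and no TCP options or iSCSI PDU headers.
frames_needed() {
    bytes=$1
    mtu=$2
    payload=$(( mtu - 40 ))
    echo $(( (bytes + payload - 1) / payload ))
}

io_bytes=$(( 512 * 1024 ))   # one 512 KB I/O
echo "MTU 1500: $(frames_needed "$io_bytes" 1500) frames"
echo "MTU 9000: $(frames_needed "$io_bytes" 9000) frames"
```

Under these assumptions a single 512 KB I/O needs roughly six times fewer frames at MTU 9000, which is the per-frame overhead that jumbo frames save.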

To verify that jumbo frames are functioning correctly in your environment, you can use the vmkping command on your ESXi host:

vmkping -I vmk1 -s 8972 -d 192.168.67.250

The -d flag sets the don't-fragment bit and -s sets the ICMP payload size. With an MTU of 9000 configured on the distributed or standard switch, 8972 bytes is the largest payload that fits, because the IP header (20 bytes) and the ICMP header (8 bytes) are added on top of it. If you see the error sendto() failed (Message too long), the packet is too large to be transmitted without fragmentation; a payload of 8973 bytes, for example, always triggers it at MTU 9000. If the error occurs with 8972 bytes, check your vSwitch settings, your physical switches, and your iSCSI target.
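The 8972-byte figure follows from the MTU minus the IPv4 header (20 bytes) and the ICMP header (8 bytes). A small sketch of that calculation, with hypothetical helper names `vmkping_payload` and `vmkping_cmd`, which only prints the command instead of running it:

```shell
#!/bin/sh
# Largest ICMP payload that fits in one unfragmented IPv4 packet:
# MTU minus 20 bytes (IPv4 header) minus 8 bytes (ICMP header).
vmkping_payload() {
    mtu=$1
    echo $(( mtu - 20 - 8 ))
}

# Build (but do not run) the vmkping command for an interface, MTU and target.
vmkping_cmd() {
    vmk=$1
    mtu=$2
    target=$3
    echo "vmkping -I $vmk -s $(vmkping_payload "$mtu") -d $target"
}

vmkping_cmd vmk1 9000 192.168.67.250
```

The same helper gives 1472 bytes for a standard MTU of 1500, which is useful for confirming basic connectivity before testing jumbo frames.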

Conclusion

By increasing the MaxIoSizeKB to 512 KB, verifying jumbo frame functionality with vmkping, and enabling Round Robin, I optimized my iSCSI setup to leverage the full potential of my 2.5G network.

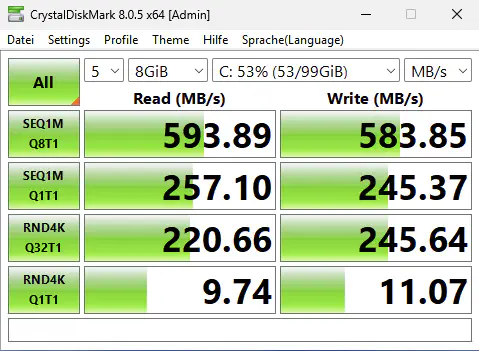

CrystalDiskMark Benchmark

In my tests with CrystalDiskMark, I observed that both network adapters showed significant performance improvements as a result of these optimizations. These adjustments allow my Unraid and iSCSI configuration to deliver a robust and high-performance block storage solution tailored to my workloads.

Disclaimer

The settings and configurations described in this article are specific to my environment and were tested extensively within my setup. While these adjustments significantly improved performance for my use case, they may not be universally applicable. It’s essential to test these settings in your environment before implementing them, as results may vary depending on hardware, network, and workload specifics. These optimizations are not intended as a blanket recommendation.