Tails from the Lab – Lab setup

A brief blog about setting up my nested lab.

Tags: vcf, nsx, homelab, minisforum, intel, amd


2025-09-12 17:00 +0200

Introduction

I have written a lot about my lab setup in the past, but it was always about the hardware and never about how I manage my labs or, more recently, how I have built up my VCF fleet. I want to change that now, as I have received an increasing number of requests for more information.

So let’s jump right in. First things first, let’s take a look at the physical lab infrastructure. That’s my basis for everything else.

Physical lab infrastructure

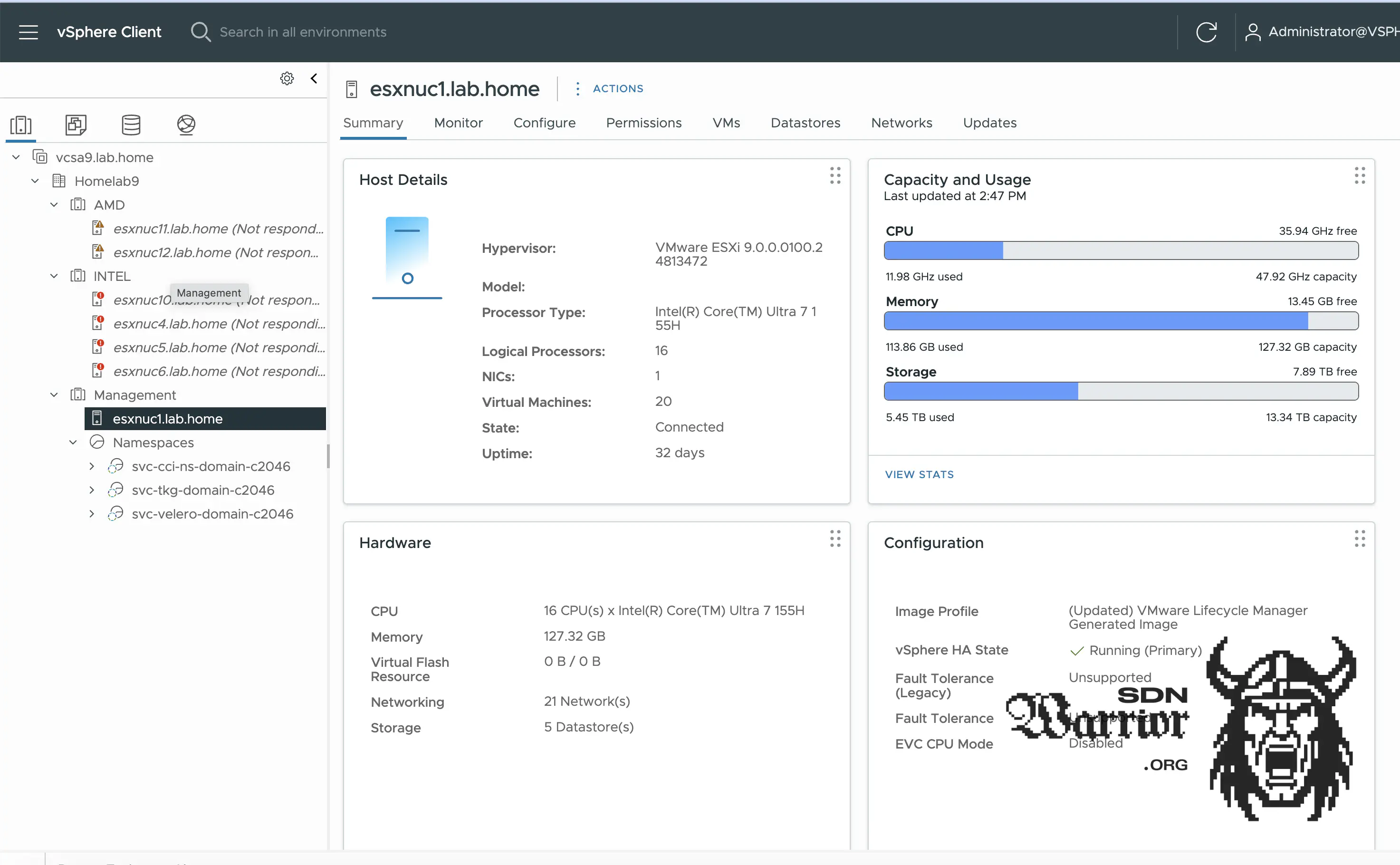

I currently own eight Minisforum servers and one NUC Ultra 7. The Minisforum servers run my workloads and are split into two clusters, because some of them have AMD CPUs and the others have Intel CPUs (running VMs cannot be vMotioned between AMD and Intel hosts). My NUC forms its own cluster and runs my always-on workloads, as the Minisforum servers consume too much power and generate too much heat for 24/7 operation.

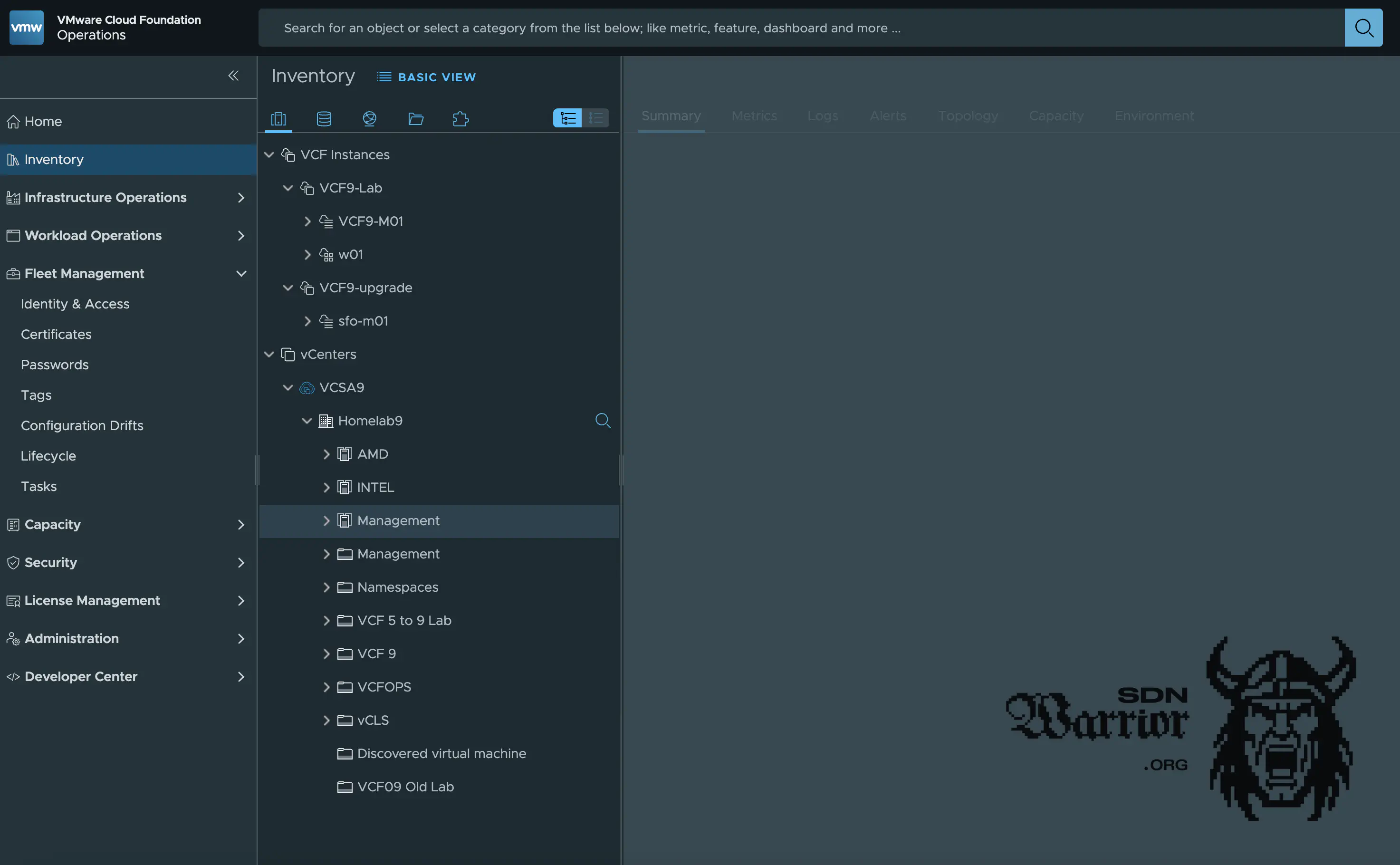

Lab vCenter

As you can see in the image, two Minisforum servers are currently not part of any cluster for licensing reasons. This will change in the coming weeks; for now, they run Proxmox for testing purposes.

Management NUC

The NUC is the foundation of my entire lab. Most of my fleet runs on it, including a VKS Supervisor cluster as a single deployment. Important services provided by the NUC are:

- Active Directory (Microsoft)

- Veeam Backup Server

- VCF Operations

- VCF Operations for Logs

- VCF Cloud Proxy

- VCF Fleetmanager

- FortiGate log server (for my FortiGate 40F)

- VKS Supervisor with Foundation Load Balancer

- VCF Offline Repository

- vCenter for the Lab

- NetBox

- mDNS Repeater (It’s not relevant for the lab, but it is for my number one service owner, because without it, you can’t turn on the TV with Siri and watch Netflix.)

- Windows 11 Client (There are things I can’t do with my Mac.)

Of course, the NUC is pretty busy, and I’m considering getting a second one, because used units don’t cost that much and don’t take up much space. Bonus points: NUCs are designed for 24/7 operation.

Alternatively, I could use memory tiering, but that would require reinstalling the little guy, and somehow I’m too lazy to do that.

My self-built storage (which I’ve described here before) runs another domain controller, my root CA and my Ansible host.

All my systems get their time via NTP from my ToR switch; MikroTik, the old Swiss Army knife, handles this wonderfully.
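To check that the ToR switch actually answers NTP requests, a single SNTP query is enough. The following Python sketch is an illustration only, not part of the lab’s tooling: it sends one client request (version 4, mode 3) to UDP port 123 and converts the server’s transmit timestamp from the NTP epoch (1900) to Unix time. The host name used in the usage note is a hypothetical placeholder.

```python
import socket
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2_208_988_800


def build_request() -> bytes:
    """48-byte SNTP client request: LI = 0, version = 4, mode = 3 (client)."""
    first_byte = (0 << 6) | (4 << 3) | 3  # = 0x23
    return bytes([first_byte]) + bytes(47)


def transmit_time(response: bytes) -> float:
    """Return the server's transmit timestamp (bytes 40-47) as Unix time."""
    if len(response) < 48:
        raise ValueError("NTP response shorter than 48 bytes")
    seconds, fraction = struct.unpack_from("!II", response, 40)
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def query(server: str, timeout: float = 2.0) -> float:
    """Send one request to UDP port 123 and return the server's time as Unix time."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_request(), (server, 123))
        data, _ = sock.recvfrom(512)
    return transmit_time(data)
```

For example, `time.time() - query("tor-switch.lab.local")` (hypothetical host name) gives a rough clock offset for the local machine, ignoring network delay.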

Storage

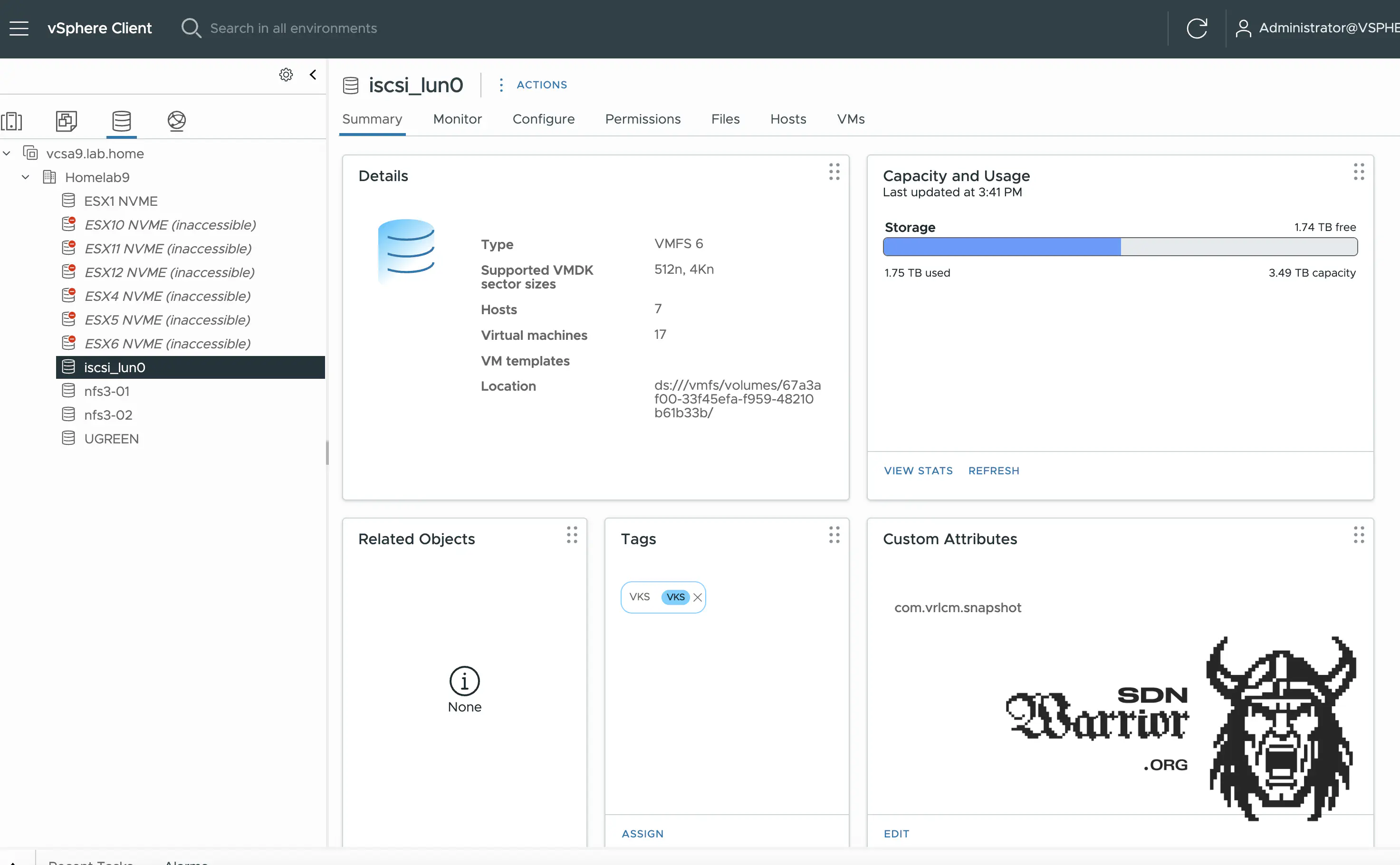

Let’s move on to the exciting topic of storage. Here, I have a combination of local and shared storage. Each server has a 2 TB local NVMe installed on which the hypervisor itself is located, and the rest of the NVMe is provided as VMFS storage. On my Unraid server, I provide 4 TB as iSCSI storage and 2x 4 TB as NFS storage. The storage on Unraid is implemented on NVMes, but without Raid. If a disk fails, I just lose a share.

Lab Storage (click to enlarge)

Now you might ask yourself why I did it this way. iSCSI lets me build a multipath fabric, which makes it my fastest storage: I have 2x 10 Gb/s iSCSI paths and can use them to full capacity. All my servers have 2x 10 Gb/s network ports (except for my small management NUC). My iSCSI storage runs the VMs that need maximum storage performance. I use NFS for nested VCF labs where I don’t want to use vSAN, and most of the time I don’t want vSAN in my lab for resource reasons. Fortunately, VCF 9 now supports this without any problems. But why did I build two NFS shares and not just one large share?

Because my storage only supports NFS 3 (NFS 4 keeps causing problems with Unraid, and VCF 9 requires NFS 3 anyway if you want to use it as primary storage), and NFS 3 does not support multipathing. For maximum performance, the first NFS share uses the first physical adapter of my storage system and the second NFS share uses the second one, so the two shares do not interfere with each other. In addition, the PCIe bus to which the NVMes are connected does not deliver more than 10 Gb/s anyway, so a single share could not use more than one adapter’s bandwidth.
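The reasoning behind the iSCSI/NFS split boils down to simple arithmetic. This idealized Python model is an illustration, not a measurement: it assumes two 10 Gb/s adapters on the storage side, that an NFS 3 datastore only ever uses one of them, and, following the PCIe note above, that a single NFS share tops out at about 10 Gb/s. Protocol overhead and contention between iSCSI and NFS traffic are ignored, and the datastore names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

LINK_GBPS = 10  # assumption: each physical adapter on the storage side is 10 Gb/s


@dataclass(frozen=True)
class Datastore:
    name: str
    protocol: str                           # "iscsi" or "nfs3"
    adapters: tuple[int, ...]               # storage-side adapters the datastore is reachable on
    backend_gbps: Optional[float] = None    # optional ceiling of the disks/PCIe link behind it


def usable_paths(ds: Datastore) -> int:
    # iSCSI with multipathing can spread I/O over every bound adapter;
    # an NFS 3 mount is one session to one server address, so it uses one adapter.
    return len(ds.adapters) if ds.protocol == "iscsi" else 1


def ceiling_gbps(ds: Datastore) -> float:
    """Best-case throughput of a single datastore."""
    network = usable_paths(ds) * LINK_GBPS
    return network if ds.backend_gbps is None else min(network, ds.backend_gbps)


def aggregate_nfs_gbps(shares: list[Datastore]) -> float:
    """Best-case combined throughput when all NFS 3 shares are busy at once."""
    load_per_adapter: dict[int, float] = defaultdict(float)
    for ds in shares:
        load_per_adapter[ds.adapters[0]] += ceiling_gbps(ds)  # the one adapter NFS 3 uses
    # Shares pinned to the same adapter have to split its 10 Gb/s.
    return sum(min(load, LINK_GBPS) for load in load_per_adapter.values())
```

With one share per adapter, the two NFS shares together reach 20 Gb/s in this model; pinning both to the same adapter halves that to 10 Gb/s, which is exactly the interference the split avoids.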

If I want to use VCF with vSAN, I use the local storage of my hypervisors, as I have had problems with vSAN and nested ESXi on my shared storage from time to time.

DRS and Network

Let’s move on to my DRS and network settings, which are relatively simple. DRS is set to fully automated, but with the conservative migration threshold, as I don’t want any “unnecessary” DRS migrations of my nested ESX servers. As a rule, I think about where to place my VMs beforehand, and then they should stay there.
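What the conservative setting means can be shown with a simplified model: in fully automated mode, DRS applies a recommendation automatically only if its priority falls within the migration threshold, and the conservative end of the slider (level 1) applies only priority-1 recommendations, such as those required for maintenance mode or affinity rules. The Python sketch below is an illustration of that rule, not the DRS algorithm itself; VM and host names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    vm: str
    target_host: str
    priority: int  # 1 = mandatory (e.g. maintenance mode, affinity rule) ... 5 = small balance gain


def auto_applied(recs: list[Recommendation], threshold: int) -> list[Recommendation]:
    """Recommendations a fully automated cluster applies at a given migration threshold.

    Simplified model: 1 = conservative, 3 = default, 5 = aggressive.
    """
    if not 1 <= threshold <= 5:
        raise ValueError("migration threshold must be between 1 and 5")
    return [r for r in recs if r.priority <= threshold]
```

At threshold 1, only a mandatory move such as evacuating a host for maintenance happens; load-balancing moves of the nested ESX VMs are left alone, which is the behavior described above.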

Since not everything I run is a nested VCF lab, DRS generally stays enabled: some deployments check whether DRS is enabled, VKS for example.

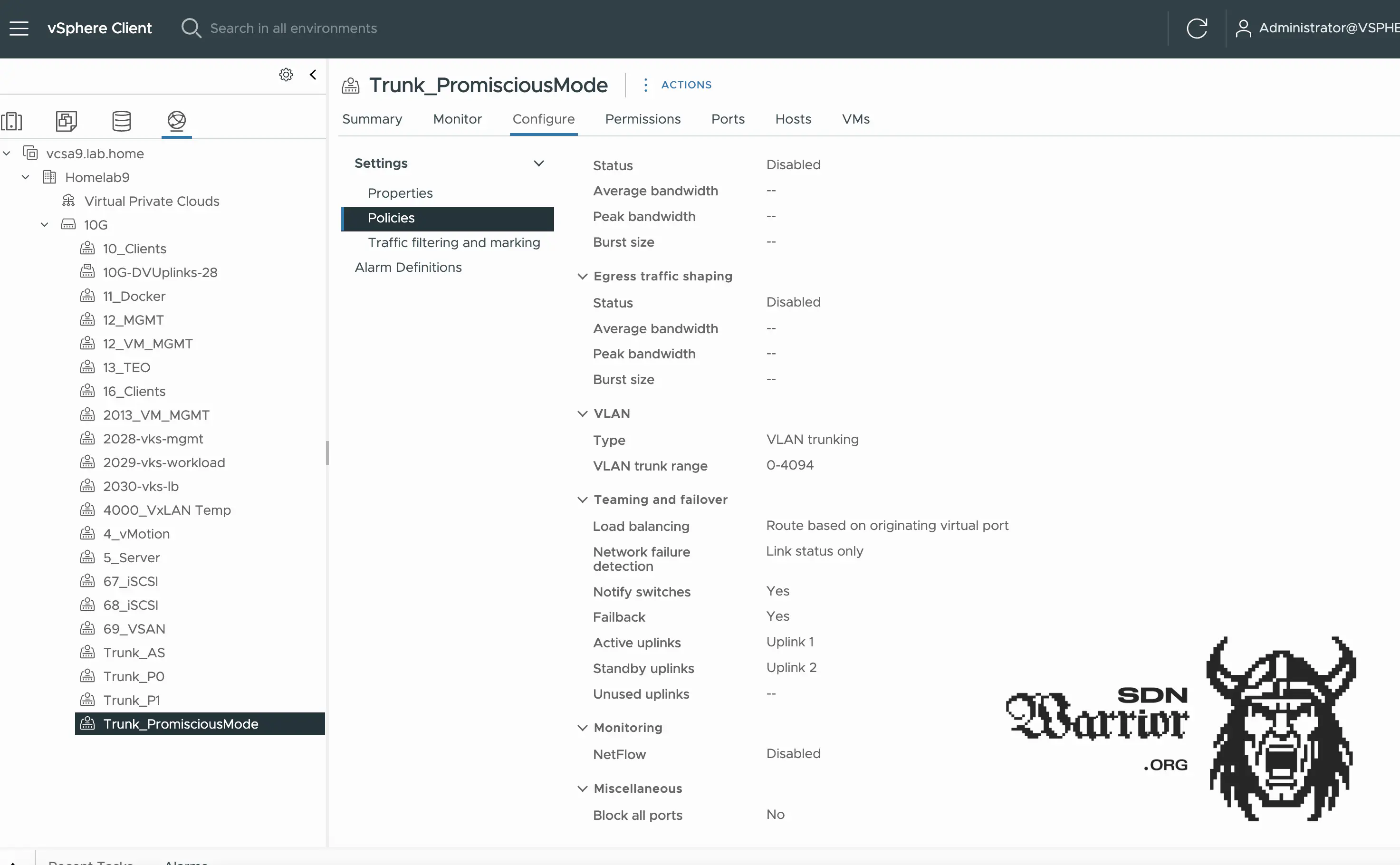

My network settings are currently still under construction. At the moment, I have a distributed switch for all three clusters, but last year showed that this is not the best setup when hosts are frequently down. My new plan is to have a separate vDS for each cluster. This is more flexible and I don’t constantly have the problem that my vDS is no longer in sync when I need a new network on my management NUC.

My port groups are usually active/active on both uplinks. There are three exceptions: my trunk port groups with MacLearning. Here, I have a trunk that only uses uplink 1 of the vDS and a trunk port group that uses uplink 2 of the vDS. This is important for NSX Labs if you want to test traffic steering of the NSX Teaming Policy. Another exception is my port group on which promiscuous mode is enabled. Here, I use active/standby, as otherwise I would have duplicated packets and performance losses.

Lab Network (click to enlarge)
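Why a separate vDS per cluster helps can be shown with a toy model of the plan described above: a vDS change is pushed only to member hosts that are powered on, so every powered-off member falls out of sync. The host names and power states in this Python sketch are hypothetical; only the cluster layout (management NUC, AMD cluster, Intel cluster) follows the text.

```python
from collections import defaultdict

# Hypothetical inventory: host -> (cluster, powered on?)
HOSTS = {
    "nuc-01": ("mgmt", True),
    "ms-amd-01": ("amd", False),
    "ms-amd-02": ("amd", False),
    "ms-intel-01": ("intel", True),
    "ms-intel-02": ("intel", False),
}


def vds_members(layout: str) -> dict[str, set[str]]:
    """Map each vDS to its member hosts for a 'shared' or 'per-cluster' layout."""
    if layout == "shared":
        return {"vds-lab": set(HOSTS)}
    if layout == "per-cluster":
        members: dict[str, set[str]] = defaultdict(set)
        for host, (cluster, _) in HOSTS.items():
            members[f"vds-{cluster}"].add(host)
        return dict(members)
    raise ValueError(f"unknown layout {layout!r}")


def out_of_sync_after_change(layout: str, vds: str) -> list[str]:
    """Member hosts that miss a change to `vds` because they are powered off."""
    return sorted(host for host in vds_members(layout)[vds] if not HOSTS[host][1])
```

Adding a port group for the NUC on the shared switch leaves three powered-off hosts out of sync in this model; with one vDS per cluster, the same change only touches the NUC.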

If you want to know more about this topic, you can read my article on MAC learning, in which I describe the problem and show the performance differences. For a VCF 9 installation, promiscuous mode is required; otherwise, the deployment fails when the VCF installer tries to migrate the ESX servers to the distributed switch.
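The uplink rules for the port groups can be written down as a small consistency check. This is a hypothetical Python sketch over an in-memory description of the port groups, not a vSphere API call; port group and uplink names are placeholders. It flags the one combination the text warns about (promiscuous mode on more than one active uplink) plus two basic teaming mistakes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    name: str
    active: tuple[str, ...]
    standby: tuple[str, ...] = ()
    promiscuous: bool = False
    mac_learning: bool = False  # informational only, not checked here


def check(pg: PortGroup) -> list[str]:
    """Return human-readable problems with a port group's teaming and security settings."""
    problems = []
    if not pg.active:
        problems.append(f"{pg.name}: no active uplink")
    if set(pg.active) & set(pg.standby):
        problems.append(f"{pg.name}: uplink listed as both active and standby")
    if pg.promiscuous and len(pg.active) > 1:
        # Promiscuous mode on several active uplinks can hand the nested hosts
        # the same frame more than once (duplicate packets, lost performance).
        problems.append(f"{pg.name}: promiscuous mode needs active/standby, not active/active")
    return problems
```

The two MAC-learning trunks, each pinned to a single uplink, pass the check, as does the promiscuous port group once it is set to active/standby.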

VCF Fleet

My fleet setup is a bit of a hodgepodge. Since I need VCF Operations for licensing, it already runs on my management NUC, as mentioned. VCF Automation, on the other hand, runs as a VM on an MS-A2 and is only powered on when I really need it, as it is a real resource hog. In addition, I have recently been running a VKS cluster for testing purposes. I want to use VCF Automation to automatically deploy VMs that run in a vSphere Namespace. Fancy new things.

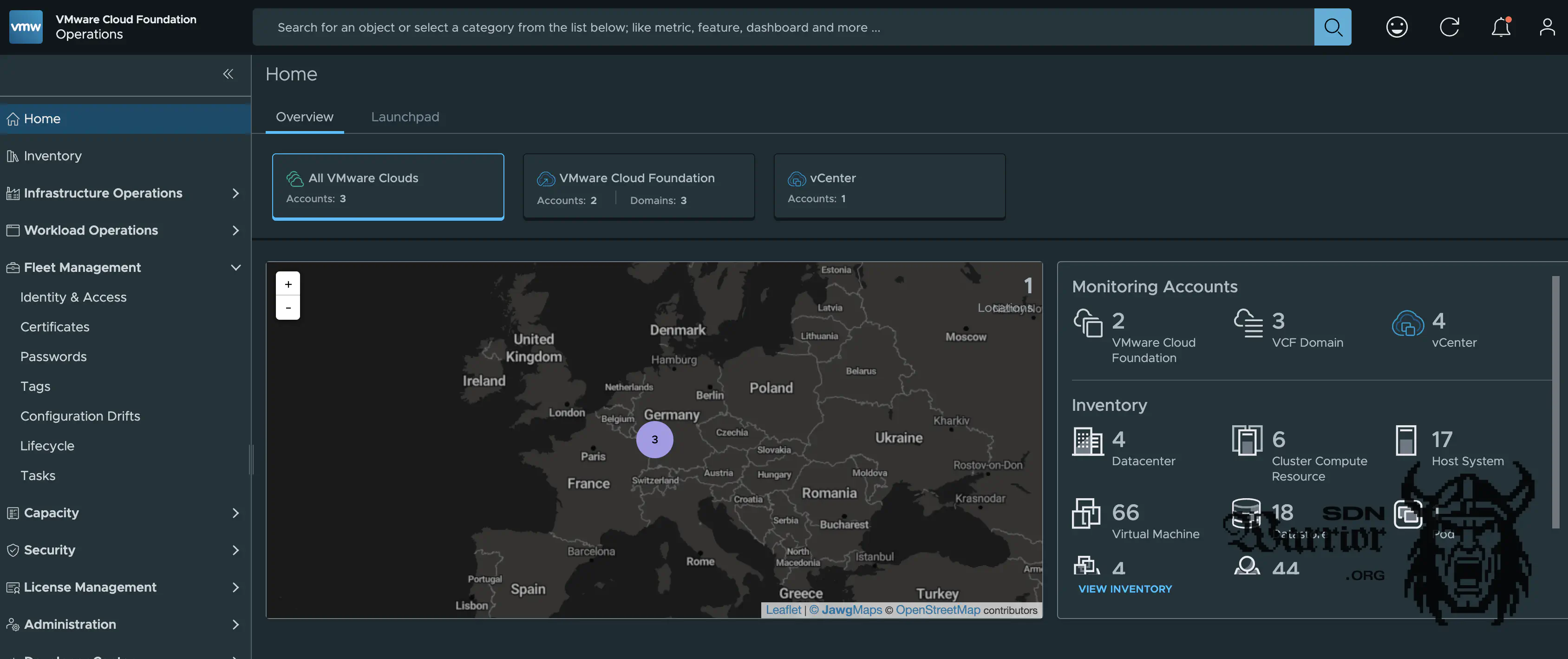

VCF Operations

I have several VCF instances onboarded in my central VCF Operations. Since I only have a limited number of licenses, I remove labs that I don’t currently need from VCF Operations. This is a bit annoying, but with the current licensing model, there is unfortunately no other way.
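The license juggling is essentially a budget problem. The Python sketch below is a rough illustration under two stated assumptions: core-based licensing with a minimum of 16 licensed cores per CPU, and nested ESX hosts being counted by their virtual sockets and cores like physical ones. Check your own entitlement; the lab names and numbers are hypothetical.

```python
# Assumption: core-based subscription with a minimum of 16 licensed cores per CPU,
# and nested ESX hosts counted by their virtual sockets/cores like physical ones.
MIN_CORES_PER_CPU = 16


def licensed_cores(hosts: list[tuple[int, int]]) -> int:
    """hosts: one (sockets, cores_per_socket) tuple per ESX host."""
    return sum(sockets * max(cores, MIN_CORES_PER_CPU) for sockets, cores in hosts)


def labs_that_fit(
    entitlement: int, labs: list[tuple[str, list[tuple[int, int]]]]
) -> tuple[list[str], int]:
    """Onboard labs in priority order, skipping any lab that would exceed the entitlement."""
    used, onboarded = 0, []
    for name, hosts in labs:
        need = licensed_cores(hosts)
        if used + need <= entitlement:
            used += need
            onboarded.append(name)
    return onboarded, used
```

Under these assumptions, a four-host nested lab with 8 vCPUs per host already counts as 64 cores, so small labs eat into the budget faster than their vCPU count suggests.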

VCF Operations for Networks is currently not deployed because my vDS kept falling out of sync, which caused problems with IPFIX. I will address this when I rebuild the vDS. After that, I will try again to get NetFlow running on my MikroTik so that I can get useful network insights. This is still a work in progress.
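Before pointing a full collector at the MikroTik, it can help to confirm that flow export packets arrive at all. This Python sketch is an illustration, not a collector: it decodes only the fixed header of NetFlow v5 (24 bytes), NetFlow v9 (20 bytes) and IPFIX (version 10, 16 bytes, RFC 7011) packets. The listening port is an assumption; it has to match the target port configured on the exporter, and 4739 is the IANA-registered IPFIX port.

```python
import socket
import struct


def parse_flow_header(packet: bytes) -> dict:
    """Decode the fixed header of a NetFlow v5, NetFlow v9 or IPFIX (version 10) packet."""
    if len(packet) < 2:
        raise ValueError("packet too short")
    (version,) = struct.unpack_from("!H", packet, 0)
    if version == 5:  # 24-byte header
        count, _uptime, secs, _nsecs, seq, _etype, _eid, _sampling = struct.unpack_from(
            "!HIIIIBBH", packet, 2
        )
        return {"version": 5, "records": count, "export_time": secs, "sequence": seq}
    if version == 9:  # 20-byte header
        count, _uptime, secs, seq, source_id = struct.unpack_from("!HIIII", packet, 2)
        return {"version": 9, "records": count, "export_time": secs,
                "sequence": seq, "source_id": source_id}
    if version == 10:  # IPFIX, 16-byte header
        length, secs, seq, domain = struct.unpack_from("!HIII", packet, 2)
        return {"version": 10, "length": length, "export_time": secs,
                "sequence": seq, "domain_id": domain}
    raise ValueError(f"unsupported export version {version}")


def listen(port: int = 4739, packets: int = 5) -> None:
    """Print the header of the next few export packets arriving on a UDP port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        for _ in range(packets):
            data, (addr, _port) = sock.recvfrom(65535)
            print(addr, parse_flow_header(data))
```

Steadily increasing sequence numbers in the printed headers are a quick sign that the exporter is sending and nothing is being dropped on the way.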

VCF Operations Overview

Final thoughts

My setup isn’t particularly complicated, and everything is designed to save resources and minimize 24/7 operation without sacrificing too much comfort. Anyone who has ever looked at the procedure for shutting down a VKS-enabled cluster knows what I mean. But not everything is perfect, and there is certainly room for improvement. My Ansible playbooks need to be revised because they no longer run properly with the latest version. In the future, I would also like to automate much more; ideally, my standard VCF deployments would be deployed fully automatically.

My monitoring with Uptime Kuma, including a Discord bot for notifications, is a good start, but it was never really finished. I’m also not quite sure where I want to go with my VCF Automation setup, but I think the next few weeks and months will tell. If you have any suggestions, questions, or improvements, please feel free to contact me. Homelabbing thrives on exchange.