VCF9 - Automation in All Apps mode

A quick introduction to VCF 9 Automation in All Apps mode


2026-01-12 02:00 +0100

Introduction

Ah Sh*t, Here We Go Again - Happy New Year! What was I thinking, letting you guys vote on what my next topic would be? But okay, I guess I only have myself to blame. Anyway, over 60% wanted me to cover VCF9 automation in All Apps mode. As most people know, there are two modes in VCF Automation: the so-called legacy mode, which is nothing more than VCF Automation 8.18, and the new and much more exciting All Apps mode.

The differences in brief: Legacy Mode follows a classic IaaS approach, focusing on the provision of individual VMs. All Apps Mode is application-centric. The aim is to deploy an application as a whole, with VMs, containers, network, and storage being merely consumable resources. VMs are consumed as a service in a “Kubernetes-native” manner. This is why VKS and NSX (with VPCs) are also mandatory components of the automation solution in VCF9.

Prerequisites

But okay, let’s get to the prerequisites for using automation: We need a VCF9 deployment. In my lab, I have deployed a management design (formerly consolidated design) with two vSphere clusters. The second cluster is not necessary, even though the current VCF 9 documentation claims otherwise.

We also need NSX with a T0 router in active/standby mode and a VKS Supervisor cluster. The active/standby T0 is still required for VPCs with stateful services such as Auto SNAT, because in VCF 9.0.1 the transit gateway inherits its HA mode from the T0.

Phew, that’s quite a lot. The article will not cover VCF9 deployment and NSX VPC setup. You can find the basics of VPCs in my blog here, here, and here.

But here I will show you VCF Automation Deployment, a basic VKS deployment (with VPCs), setting up an organization in Automation, and creating a VM in All Apps Mode.

VCF Automation Deployment

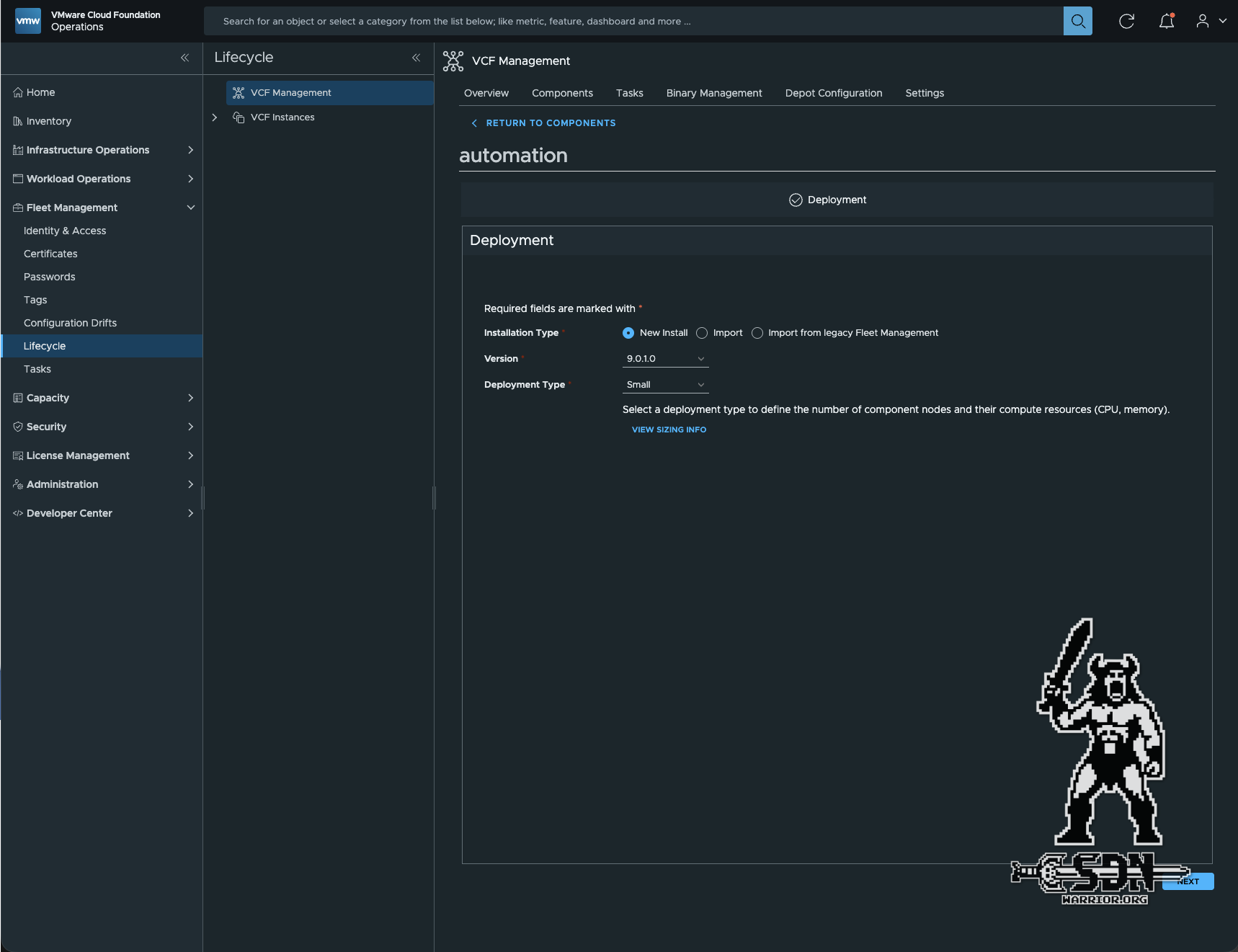

If Automation was not deployed by the VCF Installer, it can be added afterwards as a Day 2 operation via VCF Operations.

To deploy automation in small form factor, we need 1 FQDN/IP that serves as VIP and 2 additional IP addresses that are used as cluster node IP pool. I use the internal load balancer in my setup.

The actual setup is fairly straightforward. The component is installed via Operations Fleet Manager under Lifecycle.

Automation Deployment

I won’t go through every single step here, just the most important ones. When creating the certificate, I always create a wildcard certificate in the lab. It is important that the VIP IP address and the FQDN are entered in the SANs. In my lab, the SAN entries would be *.lab.vcf, 10.28.13.12.
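If you want to double-check a planned SAN list before requesting the certificate, a quick sketch like the following can help. It only mimics the usual matching rules (exact match for IPs, a wildcard covering exactly one left-most label); the FQDN `auto.lab.vcf` is a made-up example and not taken from my lab.

```python
import ipaddress


def san_covers(name: str, sans: list[str]) -> bool:
    """Return True if `name` (FQDN or IP) is covered by one of the SAN entries."""
    try:
        ip = ipaddress.ip_address(name)
    except ValueError:
        ip = None  # not an IP address, treat it as a DNS name

    for san in sans:
        if ip is not None:
            # IP SANs must match exactly; wildcards never apply to IPs.
            try:
                if ipaddress.ip_address(san) == ip:
                    return True
            except ValueError:
                continue
        elif san.startswith("*."):
            # A wildcard covers exactly one left-most label.
            head, _, rest = name.partition(".")
            if head and rest.lower() == san[2:].lower():
                return True
        elif san.lower() == name.lower():
            return True
    return False


sans = ["*.lab.vcf", "10.28.13.12"]
print(san_covers("auto.lab.vcf", sans))  # hypothetical VIP FQDN
print(san_covers("10.28.13.12", sans))   # VIP IP address
print(san_covers("a.b.lab.vcf", sans))   # two labels deep, not covered by the wildcard
```

The last call shows why a wildcard alone is not enough as soon as a name sits one level deeper than the wildcard domain.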

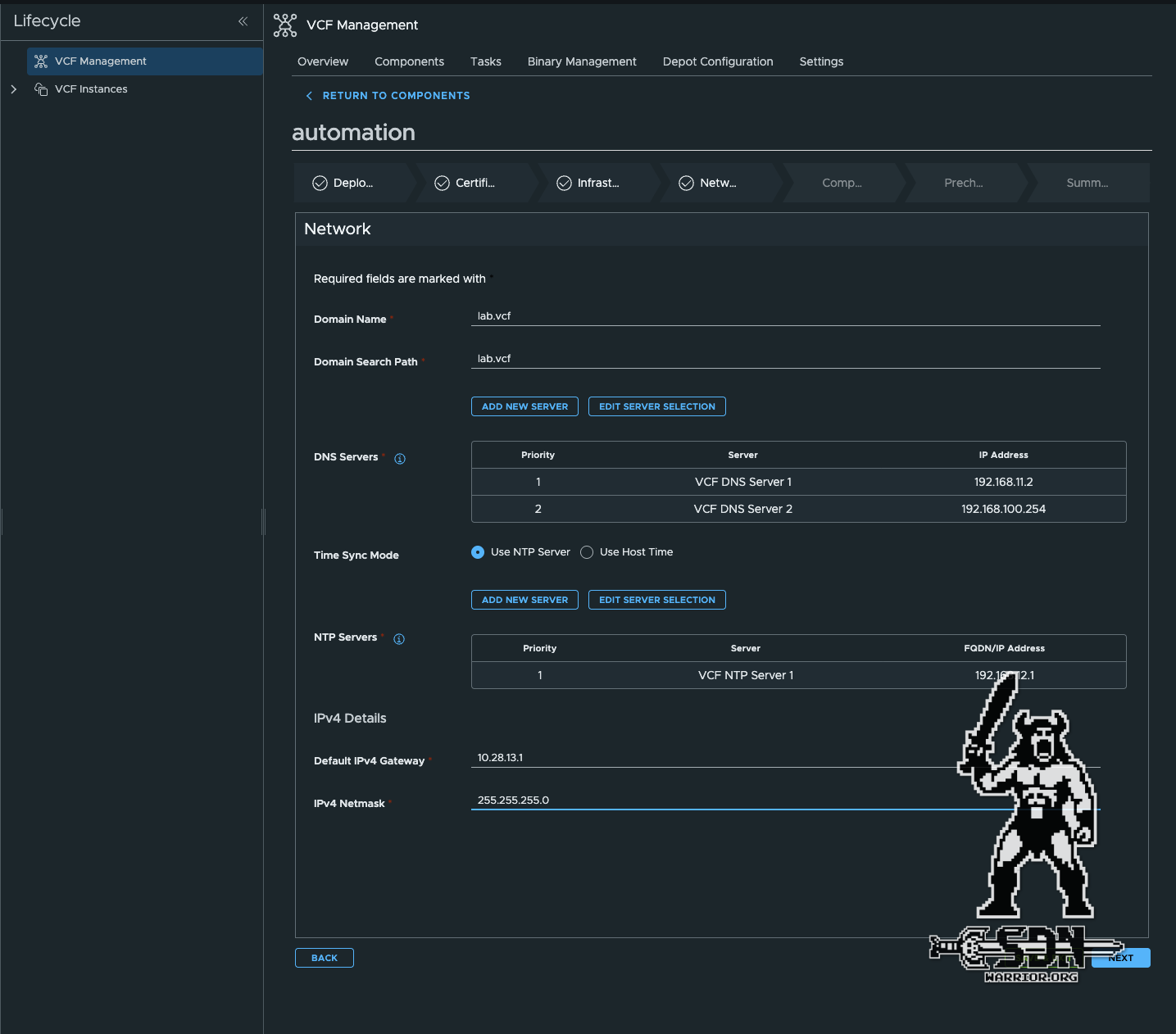

The network settings are also relatively self-explanatory. I deploy my automation to the VM MGMT Network, which was automatically created by VCF. I use the servers specified during the VCF installation as DNS and NTP servers. You don’t have to enter these again separately; the Edit button gives you a selection of all DNS and NTP servers known to VCF. This is somewhat counterintuitive.

Automation Network settings

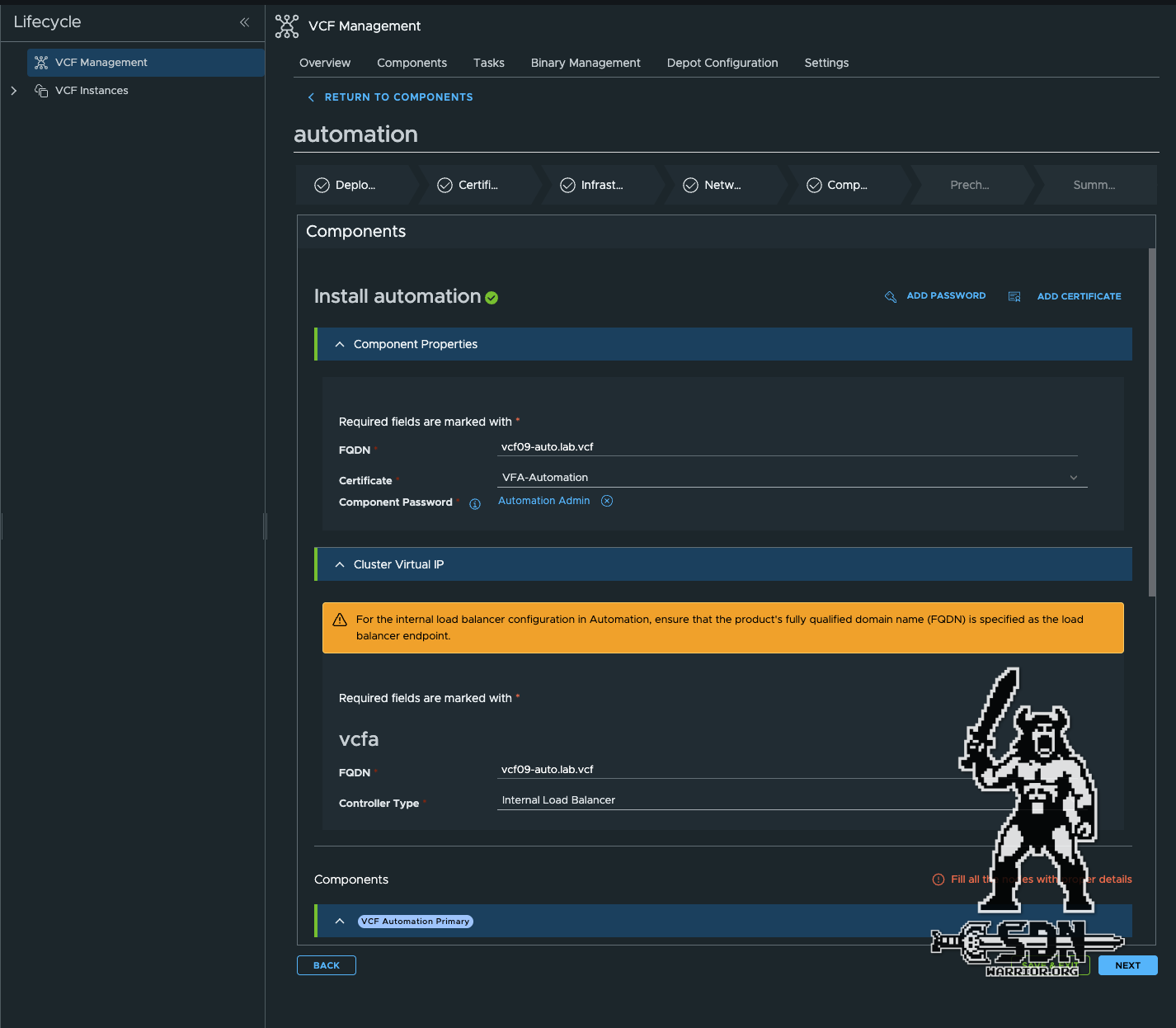

Now comes the component part, where many (like me) are probably a little confused at first. I don’t find the description here very clear. Since the internal load balancer is used, the FQDN of the component and the cluster VIP must be identical.

Automation Components

The IP address behind the FQDN is entered under Primary VIP. The Internal Cluster CIDR is used for internal communication. It defaults to 198.18.0.0/15 and must not overlap with existing networks. This range comes from the block reserved for benchmarking (RFC 2544) and is rarely used in production networks. The Cluster CIDR cannot be changed afterwards.
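Because the Cluster CIDR cannot be changed later, it is worth checking for overlaps before deploying. Here is a minimal sketch using Python's standard `ipaddress` module; the list of lab networks is purely hypothetical and should be replaced with your own.

```python
import ipaddress

# Default Internal Cluster CIDR of the Automation deployment.
INTERNAL_CLUSTER_CIDR = ipaddress.ip_network("198.18.0.0/15")


def overlapping(existing: list[str], candidate=INTERNAL_CLUSTER_CIDR) -> list[str]:
    """Return every existing network that overlaps the candidate CIDR."""
    return [n for n in existing if ipaddress.ip_network(n).overlaps(candidate)]


# Hypothetical networks, for illustration only.
lab_networks = ["10.28.13.0/24", "172.16.0.0/16", "198.19.4.0/24"]
print(overlapping(lab_networks))
```

Note that 198.18.0.0/15 spans 198.18.0.0 to 198.19.255.255, so a network in 198.19.x.x collides as well.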

Additional VIPs are not required in the standard setup and can be left blank. The two additional IP addresses I mentioned at the beginning are entered in the Cluster Node IP Pool. These also come from the VM MGMT network. Each automation node is assigned an IP address from this pool. A spare IP is required for upgrades, so the minimum size of the pool is always the number of automation nodes + 1.
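The IP arithmetic is simple, but easy to get wrong when planning the subnet. Here is a small sketch of the rule described above (pool = nodes + 1, plus one primary VIP). The class name is my own invention, and the three-node case is just an illustration of how the rule scales.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationIpPlan:
    """IP requirements for an Automation deployment (illustrative helper)."""

    nodes: int

    @property
    def node_pool_size(self) -> int:
        # One IP per node plus one spare IP that is needed for upgrades.
        return self.nodes + 1

    @property
    def total_ips(self) -> int:
        # Cluster node IP pool plus the primary VIP.
        return self.node_pool_size + 1


small = AutomationIpPlan(nodes=1)
print(small.node_pool_size, small.total_ips)

three_nodes = AutomationIpPlan(nodes=3)  # hypothetical larger deployment
print(three_nodes.node_pool_size, three_nodes.total_ips)
```

For the small form factor this gives a pool of 2 addresses and 3 addresses in total, which matches the numbers from the beginning of this section.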

If everything has been done correctly, the precheck will run without any problems. The deployment takes some time. With my hardware, it takes just over an hour, but I have seen deployments that take up to 3 hours.

After one or two cups of VCF Admin fuel, aka coffee, the installation should now be successfully complete and we can focus on the VKS deployment.

VKS Deployment with VPCs

First of all, VKS is not my area of expertise, so I’m going to do the simplest VKS deployment possible here. I’m using the NSX load balancer instead of AVI, and I’m using VPCs. I also use the simple installation and not the HA cluster of the supervisor. If you want to read more about VKS, I recommend my colleague Christian, who does interesting things with Antrea, VPCs, and VKS.

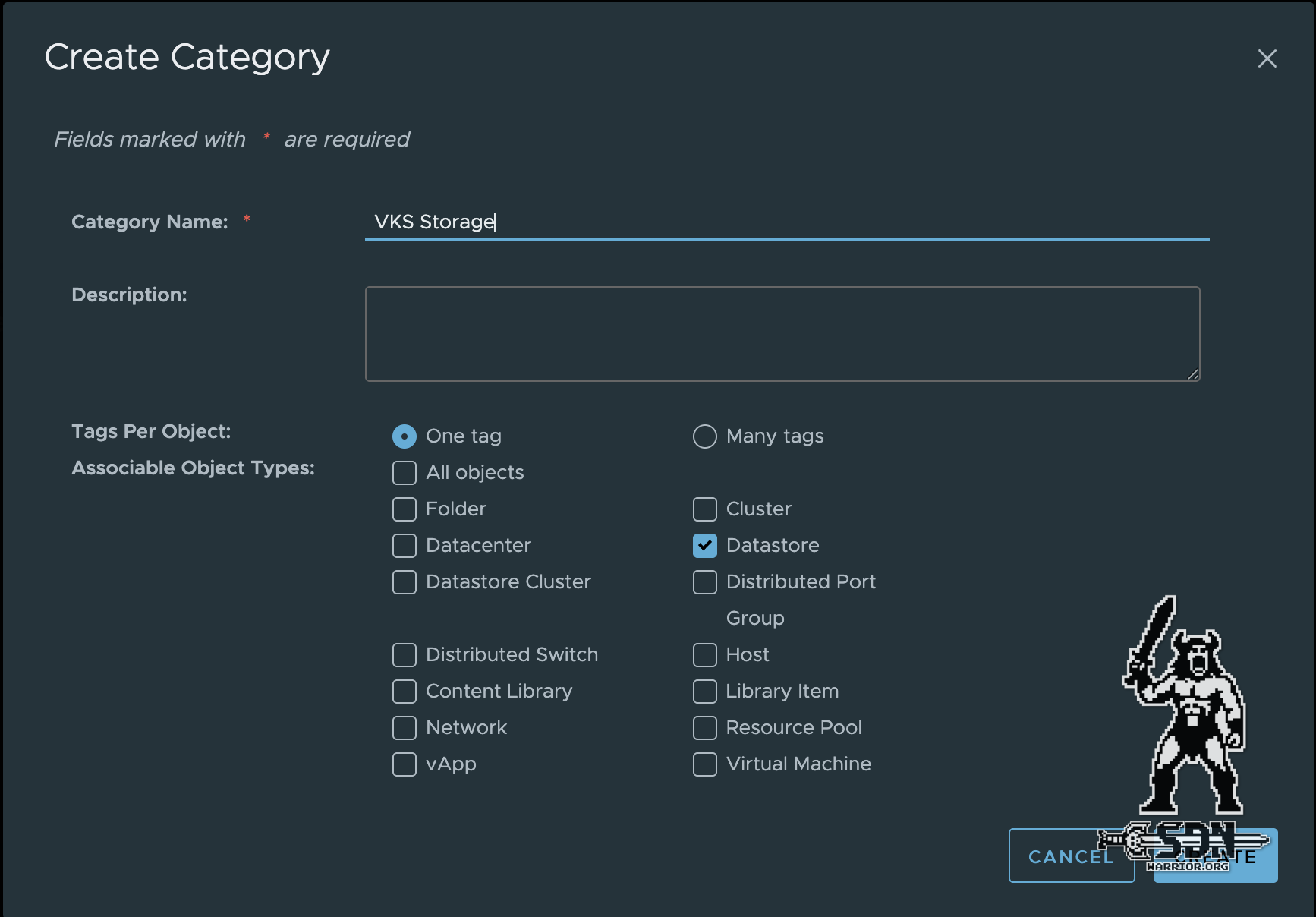

To install VKS, you must first create a storage policy. To do this, you must first create a tag and a tag category. The quickest way to do this is via vCenter by clicking on Storage and selecting Assign Tag. Select Add Tag and then Create New Category to create a datastore tag category.

Tag Category

It is important to select One Tag and Datastore here. Once the category has been created, a tag can be created and assigned to the storage.

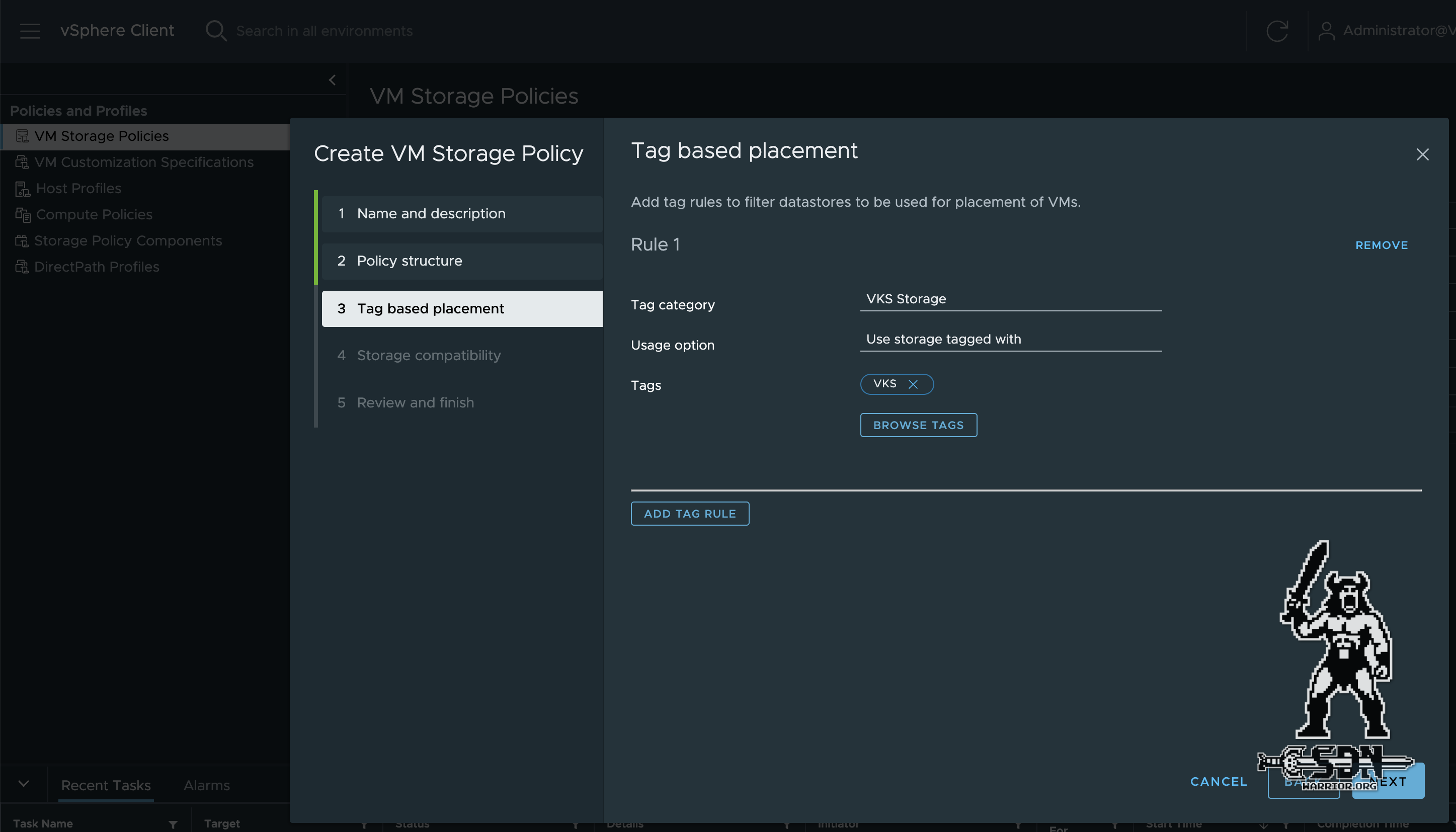

Then I create the appropriate storage policy under Policies and Profiles -> VM Storage Policies. This is necessary to ensure that the correct storage is selected during VKS deployment. The storage policy is tag-based and uses the previously created tag.

Storage Policy

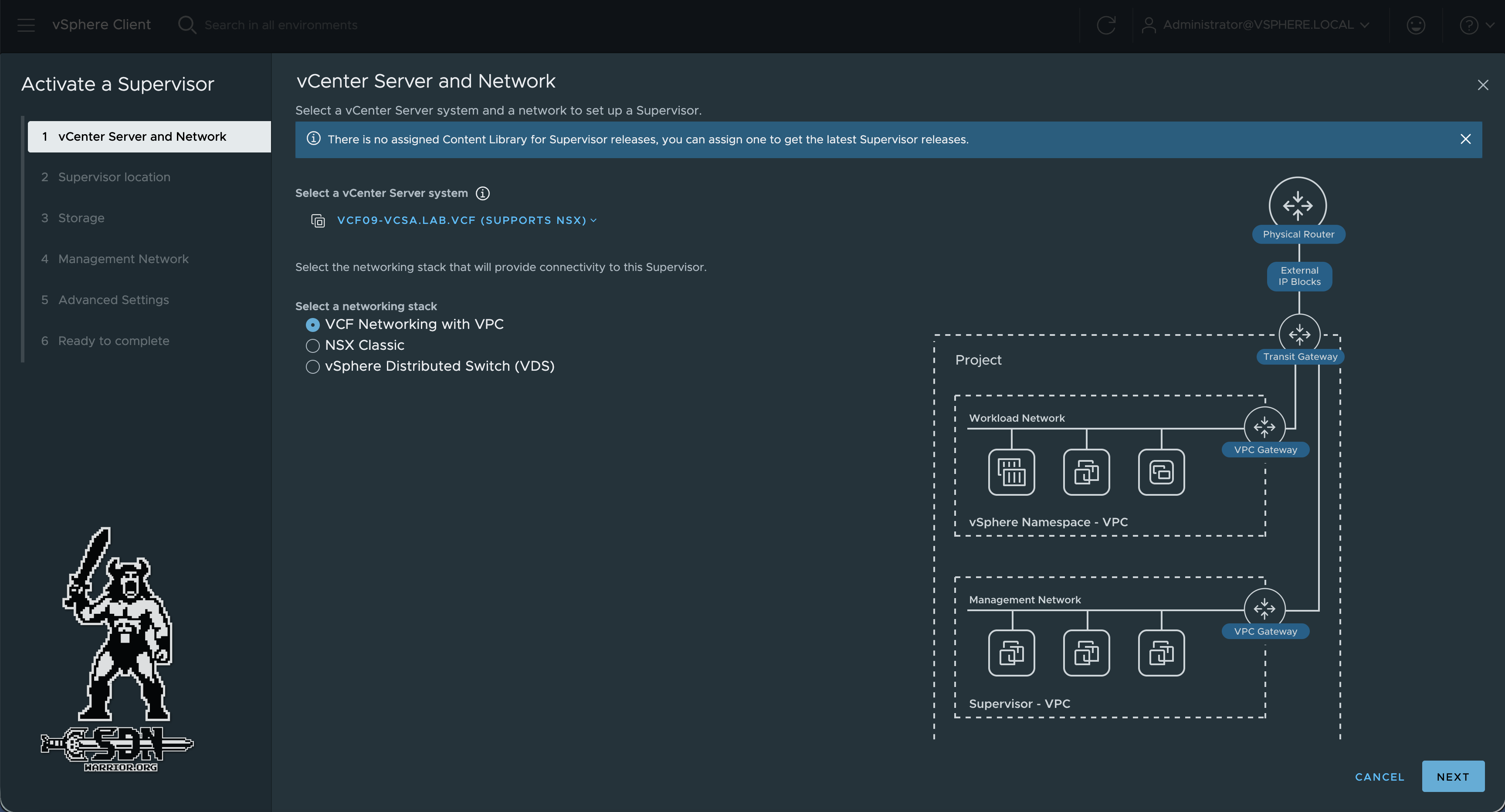

Next, we start the VKS deployment via the vCenter burger menu and the Supervisor Management menu item. During deployment, a content library is automatically created on the datastore behind the VKS storage policy. If you want, you can also create it in advance, but since this should be as simple as possible, I use the automatically generated content library.

VKS deployment

In my opinion, deployment via VPCs is the simplest method, as it requires the fewest parameters to be set during deployment. A new VPC must be created in advance for the supervisor cluster and a public VPC subnet for management.

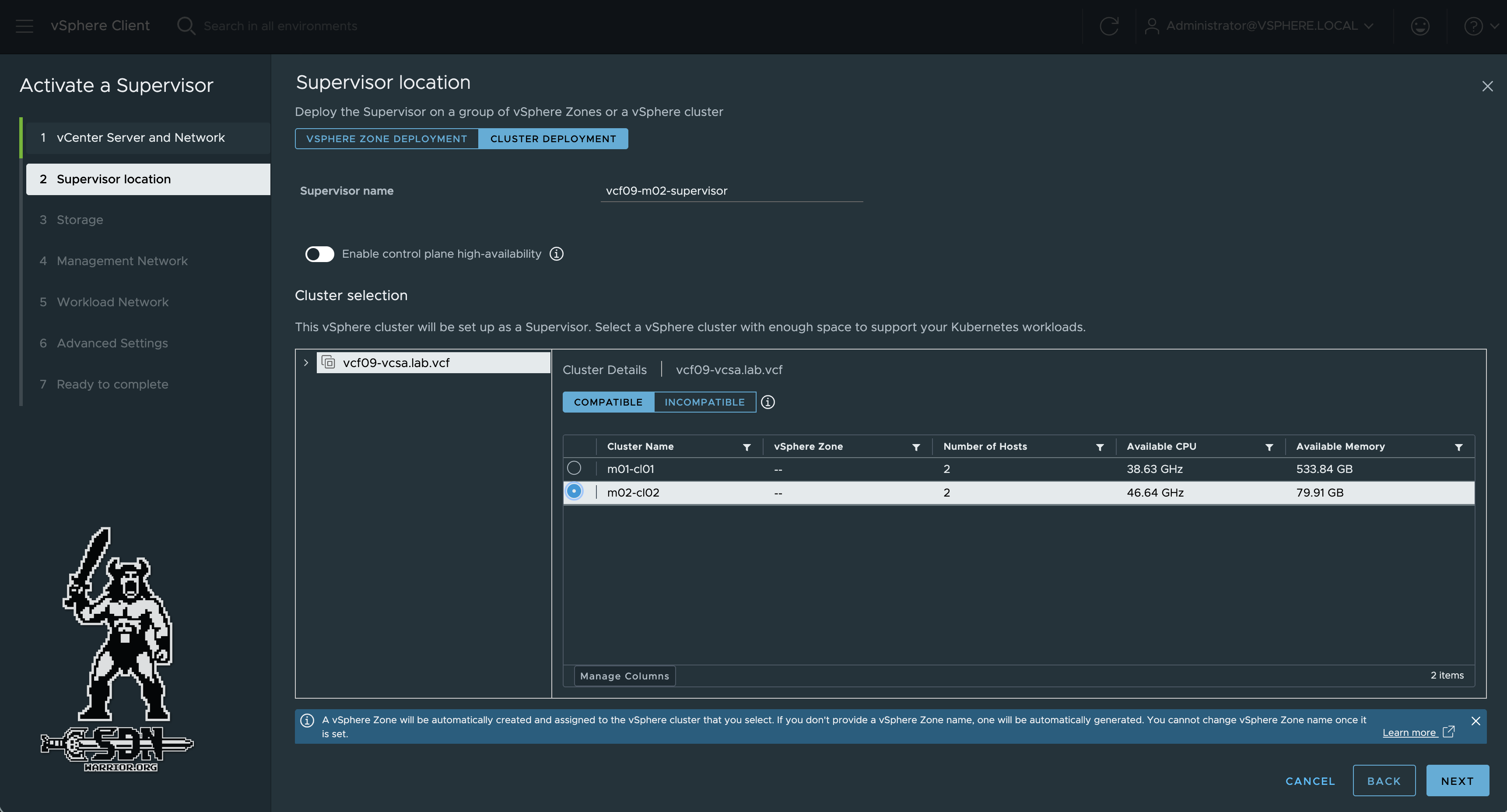

Next, the supervisor location is determined. Since this is a normal cluster deployment and not a vSphere with Zones setup, Cluster Deployment must be selected. In addition, a supervisor name is assigned (in lowercase letters) and, since this is to be a single node cluster, Control Plane HA remains disabled. As mentioned, I have two vSphere clusters in my setup and I want my supervisor to be in cluster m02-cl02.

VKS Supervisor location

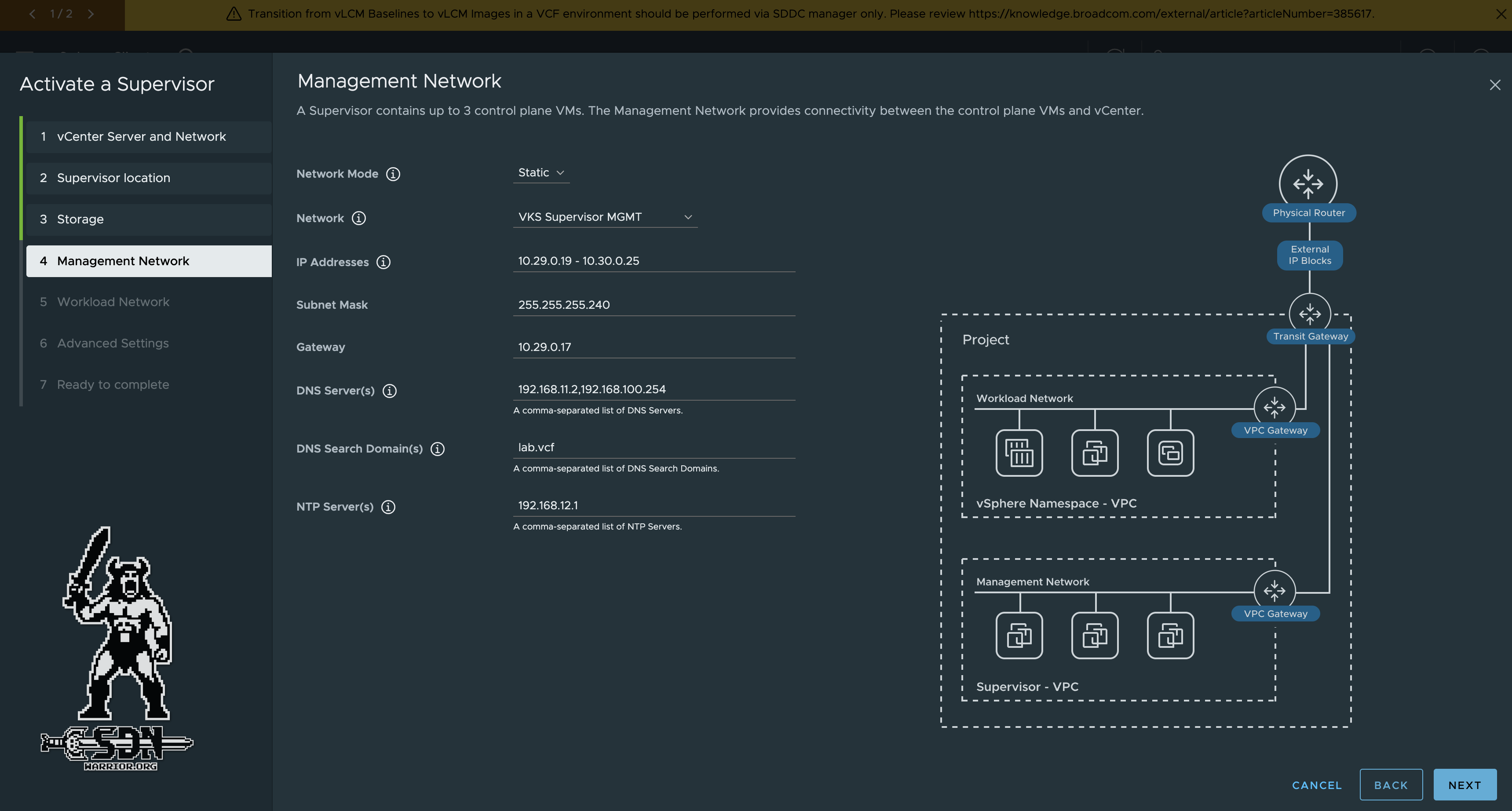

After that, the storage policy is assigned and you can proceed to the management network setup. Normally, a pool of at least 4 IP addresses (1 VIP, 3 supervisor nodes) is sufficient. In a simple deployment, even fewer IP addresses are required, but I have specified a larger range to enable scale-out in the future. The remaining details are fairly self-explanatory.

VKS Supervisor Management Network

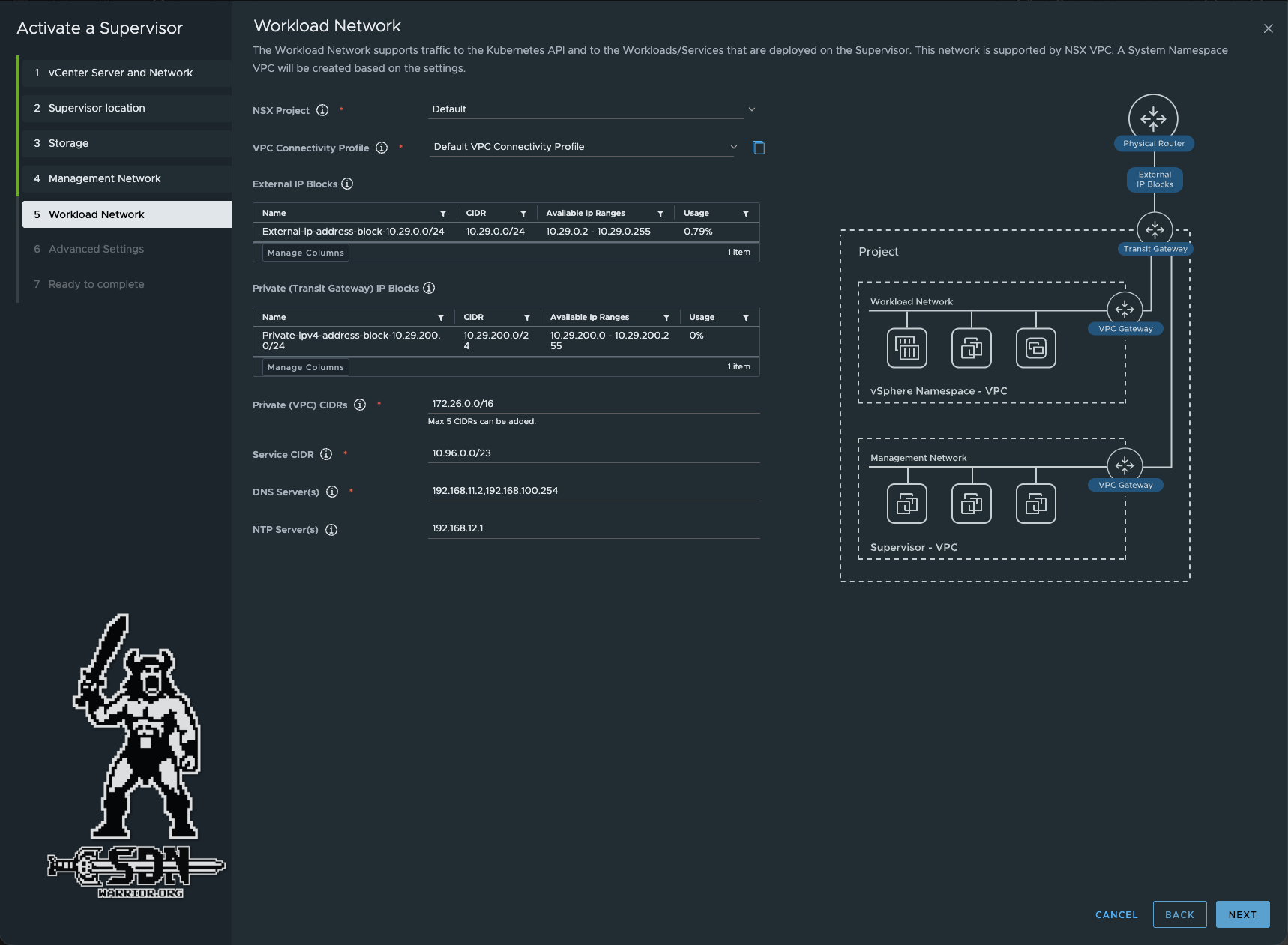

The next setting is the workload network, and the nice thing about the VPC setup is that most of it is already pre-filled and was specified during the NSX setup of the VPCs. Since I want to keep this example simple, I am using the default project from NSX. It would of course also be possible to put the supervisor in its own NSX project with its own T0.

The private (VPC) CIDRs can be freely assigned, as it is a non-routable network, so overlapping IP ranges are possible and permissible. Nevertheless, you should be careful not to enter any of your routable networks as private CIDRs. The service CIDR is preselected and usually does not need to be adjusted, and again comes from a privately reserved range.

DNS and NTP can be customized for the workers here, but this is not necessary in my case. These are addressed from the VPC with Auto SNAT, as a worker node cannot communicate directly via the private VPC CIDRs.

VKS Supervisor Workload Network

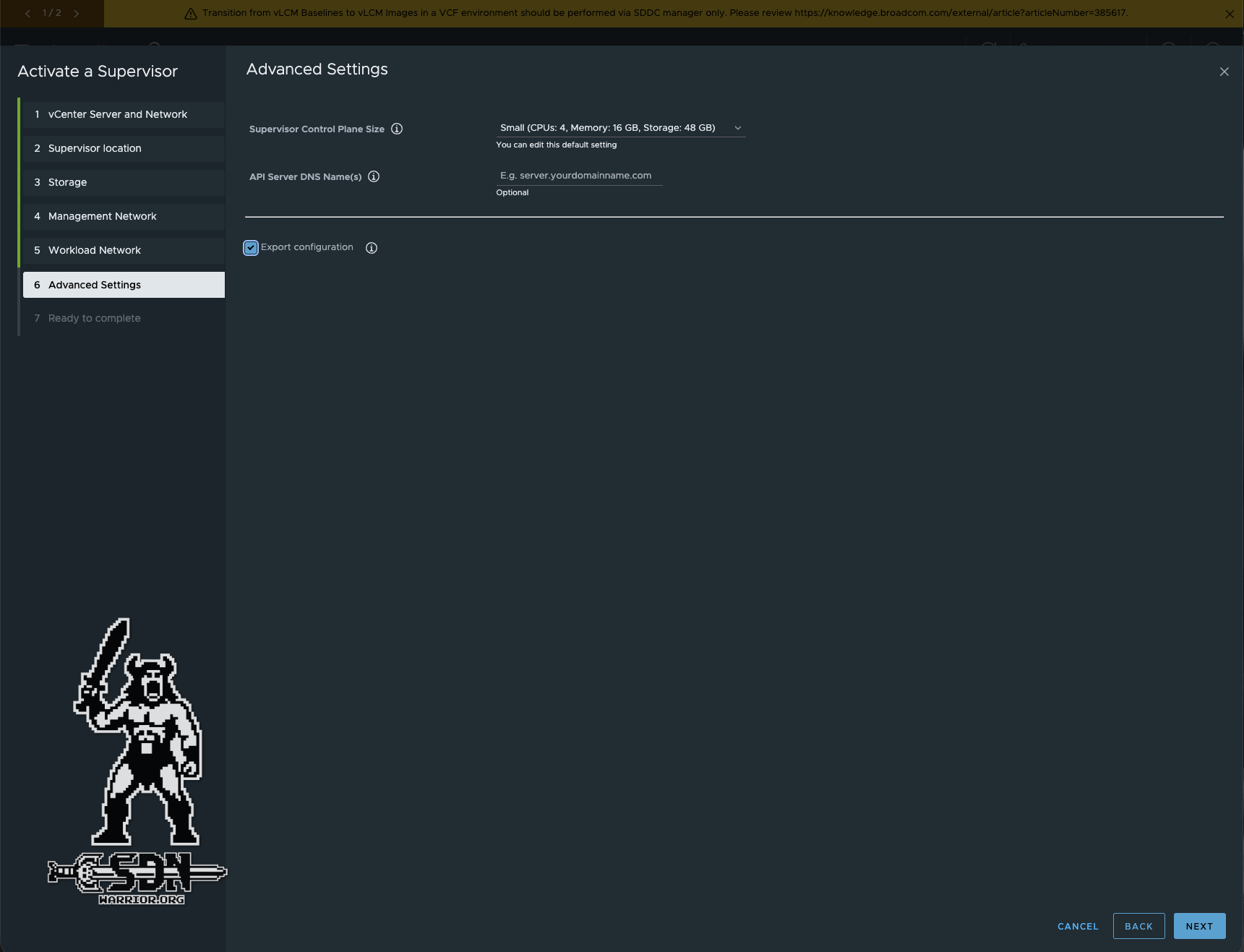

Finally, the supervisor control plane size is determined. I am using Small (4 vCPUs and 16 GB RAM per supervisor control node) here.

VKS Supervisor Control Plane

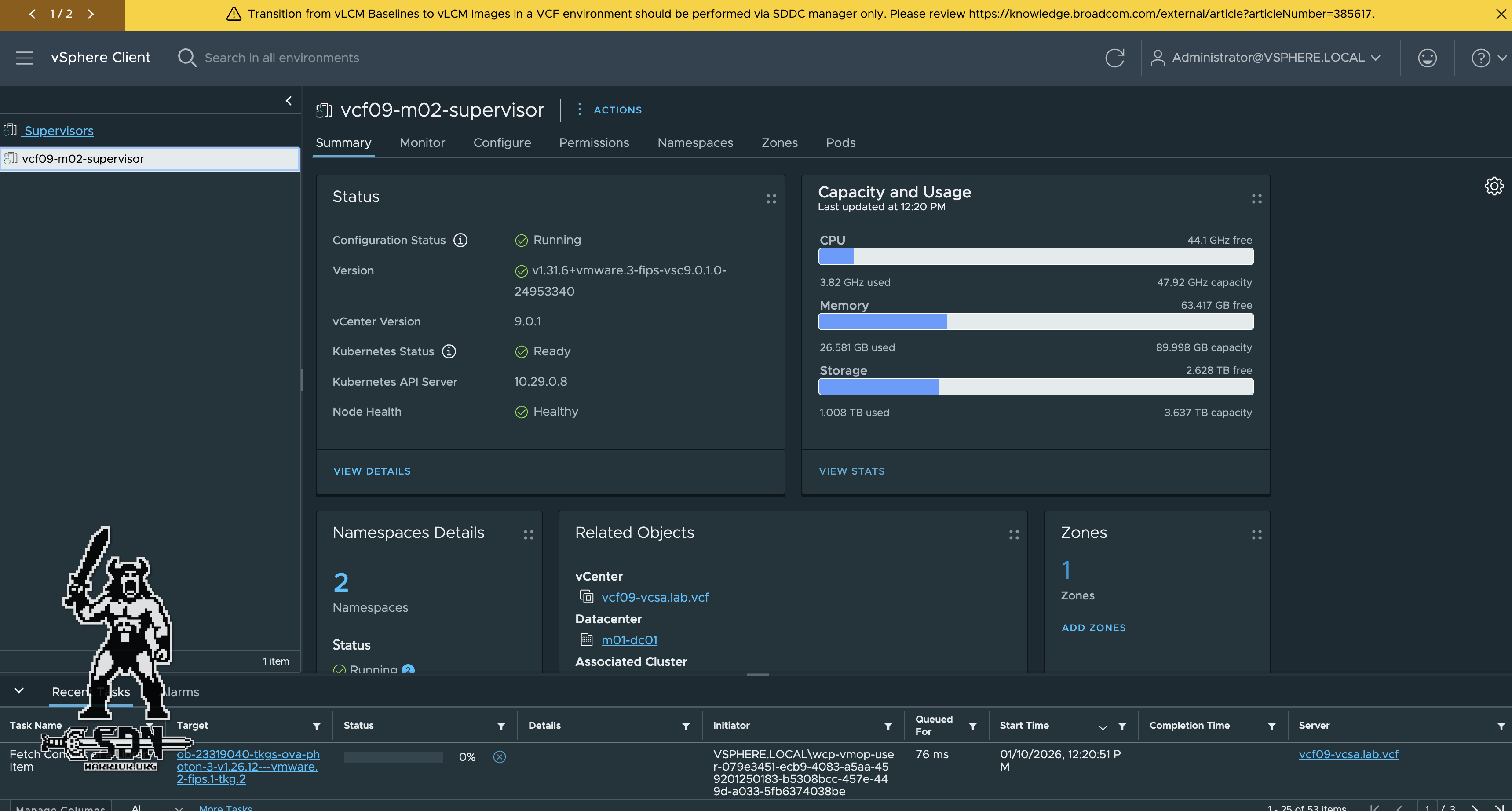

VKS Supervisor

The VKS setup takes quite a bit of time. I think it took me at least 1 to 2 coffees, so a good 20 minutes. Yes, I should probably reconsider my coffee consumption. And while we’re on the subject of consumption (what a transition): the LCI, or Local Consumption Interface, still needs to be installed.

The LCI can be found in the Broadcom Support Portal under the Free Software category. It is a simple YAML file. If you don’t want to search for it or deal with the Broadcom portal, you can copy version 9.0.2 (the current version at the time of publication) here.

apiVersion: data.packaging.carvel.dev/v1alpha1
kind: Package
metadata:
  name: cci-ns.vmware.com.9.0.2+f943fb89
spec:
  refName: cci-ns.vmware.com
  version: 9.0.2+f943fb89
  template:
    spec:
      fetch:
      - imgpkgBundle:
          image: projects.packages.broadcom.com/vsphere/iaas/lci-service/9.0.2/lci-service:9.0.2-f943fb89
      template:
      - ytt:
          paths:
          - config/
          ignoreUnknownComments: true
      - kbld:
          paths:
          - '-'
          - .imgpkg/images.yml
      deploy:
      - kapp: {}
  valuesSchema:
    openAPIv3:
      type: object
      additionalProperties: false
      description: OpenAPIv3 Schema for Consumption Interface
      properties:
        namespace:
          type: string
          description: Namespace of the component
          default: cci-svc
        podVMSupported:
          type: boolean
          description: This field indicates whether PodVMs are supported on the environment
          default: false
        virtualIP:
          type: string
          description: IP address of the Kubernetes LoadBalancer type service fronting the apiservers.
          default: ""
---
apiVersion: data.packaging.carvel.dev/v1alpha1
kind: PackageMetadata
metadata:
  name: cci-ns.vmware.com
spec:
  displayName: Local Consumption Interface
  shortDescription: Provides the Local Consumption Interface for Namespaces within vSphere Client.
  longDescription: Provides the Local Consumption Interface for Namespaces within vSphere Client.
  providerName: VMware

The LCI in VKS provides vSphere resources (VM Service) locally via the Kubernetes Supervisor. It makes virtual machines “consumable” for Kubernetes, as if they were native K8s objects. Automation uses the LCI for the deployment of VMs.

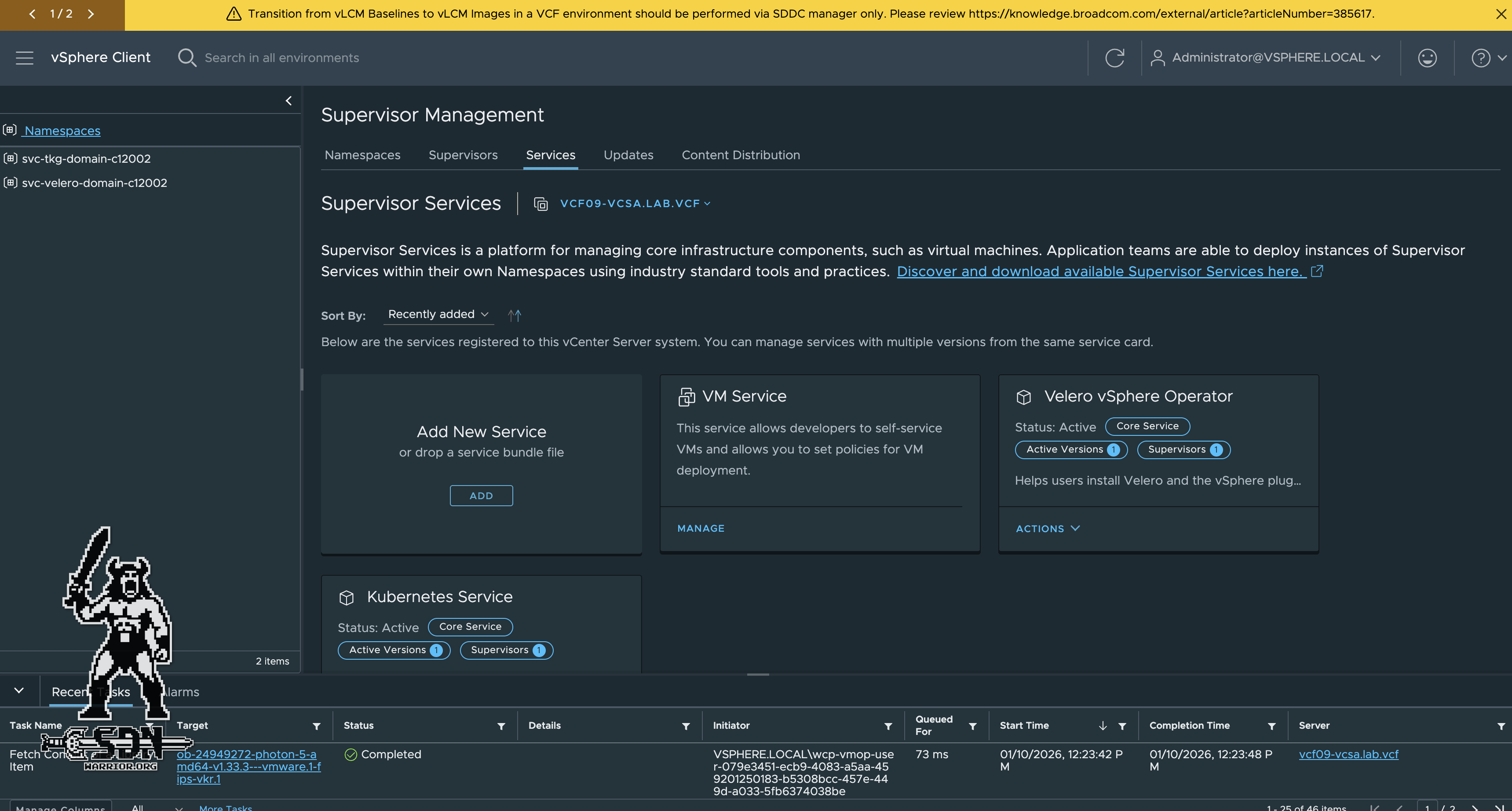

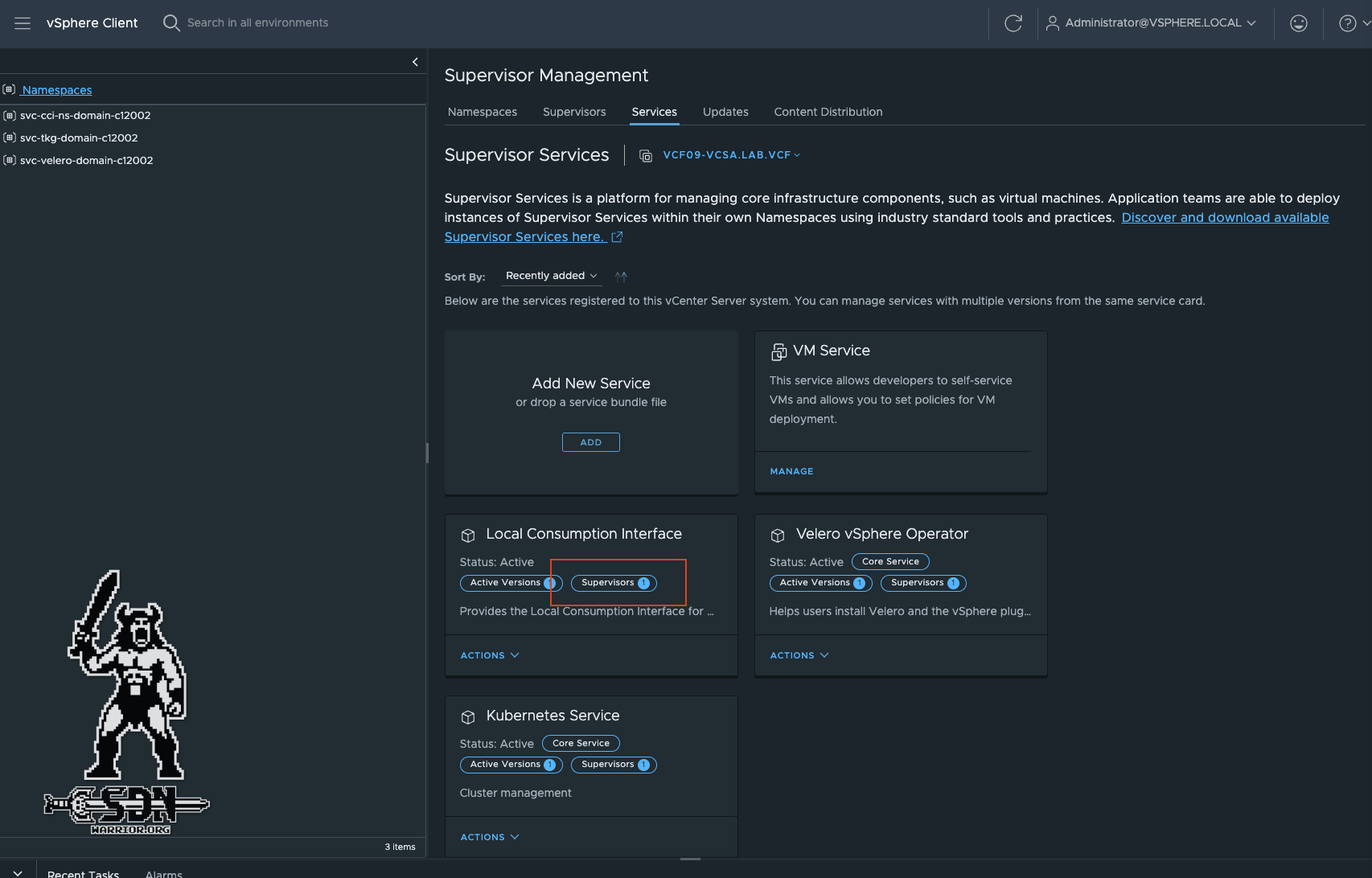

To install the LCI, it must first be created as a supervisor service. To do this, go to the vCenter burger menu and select Supervisor Management -> Namespaces -> Services -> Add.

LCI

After successful registration, another tile should now appear under Services, and we can roll out the service via Local Consumption Interface -> Actions -> Manage Service. The Supervisor Cluster must be selected, and then the dialog must be confirmed with Next until the end. No additional information is required. If everything has worked, the service is active on the Supervisor. This can be checked via Supervisor Management.

LCI Status

Troubleshooting VKS

Unfortunately, you cannot simply log in to the supervisor. Some of you may have noticed that we never set a password for it during deployment. To access the supervisor’s root account, log in to vCenter via SSH, switch to the shell, and run a Python script that prints the supervisor’s password.

ssh root@vcf09-vcsa.lab.vcf
shell
/usr/lib/vmware-wcp/decryptK8Pwd.py

The output should look something like this:

root@vcf09-vcsa [ ~ ]# /usr/lib/vmware-wcp/decryptK8Pwd.py
Read key from file
Connected to PSQL
Cluster: domain-c12002:8f68f1b2-a1ac-4fce-a9bf-26c7492b2fcb
IP: 10.29.0.19
PWD: xxxxxxxxxxxxxxxxx
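If you want to reuse these values in a script, the output is easy to parse. The following is only a sketch based on the output format shown above; the function name is my own, and with more than one supervisor in the output the later entries would overwrite the earlier ones.

```python
def parse_supervisor_credentials(output: str) -> dict[str, str]:
    """Extract the Cluster, IP and PWD fields from the decryptK8Pwd.py output."""
    creds: dict[str, str] = {}
    for line in output.splitlines():
        # Split at the first colon only; the cluster ID itself contains a colon.
        key, sep, value = line.partition(":")
        if sep and key.strip() in {"Cluster", "IP", "PWD"}:
            creds[key.strip()] = value.strip()
    return creds


sample = """Read key from file
Connected to PSQL
Cluster: domain-c12002:8f68f1b2-a1ac-4fce-a9bf-26c7492b2fcb
IP: 10.29.0.19
PWD: xxxxxxxxxxxxxxxxx
"""

creds = parse_supervisor_credentials(sample)
print(creds["IP"])
```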

Since the supervisor is in a public VPC network, we should now be able to access the supervisor via SSH. This can be done from the vCenter or directly.

You can display the status of the pods using kubectl get pods -A. They should all be in Running or Completed status.

root@42022d56cee15501c2f40bcedfcb23b5 [ ~ ]# kubectl get pods -A
NAMESPACE                          NAME                                                          READY   STATUS    RESTARTS         AGE
kube-state-metrics-domain-c12002   kube-state-metrics-5df7f5548d-jr68x                           2/2     Running   2 (4h20m ago)    30h
kube-system                        cns-storage-quota-extension-8fd45b984-2p4m6                   1/1     Running   1 (4h20m ago)    30h
kube-system                        coredns-86688d758-2j2gr                                       1/1     Running   9 (4h20m ago)    30h
kube-system                        docker-registry-42022d56cee15501c2f40bcedfcb23b5              1/1     Running   1 (4h20m ago)    30h
kube-system                        etcd-42022d56cee15501c2f40bcedfcb23b5                         1/1     Running   1 (4h20m ago)    30h
kube-system                        kube-apiserver-42022d56cee15501c2f40bcedfcb23b5               1/1     Running   3 (4h19m ago)    30h
kube-system                        kube-controller-manager-42022d56cee15501c2f40bcedfcb23b5      1/1     Running   3 (4h19m ago)    30h
kube-system                        kube-proxy-rdk2p                                              1/1     Running   1 (4h20m ago)    30h
kube-system                        kube-scheduler-42022d56cee15501c2f40bcedfcb23b5               2/2     Running   5 (4h19m ago)    30h
kube-system                        kubectl-plugin-vsphere-42022d56cee15501c2f40bcedfcb23b5       1/1     Running   5 (4h19m ago)    30h
kube-system                        snapshot-controller-576bd689df-ff7jg                          1/1     Running   1 (4h20m ago)    30h
kube-system                        storage-quota-webhook-6b8c9c45b9-c2pwx                        1/1     Running   5 (4h19m ago)    30h
kube-system                        supervisor-authz-service-controller-manager-9c666c964-4g4t6   1/1     Running   1 (4h20m ago)    30h
kube-system                        wcp-authproxy-42022d56cee15501c2f40bcedfcb23b5                1/1     Running   2 (4h19m ago)    30h
kube-system                        wcp-fip-42022d56cee15501c2f40bcedfcb23b5                      1/1     Running   1 (4h20m ago)    30h
...
vmware-system-zoneop               zone-operator-58bc66b7bb-wvpjf                                1/1     Running   1 (4h20m ago)    30h
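With this many pods, scanning the list by eye gets tedious. As a rough sketch, you can export the list with `kubectl get pods -A -o json` and let a few lines of Python flag everything that looks unhealthy. The sample data below is hand-written for illustration, and `example-broken-pod` is a made-up name. I treat a pod as healthy if its phase is Succeeded (shown as Completed in the table) or if it is Running with all containers ready; that is my own simplification, not an official health definition.

```python
def unhealthy_pods(pod_list: dict) -> list[str]:
    """Return 'namespace/name (phase)' for every pod that does not look healthy."""
    problems = []
    for pod in pod_list.get("items", []):
        meta = pod["metadata"]
        status = pod.get("status", {})
        phase = status.get("phase")
        containers = status.get("containerStatuses", [])
        # Running alone is not enough: a crash-looping pod can still report Running.
        healthy = phase == "Succeeded" or (
            phase == "Running" and all(c.get("ready") for c in containers)
        )
        if not healthy:
            problems.append(f'{meta["namespace"]}/{meta["name"]} ({phase})')
    return problems


# Hand-written sample in the shape of `kubectl get pods -A -o json`.
sample = {
    "items": [
        {
            "metadata": {"namespace": "kube-system", "name": "coredns-86688d758-2j2gr"},
            "status": {"phase": "Running", "containerStatuses": [{"ready": True}]},
        },
        {
            "metadata": {"namespace": "kube-system", "name": "example-broken-pod"},
            "status": {"phase": "Running", "containerStatuses": [{"ready": False}]},
        },
    ]
}

print(unhealthy_pods(sample))
```

To use it against a real supervisor, save the JSON output to a file and load it with `json.load` before passing it to the function.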

With kubectl get nodes, you can see whether the control plane and the agents on the ESX servers are ready.

root@42022d56cee15501c2f40bcedfcb23b5 [ ~ ]# kubectl get nodes
NAME                               STATUS   ROLES                  AGE   VERSION
42022d56cee15501c2f40bcedfcb23b5   Ready    control-plane,master   30h   v1.31.6+vmware.3-fips
vcf09-m02-esx01.lab.vcf            Ready    agent                  30h   v1.31.6-sph-vmware-clustered-infravisor-trunk-85-g71ed1bf
vcf09-m02-esx02.lab.vcf            Ready    agent                  30h   v1.31.6-sph-vmware-clustered-infravisor-trunk-85-g71ed1bf

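To get the logs of a single pod, you can use the following command. Note that -p (short for --previous) shows the logs of the previous container instance, which is handy after a restart; leave it out to see the logs of the currently running container: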

kubectl logs -n namespace -p POD/name

Output from the CoreDNS pod looks like this, for example:

root@42022d56cee15501c2f40bcedfcb23b5 [ ~ ]# kubectl logs -n kube-system -p POD/coredns-86688d758-2j2gr
.:53 on 172.26.0.3
[INFO] plugin/reload: Running configuration SHA512 = 0e63e439c10056457d08907176fbd8310056b76cf036ff455749731bc2e3eac256b51dfe7d2da9cddace06530a8353dbf6f9814cf475b3267f0f664f6d6b4241
CoreDNS-1.11.3
linux/amd64, go1.21.8 X:boringcrypto, v1.11.3+vmware.8-fips
[INFO] 172.26.0.3:39797 - 26176 "HINFO IN 234899636062536918.376157124480636184. udp 55 false 512" NXDOMAIN qr,rd,ra 130 0.013725101s
[INFO] 172.26.0.3:55311 - 31871 "AAAA IN 42022d56cee15501c2f40bcedfcb23b5.vmware-system-monitoring.svc.cluster.local. udp 93 false 512" NXDOMAIN qr,aa,rd 186 0.000161466s
[INFO] 172.26.0.3:49051 - 45168 "A IN 42022d56cee15501c2f40bcedfcb23b5.vmware-system-monitoring.svc.cluster.local. udp 93 false 512" NXDOMAIN qr,aa,rd 186 0.0000998s
[INFO] 172.26.0.3:37736 - 62871 "AAAA IN 42022d56cee15501c2f40bcedfcb23b5.svc.cluster.local. udp 68 false 512" NXDOMAIN qr,aa,rd 161 0.000090623s
[INFO] 172.26.0.3:59085 - 42593 "A IN 42022d56cee15501c2f40bcedfcb23b5.svc.cluster.local. udp 68 false 512" NXDOMAIN qr,aa,rd 161 0.000124409s
[INFO] 172.26.0.3:47017 - 52562 "AAAA IN 42022d56cee15501c2f40bcedfcb23b5.cluster.local. udp 64 false 512" NXDOMAIN qr,aa,rd 157 0.00005269s
[INFO] 172.26.0.3:56547 - 58782 "A IN 42022d56cee15501c2f40bcedfcb23b5.cluster.local. udp 64 false 512" NXDOMAIN qr,aa,rd 157 0.000023421s
...

If none of this helps, then you have to sacrifice your firstborn to the Kubernetes gods. Experience in the lab has shown that either the network is set up incorrectly or the control plane is too small.

Finally, we have made all the preparations to configure our automation.

Setup Automation - my first tenant

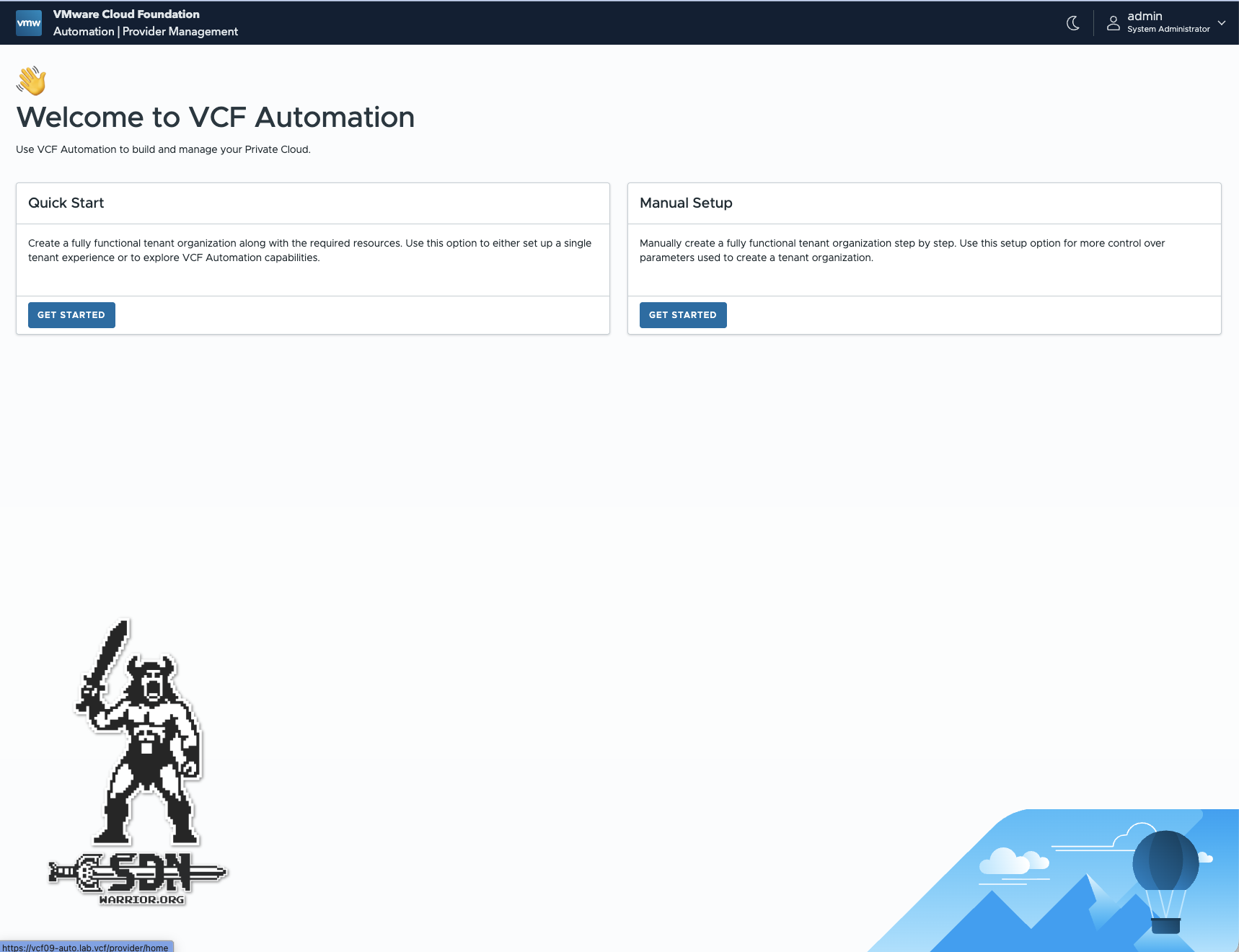

After redeploying my VKS three times due to some typos, I can now log in to the automation GUI. The organization name to log in to Provider Management is system. The username is admin and the password is the one you set during deployment. If you want, you can replace the TLS certificate with your own via VCF Operations. However, this is not a must.

Hello Automation

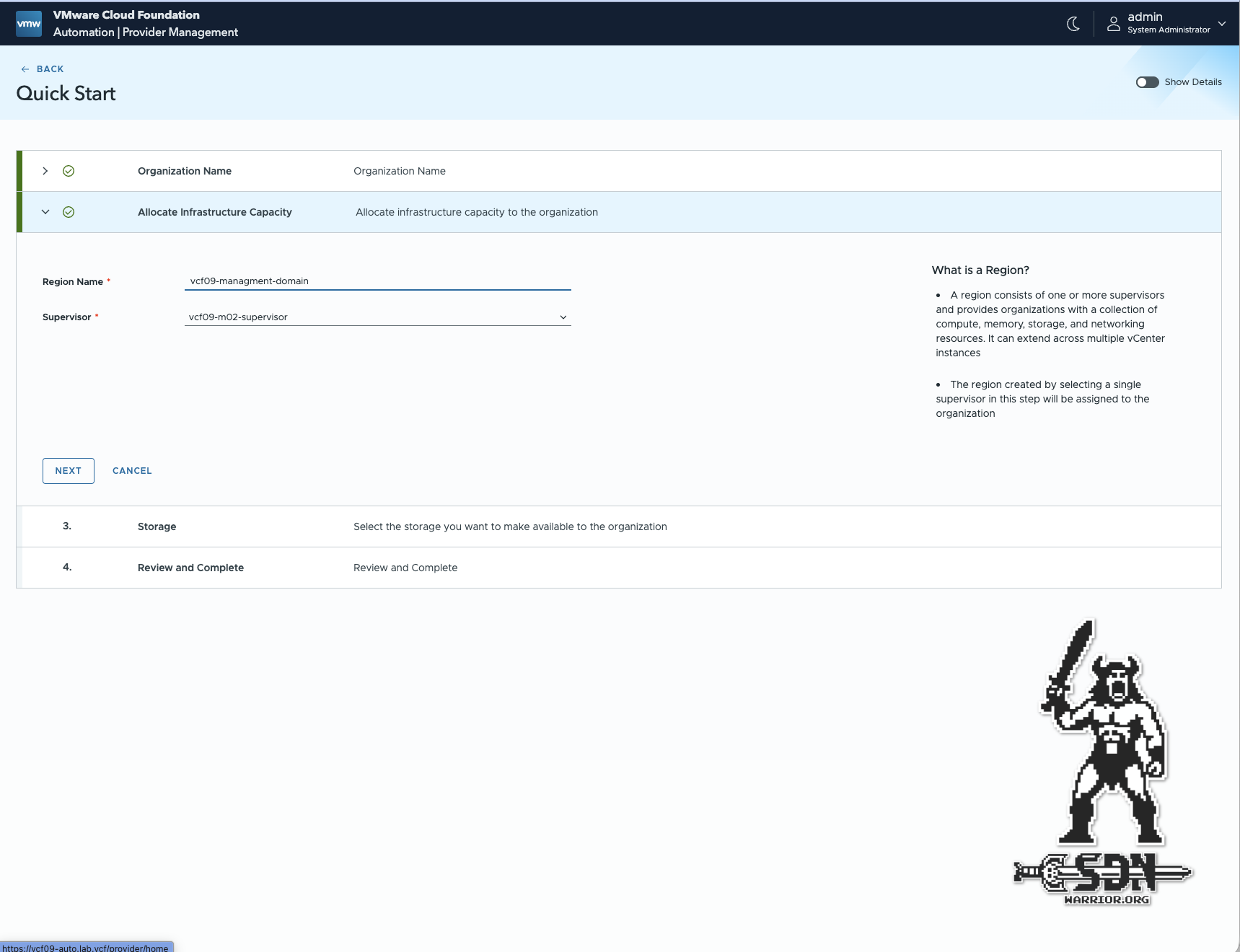

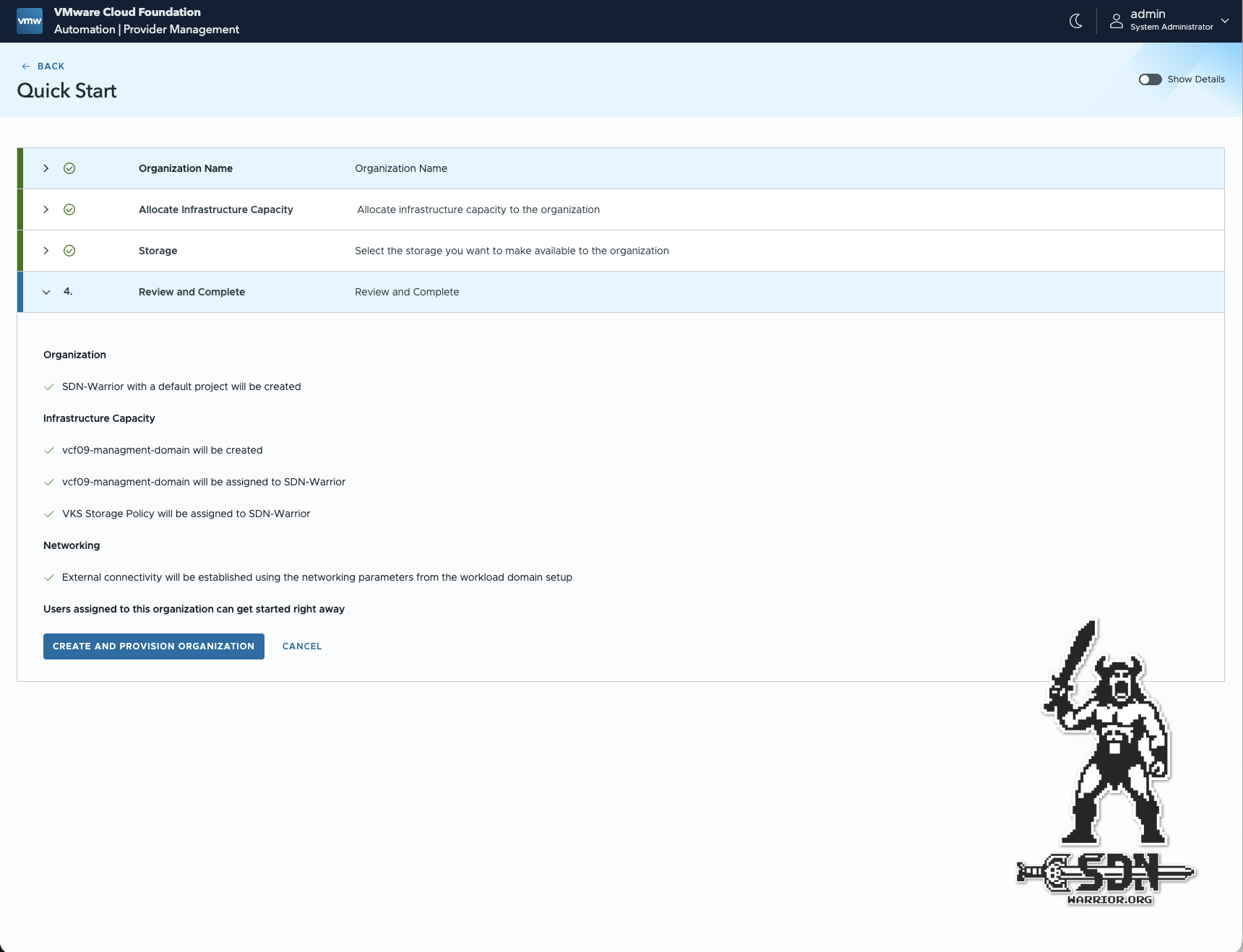

If the Automation start screen looks different after logging in to the provider, the VKS cluster was probably not recognized. An inventory sync can help here. I use Quick Start for setup because it creates a complete organization with useful settings in a very simple way. An organization is the top level in automation and could also be described as a customer or tenant. An organization is implemented as an NSX project, which ensures separation at the network level. Resources, cost control, and policies always apply across the entire organization. But more on that later.

Using the Quick Start Wizard, the first thing I have to do is choose the name of my organization. In my setup, I name the organization SDN Warrior. Next, I have to create a region and assign at least one VKS Supervisor cluster. A region consists of one or more supervisors and provides organizations with a collection of compute, memory, storage, and networking resources. It can extend across multiple vCenter instances. An organization can contain one or more regions.

Automation Region

Next, a storage policy must be selected. Here, I select my VKS policy. This can be adjusted later under Region Quota. That was it, basically. The rest is created completely automatically.

Automation Summary

Pretty simple. But we’re not quite done yet, and we should first go through the settings that were created automatically.

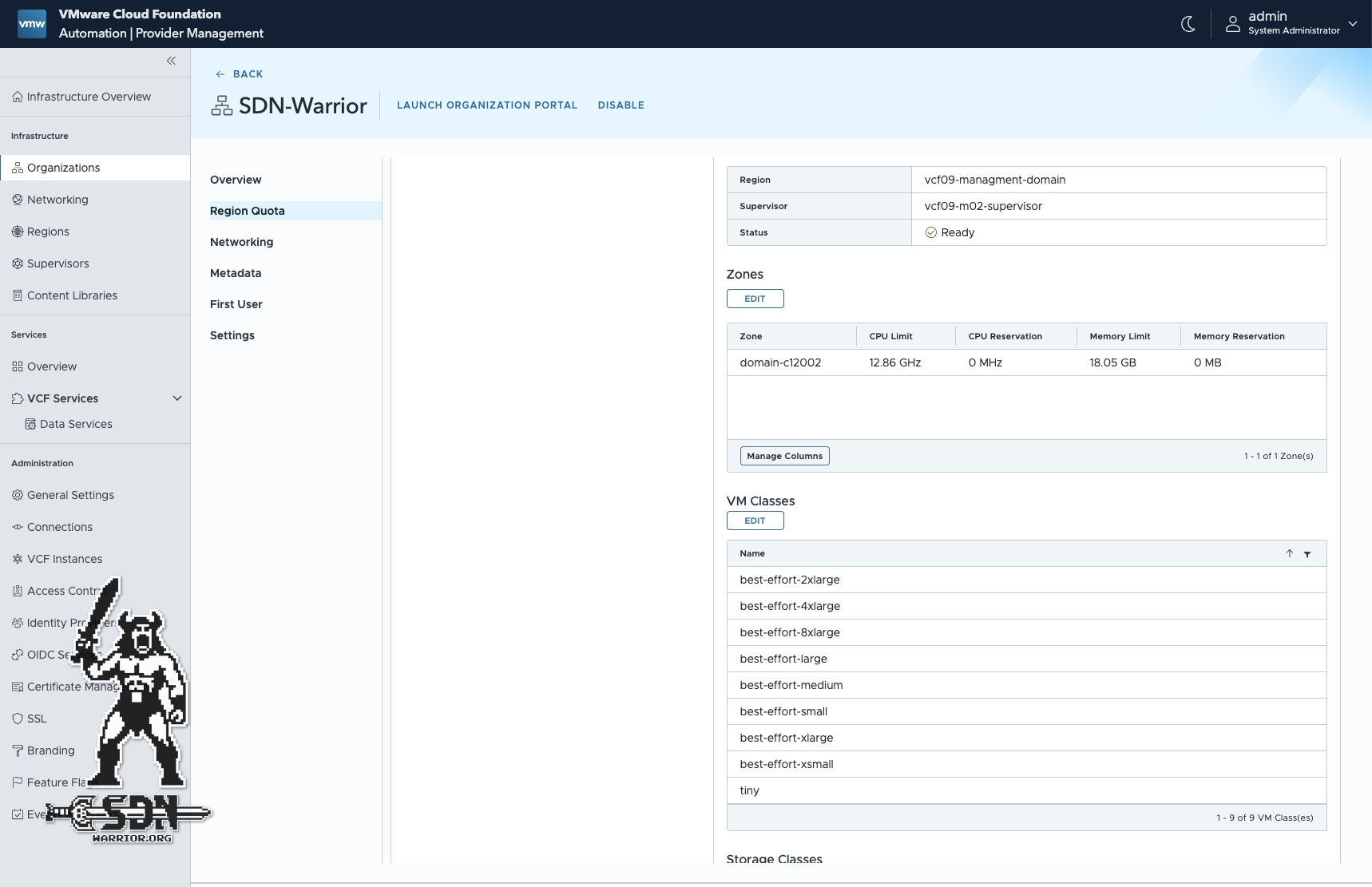

If we now click on Organization in the Automation menu, we get a good overview of what has been set up, and it quickly becomes apparent that Quick Start has set up significantly more than we had to configure. A region quota has been configured, which we can use to define the permitted VM classes. By default, all default classes are permitted. These VM classes are our T-shirt sizes. The existing VM classes can be customized in vCenter under Supervisor Management -> Services. It takes some time before newly created VM classes can be selected in the quota.

Quota with custom VM Class

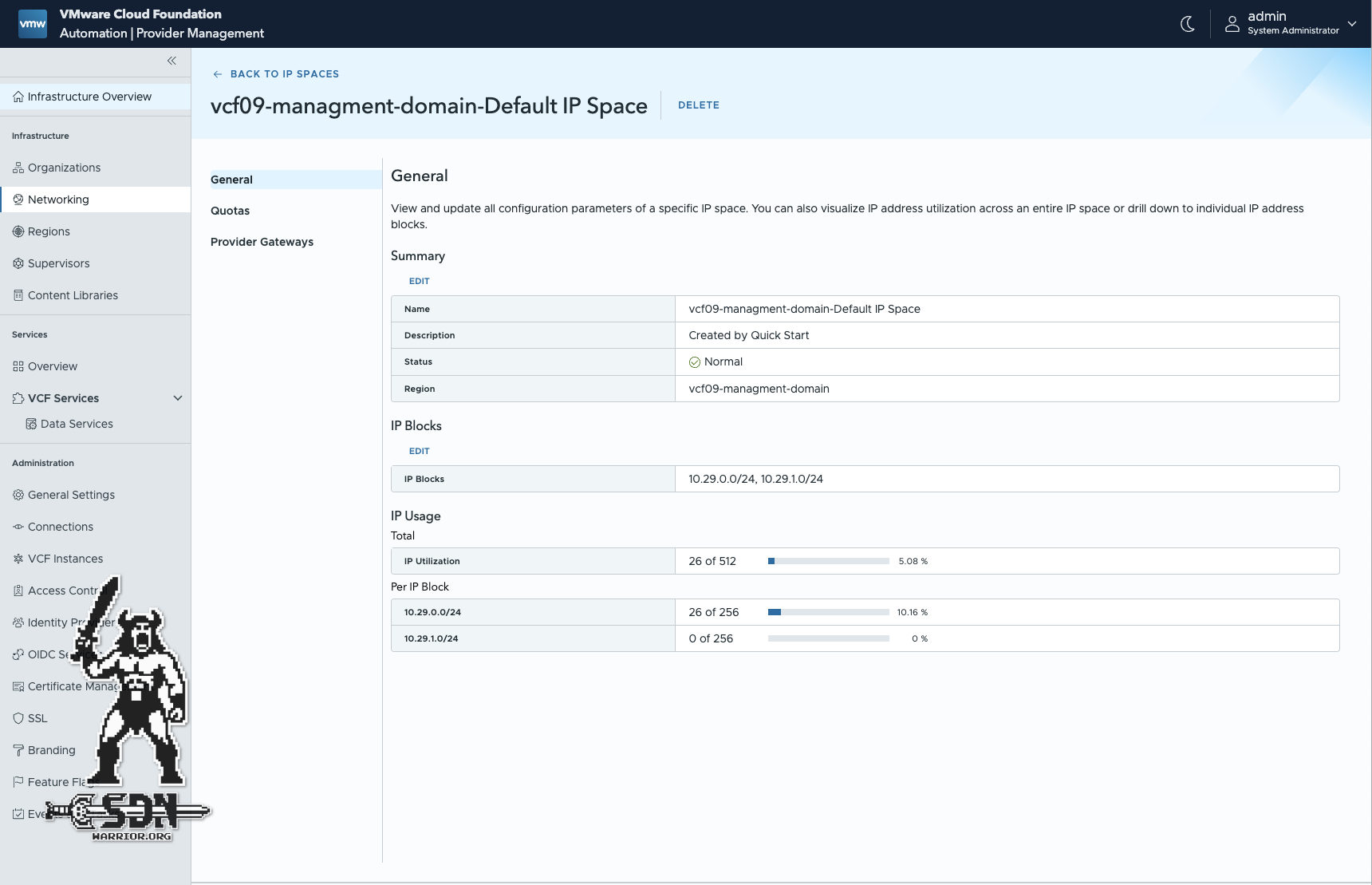

Under Networking in the organization, you can see that the VPC default settings have been used. If there are multiple edge clusters, a specific edge cluster can be selected. In the VPC Connectivity Profile, you can override the ingress and egress QoS settings. By default, these are unlimited. IP ranges cannot be configured in the Quota, but the public VPC network is used by default. However, these IP ranges are only used for external communication. IP ranges within the organization are managed by the organization itself and not by the provider.

By far the most important setting for our lab is the first user. Currently, no user is stored here. We should change that now. Only one user can be created; for everything else, a single sign-on solution is required, but that is worth its own blog article. I give my first user all rights. This should be the break-glass account. Normally, you will work with users from an identity source. But who cares about security in the lab?

After creating a user for the Organization Portal, we take a quick look at the network settings. Under Networking -> General -> IP Blocks, we can customize or expand the public IP block. IP blocks represent IPs used both inside and outside this local data center, north and south of the provider gateway. IPs within this scope are used for configuring services and networks.

Network settings

Under Regions, you can customize the storage class or add more. Going through all the settings in detail would go beyond the scope of this blog, and we want to get results first. But I have at least highlighted the most important settings.

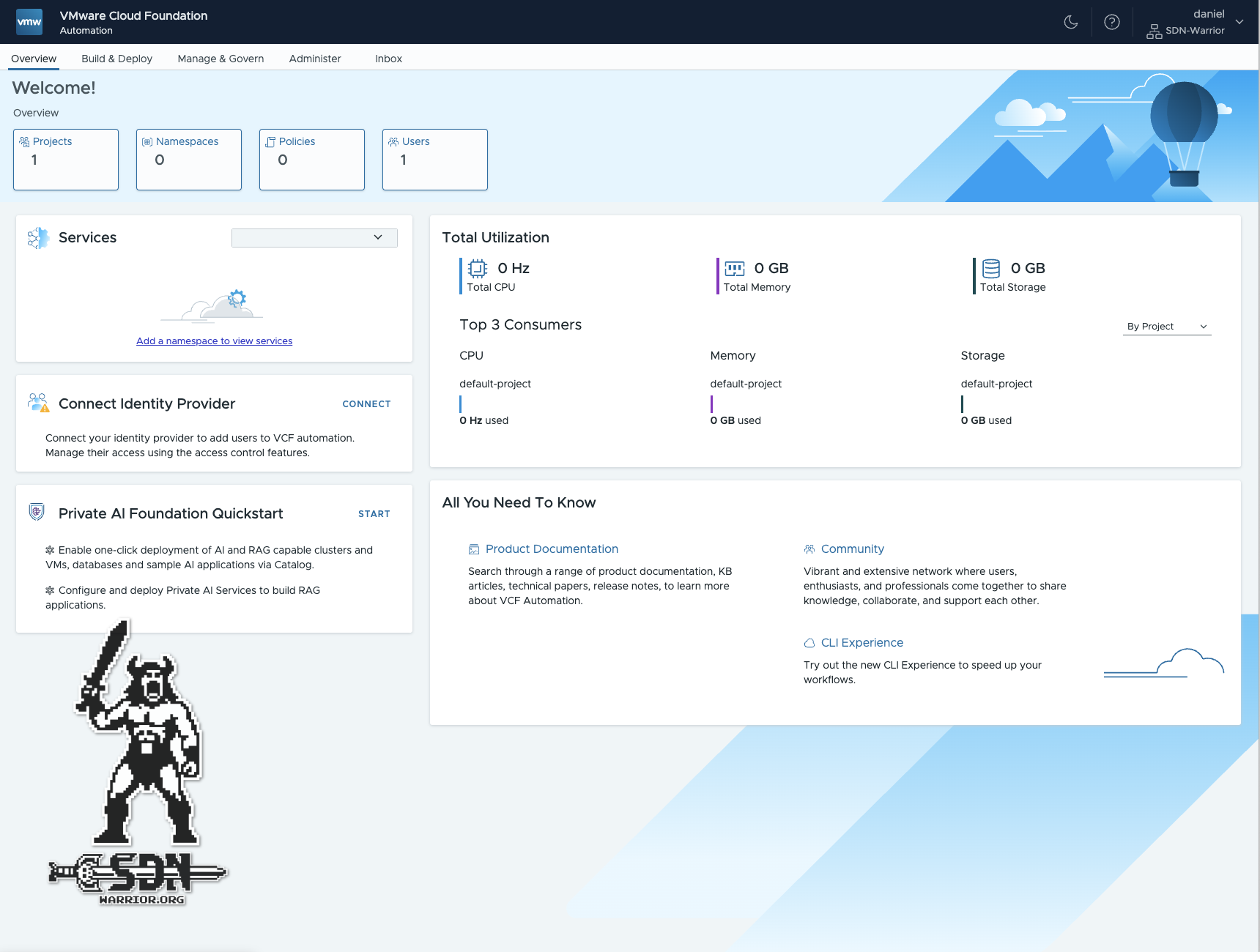

Organization Portal

Everything else is done in the Organization Portal. To do this, go to Organizations, select the organization, and click Launch Organization Portal. Alternatively, we log out of the provider portal, enter the name of our organization as the organization, and log in with the user we created earlier.

Organization Portal

Project

We see that a project has already been created by default. This is done automatically when creating an organization. We edit this by clicking on Projects and then on the project name. A brief word about what a project is. A project connects users with the resources they are entitled to and the restrictions they are subject to. A project typically defines an application development team, its users, how much and what type of infrastructure they can use, and which catalog items they can access.

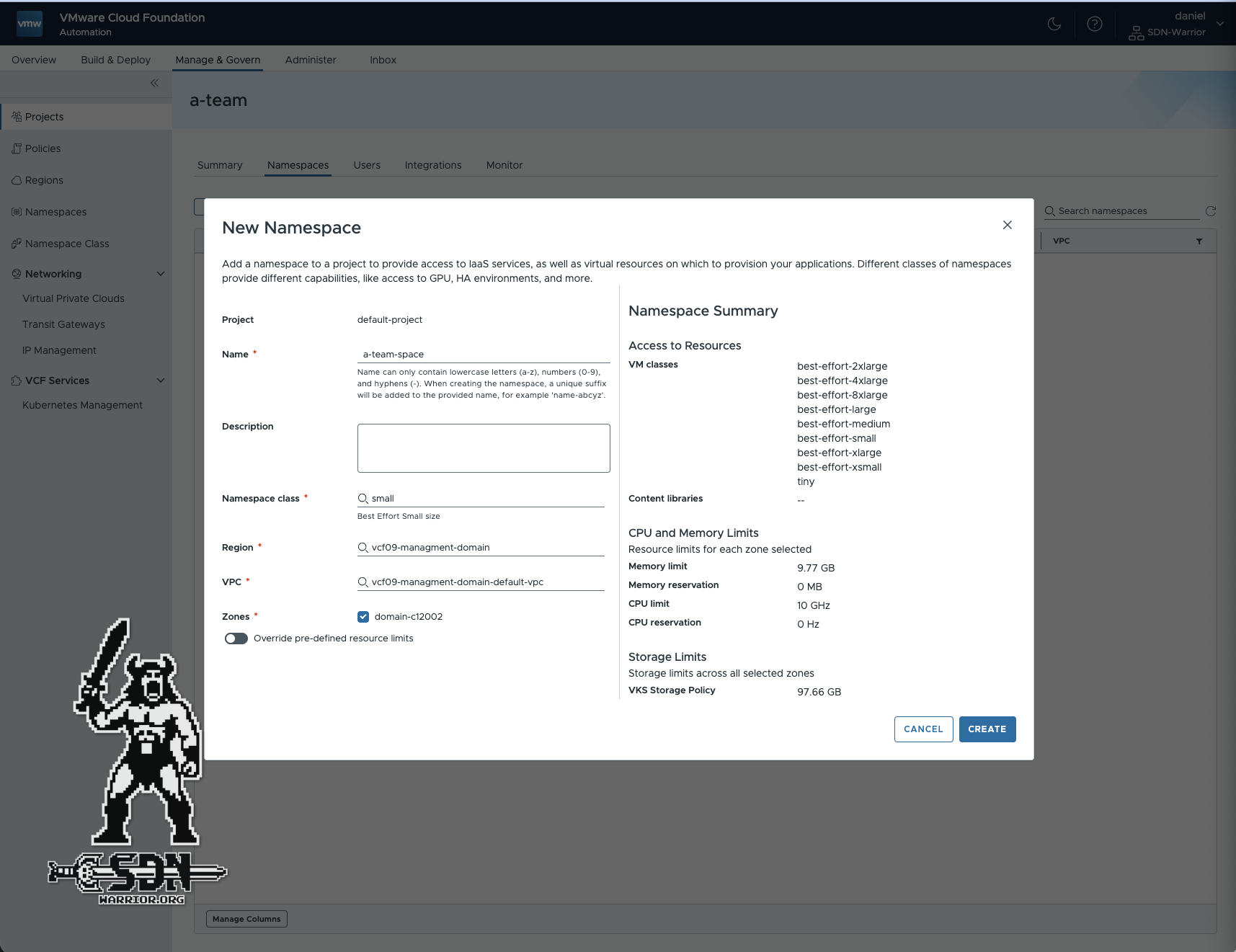

I am renaming my project to a-team. All projects must be written in lowercase. I save the project before creating the vSphere namespace. Then I edit the project again and create a namespace. This refers to a vSphere namespace from VKS. It consists of one of the predefined namespace classes and can be customized under Manage & Govern if necessary. In my case, small (best effort) is sufficient, which means that my project will not be allocated more than 10 GB of RAM and 10 GHz of CPU without reservations. Namespace classes can be used to manage resources effectively. In addition, the region, VPC, and zone must be specified. This is not difficult in my lab, as there is only one of each.

Project

DHCP and DNS

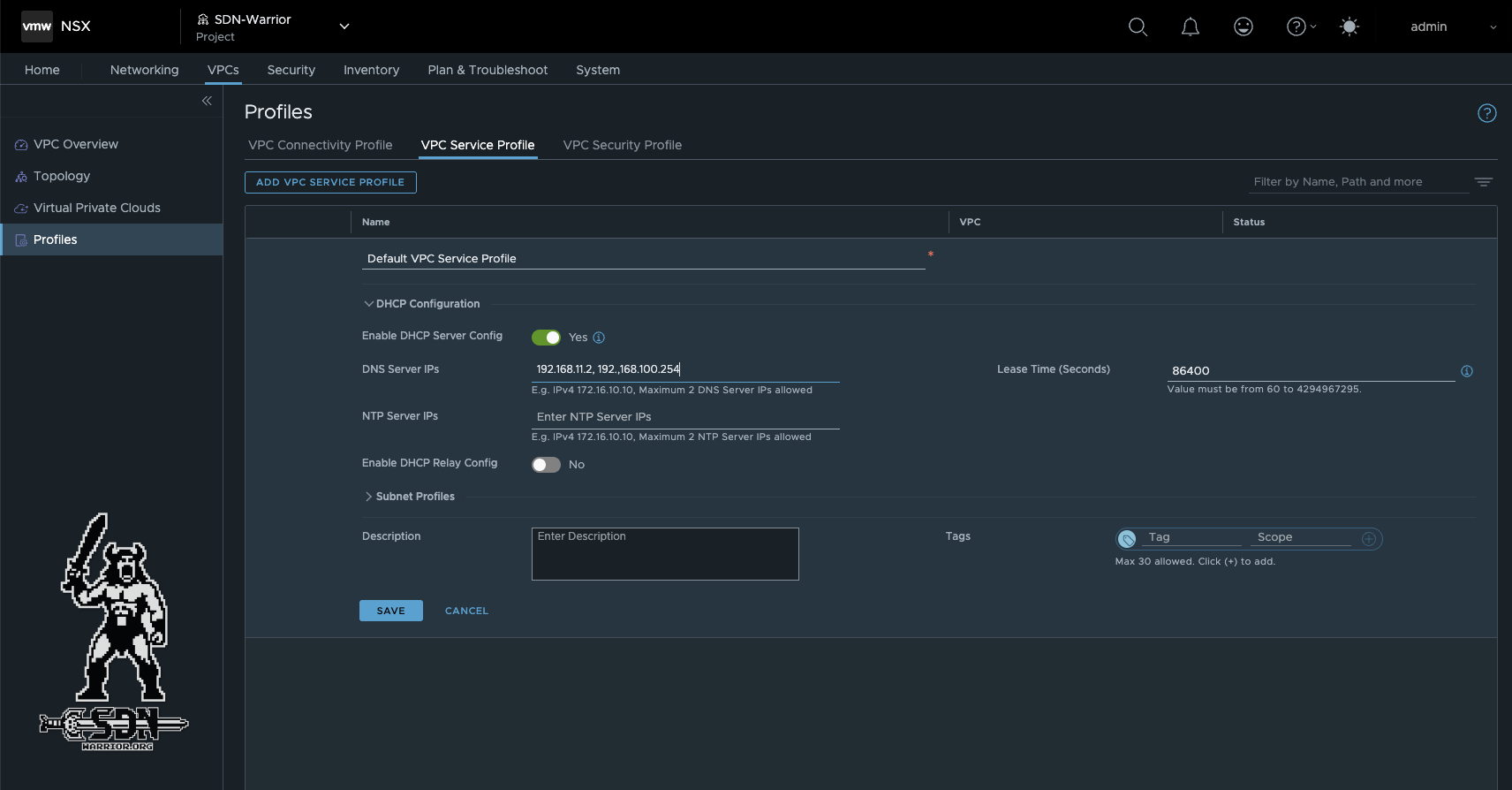

Now we have almost everything we need to deploy a VM. However, I want to use DHCP in my lab, so I need to make a small adjustment in NSX. By default, DHCP can be used with automation, but the VPC service profile does not have a DNS server stored. This can be changed quickly. To do this, I log in to NSX and switch to the SDN-Warrior project (click on Default in the upper left corner of NSX to switch projects). The profile can be found under VPCs -> Profiles -> VPC Service Profile -> Default VPC Service Profile. Here, I add my DNS server to the profile.

NSX VPC Profile

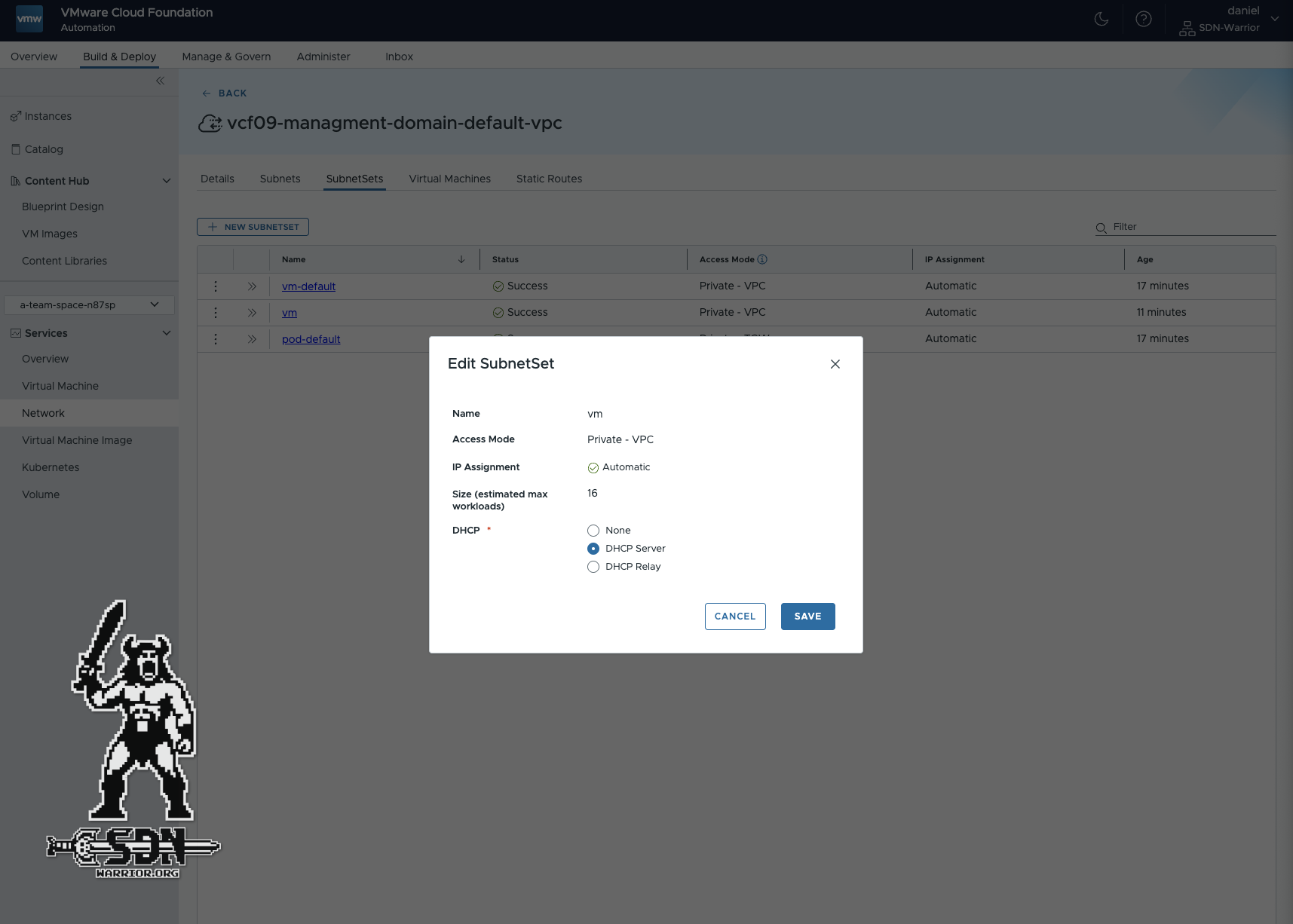

Once that has been changed, I create a new subnet set in the Organization Portal. Under Build & Deploy -> Services -> Network -> vcf09-management-domain-default-vpc -> SubnetSets, I create a new subnet set with the name vm and set the DHCP server. The subnet set is, so to speak, my blueprint for how I use my network. It determines the type of network the VM will be in (in this case, a private network) and how an IP address will be assigned.

Subnet Set

Content library

We also need a content library. I create this via Build & Deploy -> Content Libraries. The region must be specified and the storage policy selected. I can then upload my OVA template from Alpine Linux to the newly created content library. It is important to note that the space required is deducted from the configured quota created in the provider setup. You don’t have to use Alpine Linux here; any other VM template you have created previously will also work. I use Alpine because of its small size and because it is super easy to configure.

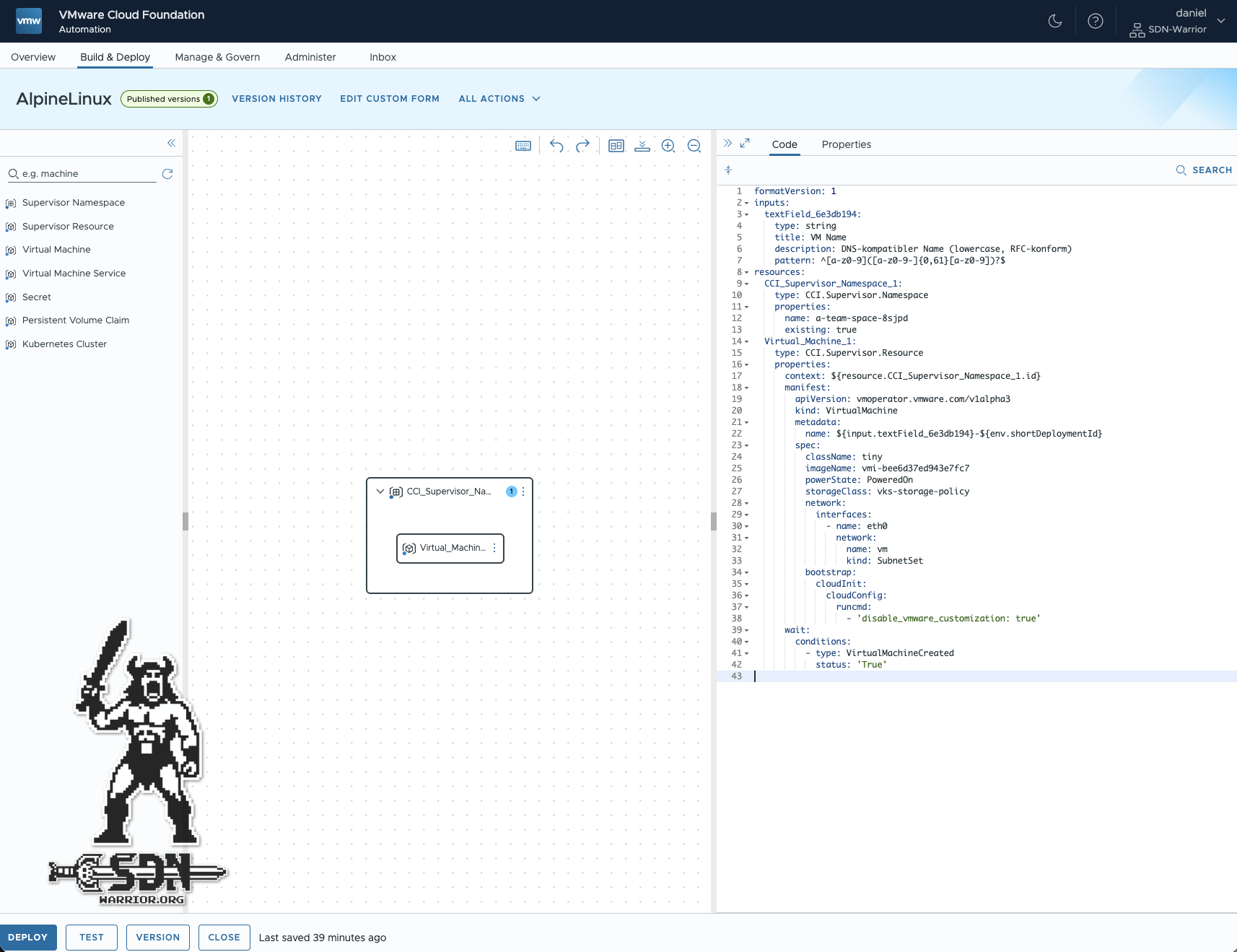

Blueprint Design

Now that we have a basic setup, a customized network, and an image in our content library, we can write the blueprint. A blueprint in VCF Automation is a standardized description of how a VM, service, or application should be automatically provisioned. To do this, we click on Build & Deploy -> Blueprint Design -> New from Blank Canvas. The blueprint needs a name, in my case AlpineLinux, and it must be assigned to a project.

The blueprint designer appears, and the developer now has to familiarize themselves with YAML. To deploy a simple VM, the namespace must first be specified. To do this, we need a resource of type CCI.Supervisor.Namespace. Then another resource is needed for the actual VM. This is of type CCI.Supervisor.Resource. You can drag and drop the two resources onto the canvas or type the YAML directly. I have a relatively simple YAML here that creates an Alpine Linux VM from a VM template. However, it can also be used with other VM templates. There is also a simple input form so that you can specify the VM name. The name must be a valid DNS label (RFC 1123: lowercase letters, digits, and hyphens, starting and ending with a letter or digit, at most 63 characters), and a simple regex enforces this. The blueprint needs to be slightly modified before you can use it.

formatVersion: 1
inputs:
  textField_6e3db194:
    type: string
    title: VM Name
    description: DNS-compatible name (lowercase, RFC-compliant)
    pattern: ^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$
resources:
  CCI_Supervisor_Namespace_1:
    type: CCI.Supervisor.Namespace
    properties:
      name: a-team-space-8sjpd
      existing: true
  Virtual_Machine_1:
    type: CCI.Supervisor.Resource
    properties:
      context: ${resource.CCI_Supervisor_Namespace_1.id}
      manifest:
        apiVersion: vmoperator.vmware.com/v1alpha3
        kind: VirtualMachine
        metadata:
          name: ${input.textField_6e3db194}-${env.shortDeploymentId}
        spec:
          className: tiny
          imageName: vmi-bee6d37ed943e7fc7
          powerState: PoweredOn
          storageClass: vks-storage-policy
          network:
            interfaces:
              - name: eth0
                network:
                  name: vm
                  kind: SubnetSet
          bootstrap:
            cloudInit:
              cloudConfig:
                runcmd:
                  - 'disable_vmware_customization: true'
      wait:
        conditions:
          - type: VirtualMachineCreated
            status: 'True'
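The pattern from the inputs section can be tried out locally before you put it into a form. This is just a quick sketch with Python's `re` module; the function name and the sample names are my own. Keep in mind that the blueprint appends a deployment ID to the name, so the final object name is longer than what the pattern checks.

```python
import re

# Same pattern as in the blueprint input: 1 to 63 characters, lowercase letters,
# digits and hyphens, starting and ending with a letter or digit.
VM_NAME_PATTERN = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$")


def is_valid_vm_name(name: str) -> bool:
    """Return True if the name satisfies the blueprint's input pattern."""
    return VM_NAME_PATTERN.fullmatch(name) is not None


for candidate in ["alpine-01", "Alpine", "-web", "web-", "a" * 63, "a" * 64]:
    print(f"{candidate[:20]!r:24} {is_valid_vm_name(candidate)}")
```

Uppercase letters, leading or trailing hyphens, and anything longer than 63 characters are rejected.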

To make it easier, I’ll split the YAML into sections and describe where changes need to be made. The vSphere namespace must be specified here. This can be found under Build & Deploy -> Services -> Overview. Alternatively, it can also be found in vCenter or under Manage & Govern -> Namespaces.

resources:
  CCI_Supervisor_Namespace_1:
    type: CCI.Supervisor.Namespace
    properties:
      name: a-team-space-8sjpd
      existing: true

- The VM class must be adjusted in the VM block, as I am using a custom class called tiny. The existing VM classes can be found under Build & Deploy -> Services -> Virtual Machine -> VM Classes. One of the standard classes, for example, is best-effort-xsmall.

- The image name must be replaced with the image to be used. This can be found under Build & Deploy -> Content Hub -> VM Images. The image identifier must be used.

- The storage class must be adapted to the existing one. This can be found under Build & Deploy -> Services -> Volume -> Storage Classes.

- Finally, the network must be adjusted. If you delete the entire network block, the default SubnetSet will be used, on which DHCP is not active. In the previous steps, I created my own SubnetSet with the name vm.

spec:
  className: tiny
  imageName: vmi-bee6d37ed943e7fc7
  powerState: PoweredOn
  storageClass: vks-storage-policy
  network:
    interfaces:
      - name: eth0
        network:
          name: vm
          kind: SubnetSet

This part does not need to be adjusted, but I would still like to briefly explain why it is included. Without this adjustment, the VM is created and connected to the correct network, but the Alpine VM is always disconnected afterwards. This can be changed via the vCenter, but that defeats the purpose. The user who will later consume the VMs should not have access to the vCenter. I’ve had this problem with various Linux distributions, but I can’t say whether it’s a general Linux issue.

bootstrap:
  cloudInit:
    cloudConfig:
      runcmd:
        - 'disable_vmware_customization: true'

The blueprint should now look more or less like this.

Subnet Set

Using the Test button, you can validate the YAML to ensure that at least the formatting is correct. Using Deploy, you can run a deployment test. Once this has been successful, you can use Version to publish the current version in the catalog and make it accessible to users. I recorded a short test video to show how it all works in practice. To do this, I used an advanced project user with limited rights to the project.

Bonus Round - deploying a Kubernetes Cluster

Spoilers: this isn’t a perfect blueprint, but it just shows that you can do more than just deploy VMs with VCF Automation in All Apps mode.

formatVersion: 1
inputs:
  textField_6e3db193:
    type: string
    title: clustername
    description: DNS-compatible name (lowercase, RFC-compliant)
    pattern: ^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$
resources:
  CCI_Supervisor_Namespace_1:
    type: CCI.Supervisor.Namespace
    properties:
      name: a-team-space-8sjpd
      existing: true
  Kubernetes_Cluster_1:
    type: CCI.Supervisor.Resource
    properties:
      context: ${resource.CCI_Supervisor_Namespace_1.id}
      manifest:
        apiVersion: cluster.x-k8s.io/v1beta1
        kind: Cluster
        metadata:
          name: ${input.textField_6e3db193}-${env.shortDeploymentId}
        spec:
          clusterNetwork:
            pods:
              cidrBlocks:
                - 192.168.156.0/20
            services:
              cidrBlocks:
                - 10.96.0.0/12
            serviceDomain: cluster.local
          topology:
            class: builtin-generic-v3.4.0
            classNamespace: vmware-system-vks-public
            version: v1.33.3---vmware.1-fips-vkr.1
            variables:
              - name: vmClass
                value: best-effort-small
              - name: storageClass
                value: vks-storage-policy
            controlPlane:
              replicas: 1
              metadata:
                annotations:
                  run.tanzu.vmware.com/resolve-os-image: os-name=photon, content-library=cl-7685ae58460e6c079
            workers:
              machineDeployments:
                - class: node-pool
                  name: kubernetes-cluster-seil-np-1xf6
                  replicas: 1

To deploy the cluster, only the namespace needs to be adjusted. The rest should run in every automation instance, as I only use standards here.

CCI_Supervisor_Namespace_1:
  type: CCI.Supervisor.Namespace
  properties:
    name: a-team-space-8sjpd
    existing: true
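One thing worth checking before you reuse the cluster blueprint is whether the pod and service CIDRs from the clusterNetwork block collide with networks that your workloads need to reach. Here is a rough sketch with Python's `ipaddress` module; the list of routable networks is hypothetical. Note that 192.168.156.0/20 does not sit on a /20 boundary, so I parse it with strict=False, which normalizes it to the enclosing network.

```python
import ipaddress


def normalize(cidr: str):
    # strict=False masks off host bits instead of raising ValueError.
    return ipaddress.ip_network(cidr, strict=False)


def find_overlaps(cluster_cidrs: dict[str, str], routable: list[str]) -> list[tuple[str, str]]:
    """Return (label, network) pairs where a cluster CIDR overlaps a routable network."""
    hits = []
    for label, cidr in cluster_cidrs.items():
        net = normalize(cidr)
        for other in routable:
            if net.overlaps(normalize(other)):
                hits.append((label, other))
    return hits


# Values from the blueprint's clusterNetwork block.
cluster_cidrs = {"pods": "192.168.156.0/20", "services": "10.96.0.0/12"}

# Hypothetical routable networks, for illustration only.
routable = ["10.28.13.0/24", "192.168.150.0/24"]

print(normalize(cluster_cidrs["pods"]))
print(find_overlaps(cluster_cidrs, routable))
```

The pod range effectively covers 192.168.144.0 to 192.168.159.255, so a routable network such as 192.168.150.0/24 would be shadowed from inside the cluster.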

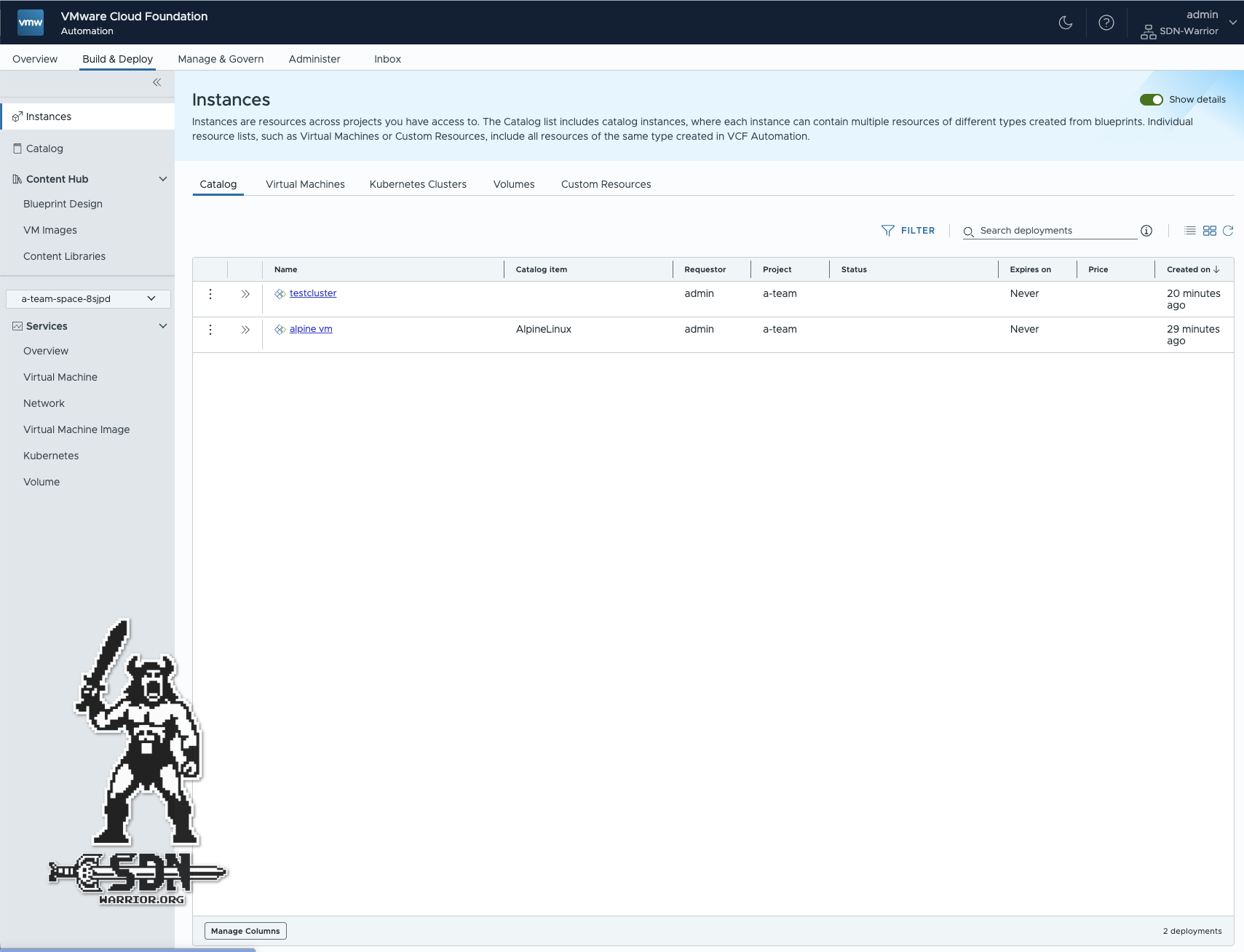

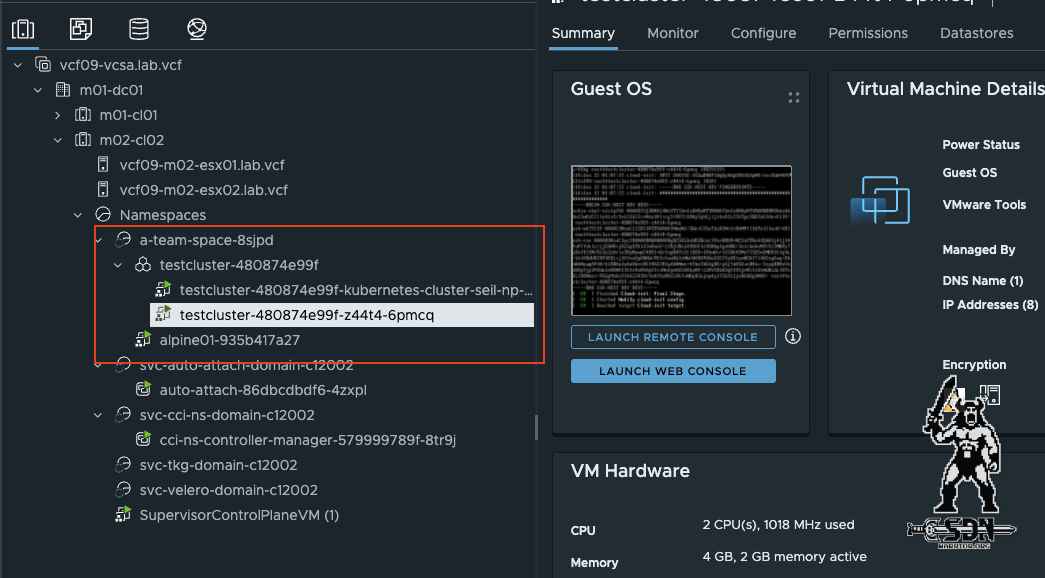

Automation Instances Set

Automation workload in vCenter

Conclusion

VCF 9 Automation is not just a simple update to the familiar automation solution; it is a new product. In this blog, I have covered some super basic tasks, but there is much more that can be done, and I have only scratched the surface. A general rethink of how VMs are managed is needed, because if my VM has been deployed in a vSphere namespace, it is no longer managed via vCenter. This should also make it clear that a simple migration from Automation 8.X to Automation 9 is not easily possible (unless you use Legacy Mode). However, this is the future of the VCF platform. True multitenancy can only be achieved with VCF Automation. A rethink is necessary, and I look forward to exciting new possibilities with Automation.