VCF9 - Dark Site Edge

A short blog on how to deploy a Dark Site Edge in VCF9


2025-11-22 00:30 +0100

Introduction

I haven’t written anything in a while, and I want to change that with another niche topic: VCF9 Edge. This does not refer to the Edge cluster in NSX; VCF Edge is a special form of a VCF instance. VCF offers nine complete designs for this form, and I would like to take a closer look at one special version, the so-called Dark Site Edge.

Welcome to the dark si(d)te

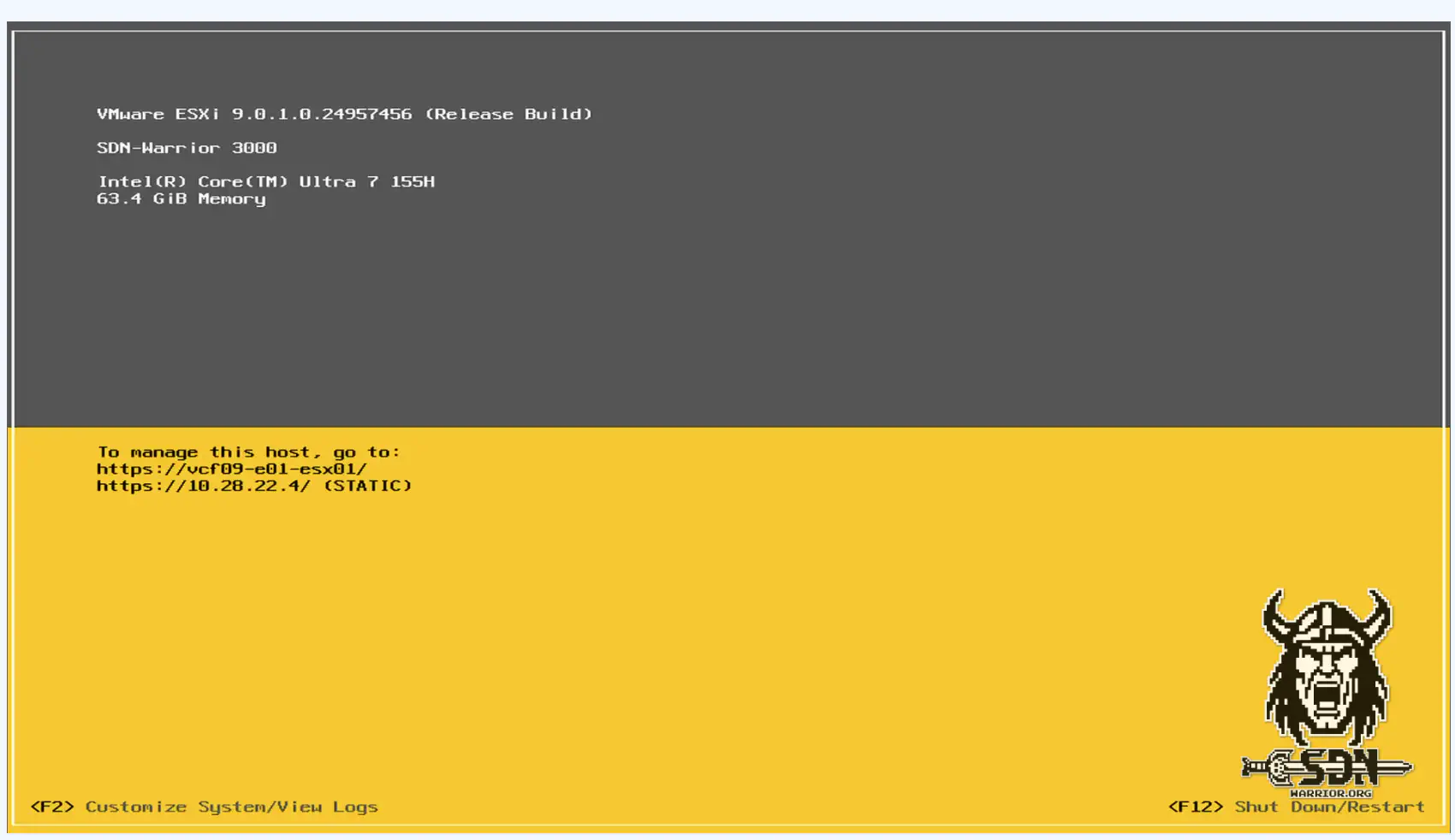

The “Dark Site” has its own independent VCF instance, separate from the primary VCF Fleet. A dark site has limited or no external network connectivity. An independent VCF instance ensures full functionality and management within the isolated environment without relying on external services. Okay, cool, so what do I do with it now? Well, my plan is to deploy a VCF9 instance on a single NUC 14 with an Ultra 7 CPU. I want to run a VKS Supervisor and deploy VM workloads on the dark site using automation. The cool thing is that Dark Site Edge can also be used without the fleet. So I have a VCF instance with VKS, NSX, and vCenter in a case measuring approximately 0.7 liters, or for our friends who don’t use the metric system, with the volume of ¾ of a standard coffee cup. (The volume specifications come from an AI; unfortunately, I can’t work with the non-metric system.)

Prerequisites

In order to set everything up, I first need my NUC with memory tiering. This will initially be loaded with ESX 9.0.1. If you want to know which workarounds are needed for an Intel NUC, you can check here.

Furthermore, an existing fleet is required. Even if the VCF instance works without a permanent connection to the fleet, the fleet is still required for licensing, and therefore the instance must also be in contact at least every 180 days.

In addition, an offline repository is required, as the software must be obtained from somewhere. In our scenario, we assume that I do not have a permanent or stable connection to the fleet.

Design

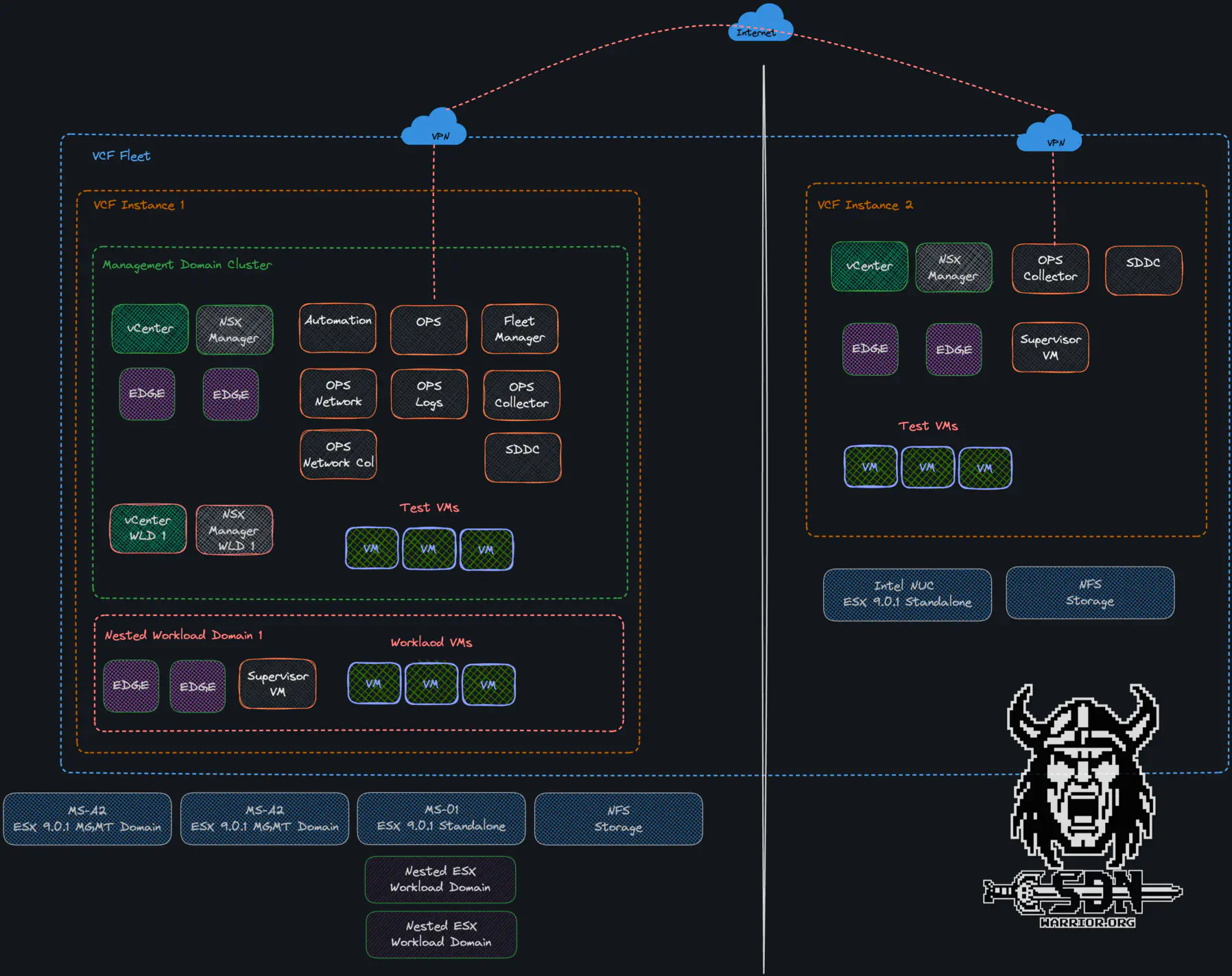

In terms of design, the setup is intended to complement my VCF 9 setup as described here.

Dark Site Design

- Local Management Domain: VCF Instance 2 includes its own Management Domain components (VCF Operations Collector, SDDC Manager, vCenter, and NSX). They are essential for the local management of the compute, network, and storage resources within the dark site’s VCF instance and provide the necessary control plane for the dark site’s infrastructure.

- Local Compute Cluster: The VCF instance is set up as a single node cluster and thus shares resources for management and workload.

- VCF Operations Collector: Data is collected locally during offline mode and synchronized with VCF Operations when connectivity to the central site is established. Data collected here might also be periodically exported or analyzed locally.

Use cases

- Strict Isolation and Security Requirements

- Unreliable or Intermittent Network Connectivity

- Autonomous Operations Requirement - Situations where the edge location needs to function independently for extended periods without external dependencies, including management, monitoring, and software updates.

At least, those are Broadcom’s official best-fit scenarios. Personally, I have another use case. Since the dark site comes with its own NSX instance, I can also test things in NSX without turning on my MS-A2, saving around 150 watts.

Okayyyyyyyy, let’s go!

The whole thing can’t be that difficult, I thought, and naively set to work, and what can I say—I had no idea what wouldn’t work and where I would have to improvise.

William Lam has an article online about how to deploy VCF with a single host. I tried for several hours and failed repeatedly. Maybe I’m too stupid, maybe I overlooked something, but in the end it doesn’t matter, because we’re in unsupported territory here. Slight foreshadowing: this won’t be the last time a plan doesn’t work out. From this point on, I’ll count the workarounds I needed to get the setup to work.

Workaround 1 and 2:

Activating E/P cores and memory tiering. These workarounds are well described and I use them all the time. You can find a description of the workaround in the introduction to the article.

Workaround 3:

Since my NUC has only one 2.5 Gb/s network connection and the VCF installer actually requires 2x 10 Gb/s networking, workaround 3 is needed. The VCF Installer checks during the pre-checks whether suitable network adapters are configured; fortunately, this check can be disabled.

To do this, log in to the VCF Installer via SSH, obtain root privileges with su, and then simply add the following to /etc/vmware/vcf/domainmanager/application.properties

echo "enable.speed.of.physical.nics.validation=false" >> /etc/vmware/vcf/domainmanager/application.properties

Next, restart the services and that’s it.

echo 'y' | /opt/vmware/vcf/operationsmanager/scripts/cli/sddcmanager_restart_services.sh
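Because `>>` appends blindly, running the echo a second time leaves the same key in the file twice. The following is a minimal sketch of an idempotent variant, assuming a POSIX shell with `awk` and `mktemp` available on the appliance. `set_property` is a hypothetical helper name, not something shipped with the VCF Installer.

```shell
#!/bin/sh
# Hypothetical helper: set key=value in a Java-style properties file
# without creating duplicate entries when it is run more than once.
set_property() {
    file=$1; key=$2; value=$3
    tmp=$(mktemp) || return 1
    # Keep every existing line that does not start with the literal "key=".
    if [ -f "$file" ]; then
        awk -v k="$key" 'index($0, k "=") != 1' "$file" > "$tmp"
    fi
    # Append the desired setting exactly once.
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    # Overwrite the contents in place so owner and mode of the file stay as they are.
    cat "$tmp" > "$file" && rm -f "$tmp"
}

# Example call (path taken from the text above):
# set_property /etc/vmware/vcf/domainmanager/application.properties \
#     enable.speed.of.physical.nics.validation false
```

The services still need to be restarted afterwards, exactly as with the plain echo.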

Workaround 3.5:

This is not a software workaround but a hardware workaround. Since I use NFS storage, the VCF installer attempts to mount the NFS share during the precheck. Even if you have configured the VDS in the JSON to use a single NIC, this precheck is always performed with the second NIC. If the server does not have a second NIC, it will always fail. I used an old 1 Gb/s USB network card for this. It has an Intel chip and was recognized without any problems. However, the adapter does not support jumbo frames, and as soon as more than one VLAN is configured, the adapter no longer works. But that’s enough for the precheck.

USB NIC

I just had to assign the USB adapter as an uplink to the temporarily created VDS on the ESX server at the right moment, and the NFS check was successful. The actual deployment ran via the onboard card.

Later I would realize that all the trouble with the USB NIC was actually unnecessary, like many other things, but I want to write down the entire journey here.

Workaround 4:

My first attempt was a greenfield deployment as a single host using the workaround described by William. The interactive GUI of the VCF installer does not allow greenfield deployment with a single host. This can be suppressed with two simple entries.

For VCF 9.0.1, the feature properties file must be modified on the VCF installer using the following command

echo "feature.vcf.vgl-29121.single.host.domain=true" >> /home/vcf/feature.properties

For VCF 9.0, the feature properties file must be modified on the VCF installer using the following command

echo "feature.vcf.internal.single.host.domain=true" >> /home/vcf/feature.properties

Finally, the services on the installer must be restarted.

echo 'y' | /opt/vmware/vcf/operationsmanager/scripts/cli/sddcmanager_restart_services.sh
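Since the flag name differs between the two releases, picking the wrong one silently does nothing. As a small sketch, the choice can be wrapped in a function. `single_host_flag` is a hypothetical name, and treating `9.0.0*` the same as `9.0` is an assumption; only the two flag names themselves come from the commands above.

```shell
#!/bin/sh
# Hypothetical helper: print the single-host feature flag for a VCF version.
single_host_flag() {
    case "$1" in
        9.0.1*)
            echo "feature.vcf.vgl-29121.single.host.domain=true" ;;
        9.0|9.0.0*)
            # Assumption: 9.0.0.x builds behave like plain 9.0.
            echo "feature.vcf.internal.single.host.domain=true" ;;
        *)
            echo "unknown VCF version: $1" >&2
            return 1 ;;
    esac
}

# Example call (path taken from the text above):
# single_host_flag 9.0.1 >> /home/vcf/feature.properties
```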

The workaround does not enable the interactive installer for this; it only allows a single-host cluster to be deployed via a JSON file. The deployment looked fine at first, but then stalled after deploying the SDDC Manager.

I found this lovely error message in the VCF Installer log.

"Failed to validate domain spec", "nestedErrors": {"errorCode": "INVALID_NUMBER_OF_MINIMUM_HOSTS", "arguments": ["2", "vLCM"], "message": "Minimum 2 hosts are required for vLCM cluster with external storage to be created."

Since it didn’t work after several attempts, I needed a new idea. What if I built a 2-node cluster? The problem was that I didn’t have a second NUC available, and everything was supposed to run on one NUC. Then I had the idea of building a nested host (on one of my MS-01s), deploying VCF, and then turning off the nested host. Nice idea, but it leads me to

Workaround 5:

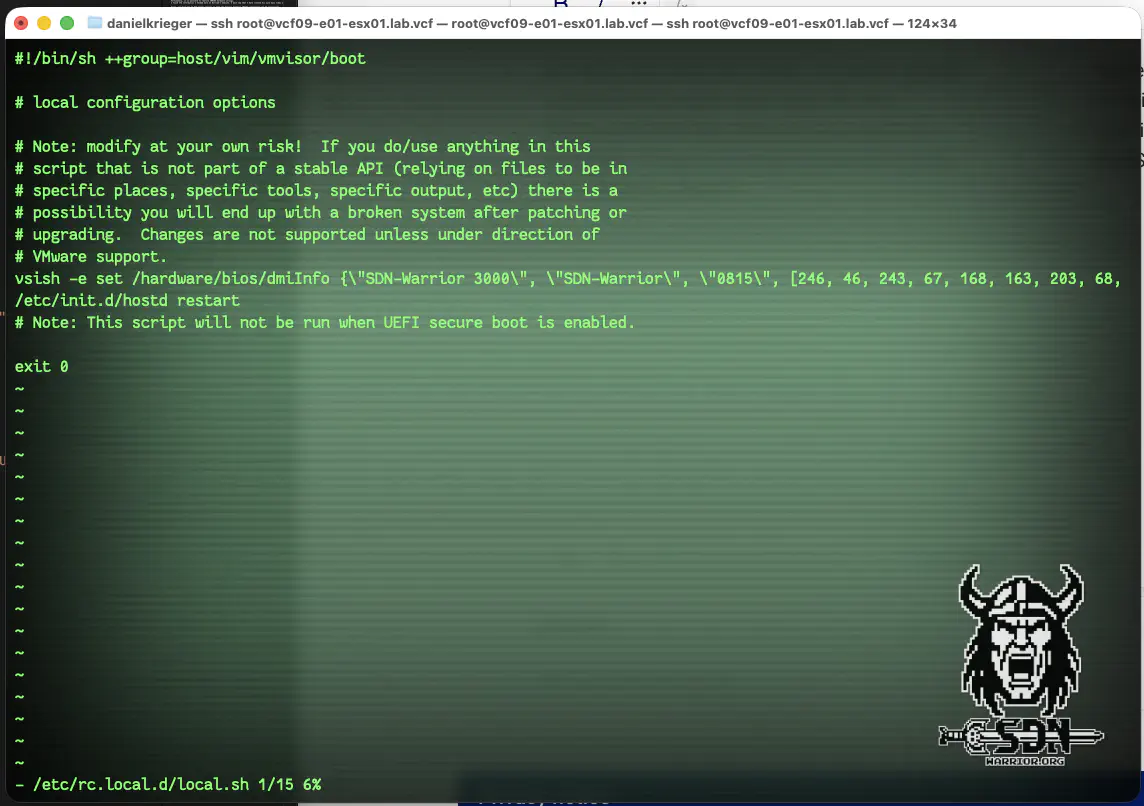

Since VCF does not allow you to create a cluster when different manufacturers are involved (in my case, an ASUSTeK NUC and a Minisforum MS-01), I had to find a way around this. Fortunately, it is possible to rewrite SMBIOS hardware strings. I found the information I needed here on William’s website. I must say that I have visited his site many times over the last few days.

First, you must set an ESX kernel setting so that the default hardware SMBIOS information can be overwritten. To do this, edit the file /bootbank/boot.cfg and add the following to the line with kernelopt:

kernelopt=autoPartition=FALSE ignoreHwSMBIOSInfo=TRUE

After that, the server must be rebooted.
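Editing `boot.cfg` by hand is easy to get wrong, so here is a minimal sketch of the same change as a function. `add_kernelopt` is a hypothetical helper, and the `.bak` copy is my own addition, not part of the original procedure. It assumes the file contains exactly one line starting with `kernelopt=` and that the option has no characters that are special to `sed`.

```shell
#!/bin/sh
# Hypothetical helper: append an option to the kernelopt= line of a
# boot.cfg-style file unless that line already contains it.
add_kernelopt() {
    cfg=$1; opt=$2
    # Nothing to do if the kernelopt line already carries the option.
    if grep '^kernelopt=' "$cfg" | grep -q -F -- "$opt"; then
        return 0
    fi
    # Keep a copy of the untouched file before editing it.
    cp "$cfg" "$cfg.bak" || return 1
    # "&" re-emits the matched line; the option is appended after a space.
    sed "s/^kernelopt=.*/& ${opt}/" "$cfg.bak" > "$cfg"
}

# Example call (path and option taken from the text above):
# add_kernelopt /bootbank/boot.cfg ignoreHwSMBIOSInfo=TRUE
```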

Next, I use William’s PowerShell script to generate a vsish command that I can use to rewrite my ESXi SMBIOS information.

Function Generate-CustomESXiSMBIOS {
    param(
        [Parameter(Mandatory=$true)][String]$Uuid,
        [Parameter(Mandatory=$true)][String]$Model,
        [Parameter(Mandatory=$true)][String]$Vendor,
        [Parameter(Mandatory=$true)][String]$Serial,
        [Parameter(Mandatory=$true)][String]$SKU,
        [Parameter(Mandatory=$true)][String]$Family
    )

    # Convert the UUID into its 16 bytes and print each byte as a decimal number
    $guid = [Guid]$Uuid
    $guidBytes = $guid.ToByteArray()
    $decimalPairs = foreach ($byte in $guidBytes) {
        "{0:D2}" -f $byte
    }
    $uuidPairs = $decimalPairs -join ', '

    # Print the vsish command that sets the custom SMBIOS strings
    Write-Host -ForegroundColor Yellow "`nvsish -e set /hardware/bios/dmiInfo {\`"${Model}\`", \`"${Vendor}\`", \`"${Serial}\`", [${uuidPairs}], \`"1.0.0\`", 6, \`"SKU=${SKU}\`", \`"${Family}\`"}`n"
}

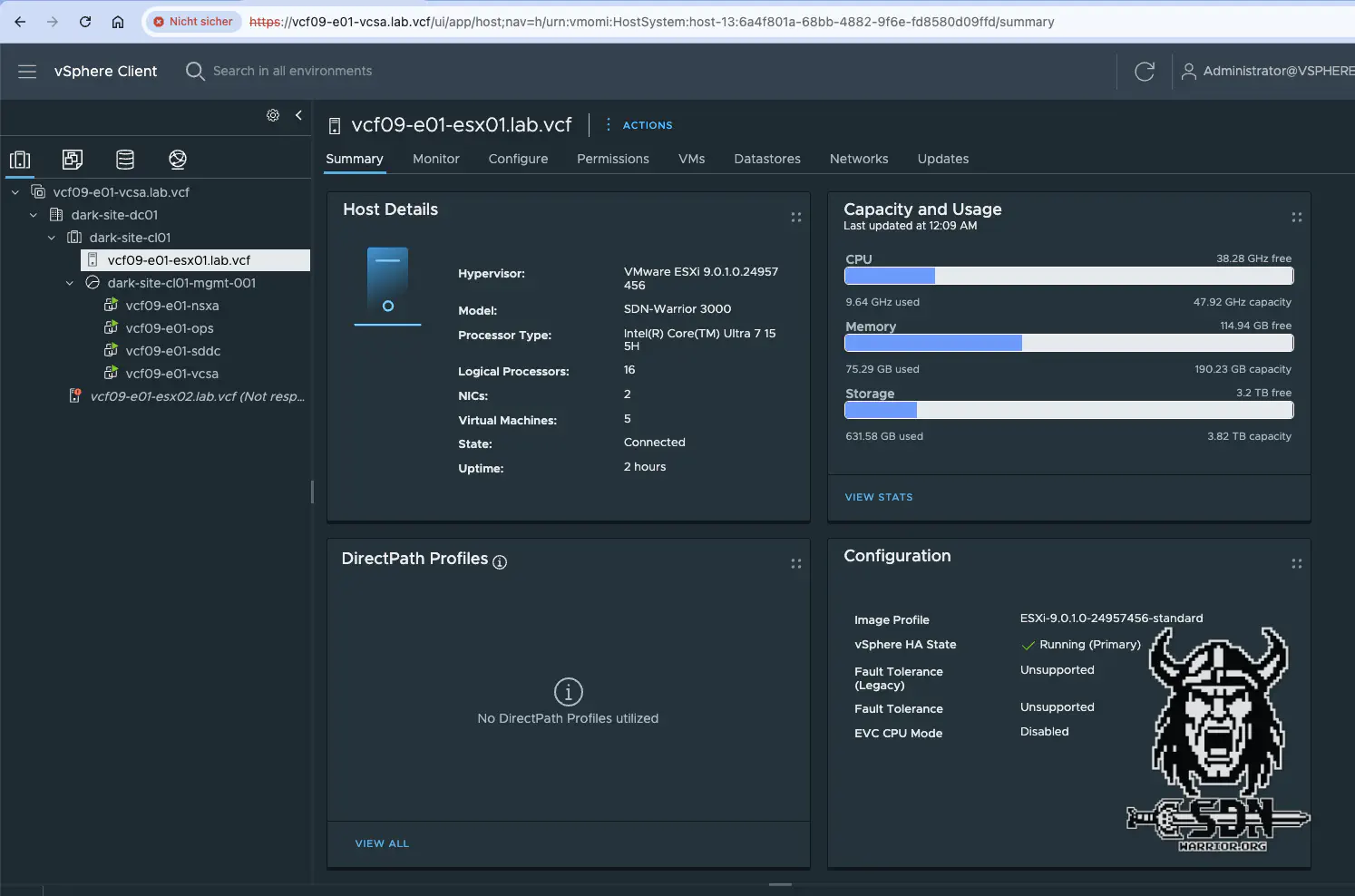

Generate-CustomESXiSMBIOS -Uuid "43f32ef6-a3a8-44cb-9137-31cb4c6c520b" -Model "SDN-Warrior 3000" -Vendor "SDN-Warrior" -Serial "0816" -SKU "NUC" -Family "sdn-warrior.org"

The UUID and serial number should be unique for each host. As a result, I now receive a string that I can simply send to the ESX server via SSH:

vsish -e set /hardware/bios/dmiInfo {\"SDN-Warrior 3000\", \"SDN-Warrior\", \"0815\", [246, 46, 243, 67, 168, 163, 203, 68, 145, 55, 49, 203, 76, 108, 82, 10], \"1.0.0\", 6, \"SKU=NUC\", \"sdn-warrior.org\"}

/etc/init.d/hostd restart
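The list of numbers in the vsish command is the UUID as 16 decimal bytes in the order that .NET’s `Guid.ToByteArray()` returns them: the first three groups are byte-swapped, the last two are not. The sketch below reproduces that conversion in plain shell so the result can be checked without PowerShell. `uuid_to_dmi_bytes` is a hypothetical helper name. For the UUID passed to the function call above, the last byte works out to 11 (0x0b); the command shown in the text ends in 10 and carries serial 0815, so it presumably stems from a run with different values.

```shell
#!/bin/sh
# Hypothetical helper: print the 16 decimal byte values that .NET's
# Guid.ToByteArray() yields for a UUID string.
# Note: unlike the PowerShell "{0:D2}" format, single-digit values are
# not padded with a leading zero here.
uuid_to_dmi_bytes() {
    hex=$(printf '%s' "$1" | tr -d '-')
    [ "${#hex}" -eq 32 ] || { echo "expected 32 hex digits" >&2; return 1; }
    out=""
    # Byte positions in the order ToByteArray emits them:
    # groups 1-3 little-endian, groups 4-5 unchanged.
    for i in 3 2 1 0 5 4 7 6 8 9 10 11 12 13 14 15; do
        pair=$(printf '%s' "$hex" | cut -c "$((i * 2 + 1))-$((i * 2 + 2))")
        out="${out}${out:+, }$(printf '%d' "0x${pair}")"
    done
    printf '%s\n' "$out"
}

uuid_to_dmi_bytes "43f32ef6-a3a8-44cb-9137-31cb4c6c520b"
# prints: 246, 46, 243, 67, 168, 163, 203, 68, 145, 55, 49, 203, 76, 108, 82, 11
```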

However, I wanted the setting to be permanent. For this to work, you simply need to insert the two lines into /etc/rc.local.d/local.sh. After that, the ESXi SMBIOS information is set after every reboot.
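The lines have to end up before the `exit 0` that closes a default `local.sh`, otherwise they never run. A minimal sketch of doing that safely and repeatably follows. `persist_in_local_sh` is a hypothetical helper, and it assumes that `awk` and `mktemp` are available on the host and that the script contains a plain `exit 0` line.

```shell
#!/bin/sh
# Hypothetical helper: insert a command line before the first "exit 0" of a
# local.sh-style script, unless that exact line is already present.
persist_in_local_sh() {
    script=$1; line=$2
    # Skip if the exact line is already in the script.
    if grep -q -x -F -- "$line" "$script"; then
        return 0
    fi
    tmp=$(mktemp) || return 1
    # ENVIRON is used instead of "awk -v" so backslashes in the command
    # (such as \" in the vsish call) are passed through unchanged.
    LINE="$line" awk '
        /^exit 0[ \t]*$/ && !seen { print ENVIRON["LINE"]; seen = 1 }
        { print }
        END { if (!seen) print ENVIRON["LINE"] }
    ' "$script" > "$tmp"
    # Overwrite the contents in place so the script keeps its mode.
    cat "$tmp" > "$script" && rm -f "$tmp"
}

# Example calls (commands taken from the text above, vsish arguments shortened):
# persist_in_local_sh /etc/rc.local.d/local.sh 'vsish -e set /hardware/bios/dmiInfo {...}'
# persist_in_local_sh /etc/rc.local.d/local.sh '/etc/init.d/hostd restart'
```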

Make your own Vendor

Congratulations, I just became my own hardware manufacturer. May I introduce the SDN Warrior 3000!

SDN-Warrior 3000

But now the deployment should work, right? - NOPE!

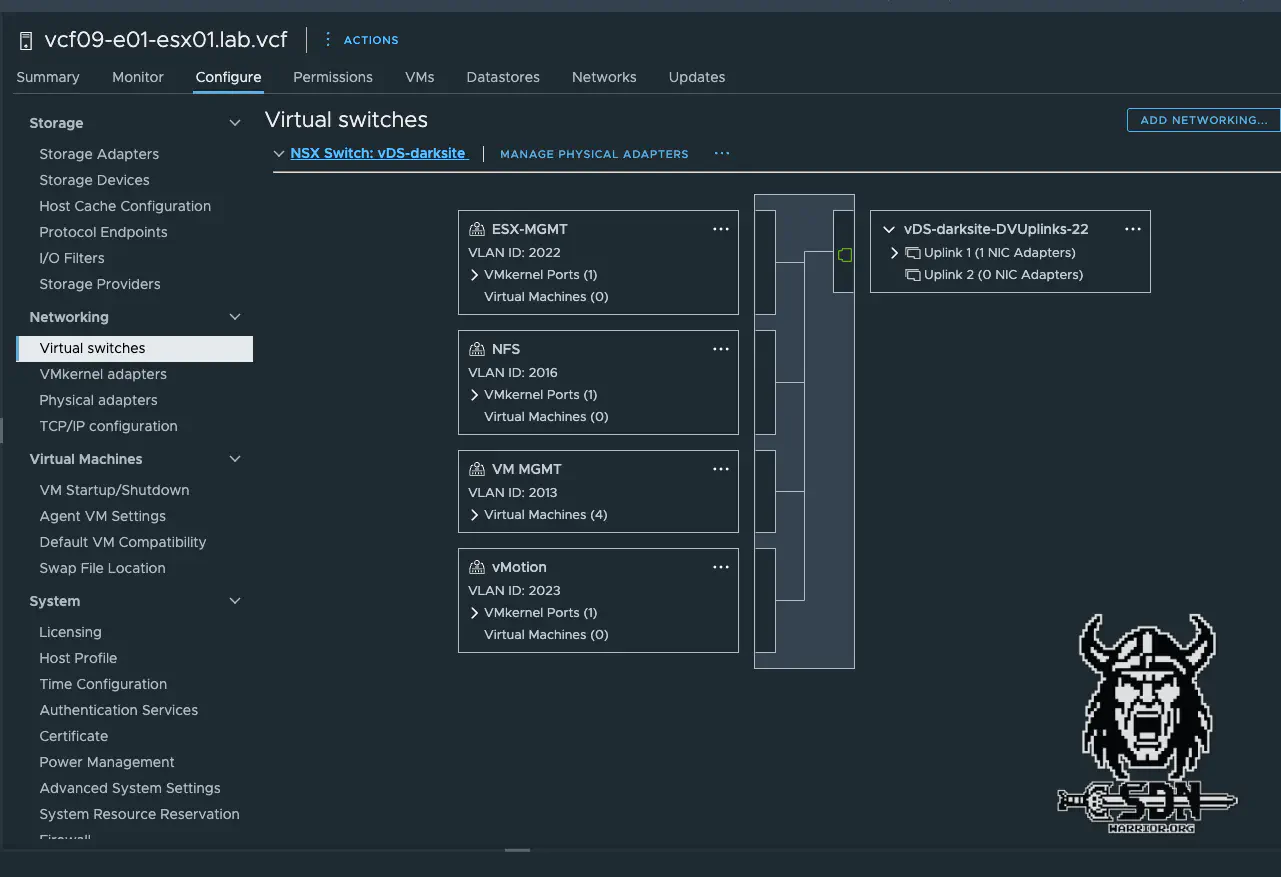

I hadn’t thought about the network. VCF actually requires two network adapters for good reason. The initial setup of ESX is with a standard switch and, after the SDDC has been deployed and the cluster has been formed, it is migrated to a VDS that is initially formed with the second network card. Of course, this is not possible without a second network card. That was also the moment when I decided against a greenfield deployment and switched to Converge.

Redesign and Converge

Actually, I’ve long since reached the point where the project should be considered a failure, but I really wanted a VCF cluster on a NUC. To make that work, however, I have to migrate to a VDS, otherwise Converge will fail. First, I manually deployed a vCenter and created my vSphere cluster with my two SDN Warrior 3000 servers (a NUC and a nested host—a dream team).

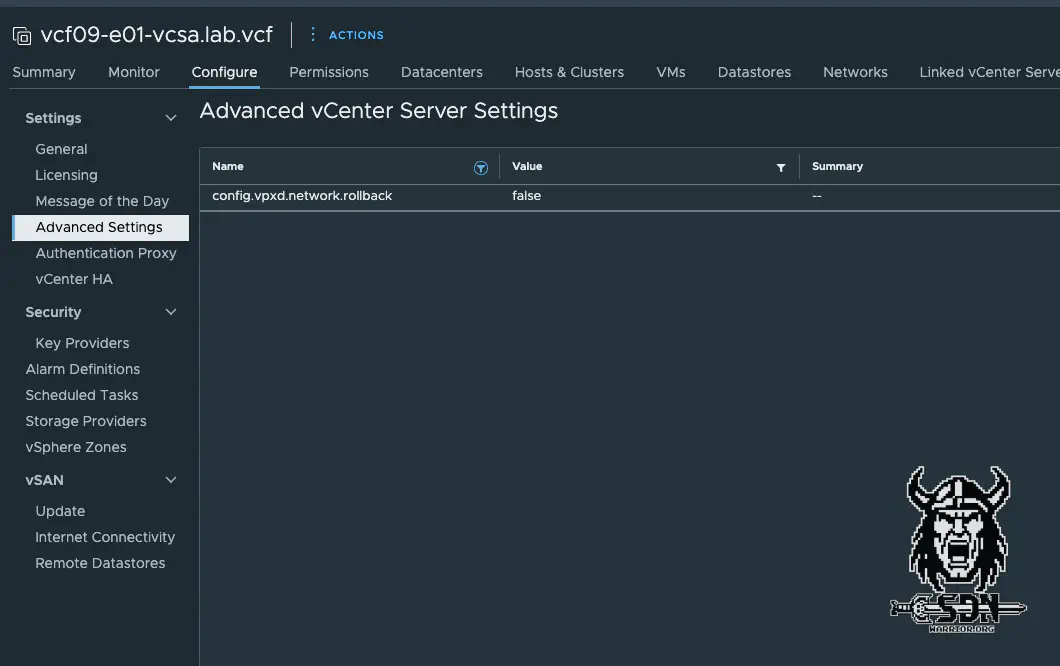

As far as migration to the VDS is concerned, it’s a chicken-and-egg problem. The VDS does offer the option of migrating all VMK adapters and the VM network at once, but as soon as I do that, there is a network interruption and shortly afterwards the vSphere protection mechanism kicks in and rolls back the configuration. Fortunately, you can disable this, which brings me to

Workaround 6:

To disable this behavior, you can disable the function in vCenter under Advanced Settings. To do this, set config.vpxd.network.rollback to false.

vCenter Advanced Settings

Half of the work is done. At least I could now migrate the VMK to the VDS, just like the VMK for NFS. However, my vCenter is then left hanging on the standard switch and can no longer be connected to another port group. To make this possible directly via the ESX server, you have to change the port group to which the vCenter is to be migrated from static binding to ephemeral. This allows the vCenter VM to be moved to the port group on the VDS directly from the ESX host, after which it is reachable again. Wonderful, the migration to the VDS with a single adapter was successful. One quick note: the distributed port group should be reconfigured before the vCenter becomes unavailable.

happy little VDS

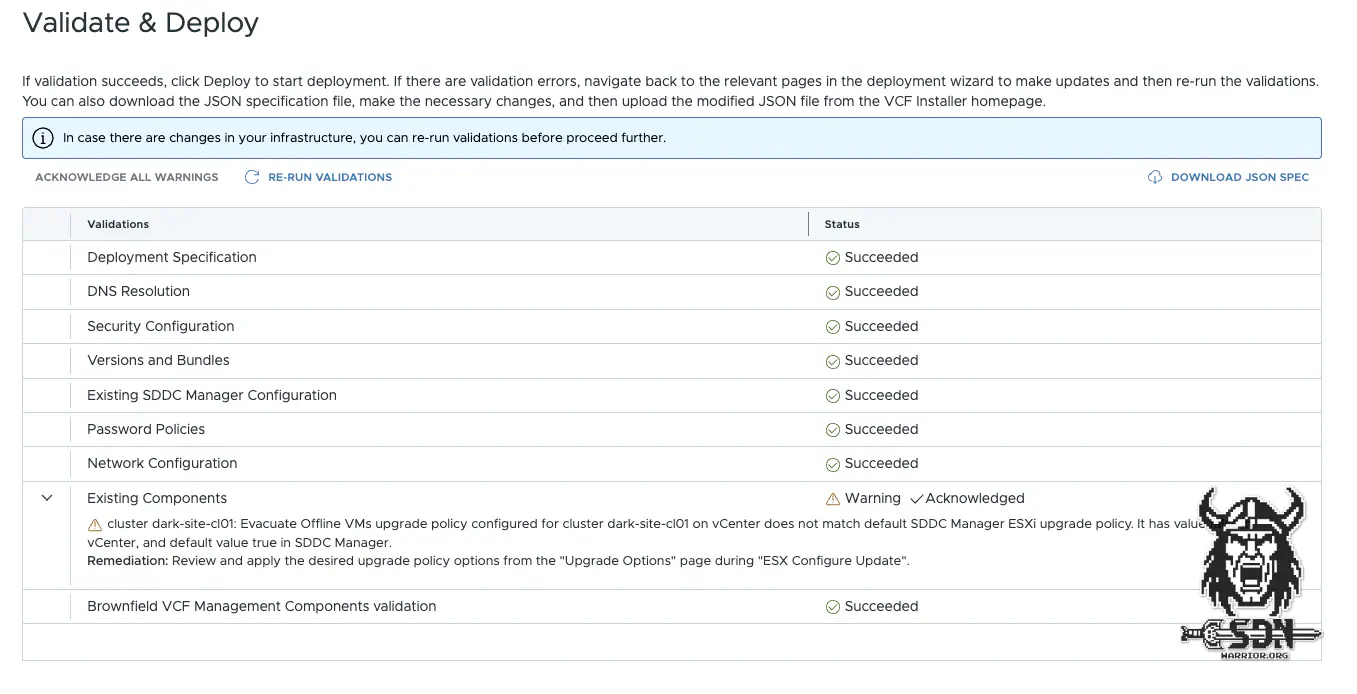

Since I now had a 2-node cluster with VDS, vMotion, and so on, the Converge could begin without any further hacks via the VCF installer, right? Well, not quite. There was still a small error, but it was easy to fix. The installer checks whether the VDS has two uplinks, but it does not check whether they actually have a link. So I quickly gave the VDS a second uplink and, lo and behold, the validation ran successfully.

Converge precheck

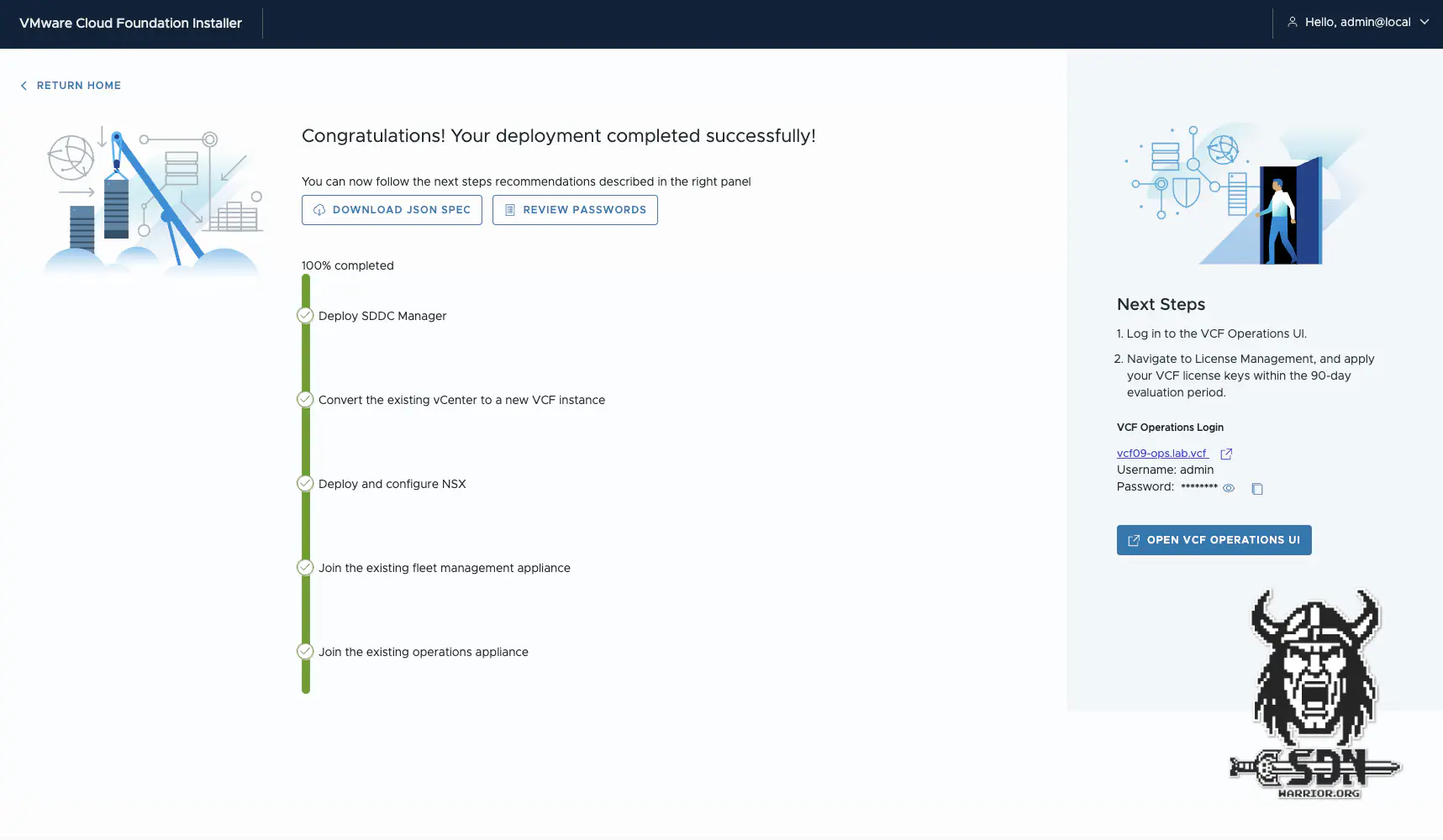

Converge

The actual Converge process is relatively unspectacular. Broadcom and VMware have done a lot to make it as easy as possible (unless you have an adventurous setup like mine). You no longer need Python scripts, as was the case with VCF 5.x for brownfield imports. The process is relatively simple: the VCF installer first deploys the SDDC, then converts the existing vCenter to the new VCF instance, rolls out NSX and a cloud proxy, and then joins the instance to Fleet and VCF Operations. It can be that simple.

Converge done!

Are you lying to us?

One might ask this question, because the plan was to build a single node cluster. On that point, I can only say: mission successfully failed. But there is a but. The nested ESX host is only a temporary solution, and in the future I will only power it on for lifecycle operations, as the NUC’s performance is more than sufficient. I simply removed the nested ESX host from the cluster and shut it down.

Single node cluster

So, technically speaking, I cheated a little bit, but as you can see in the screenshot, I deployed a working VCF instance. What’s still missing are the NSX Edge and my supervisor cluster, but looking at the clock, that’s a topic for another blog article.

just one more thing

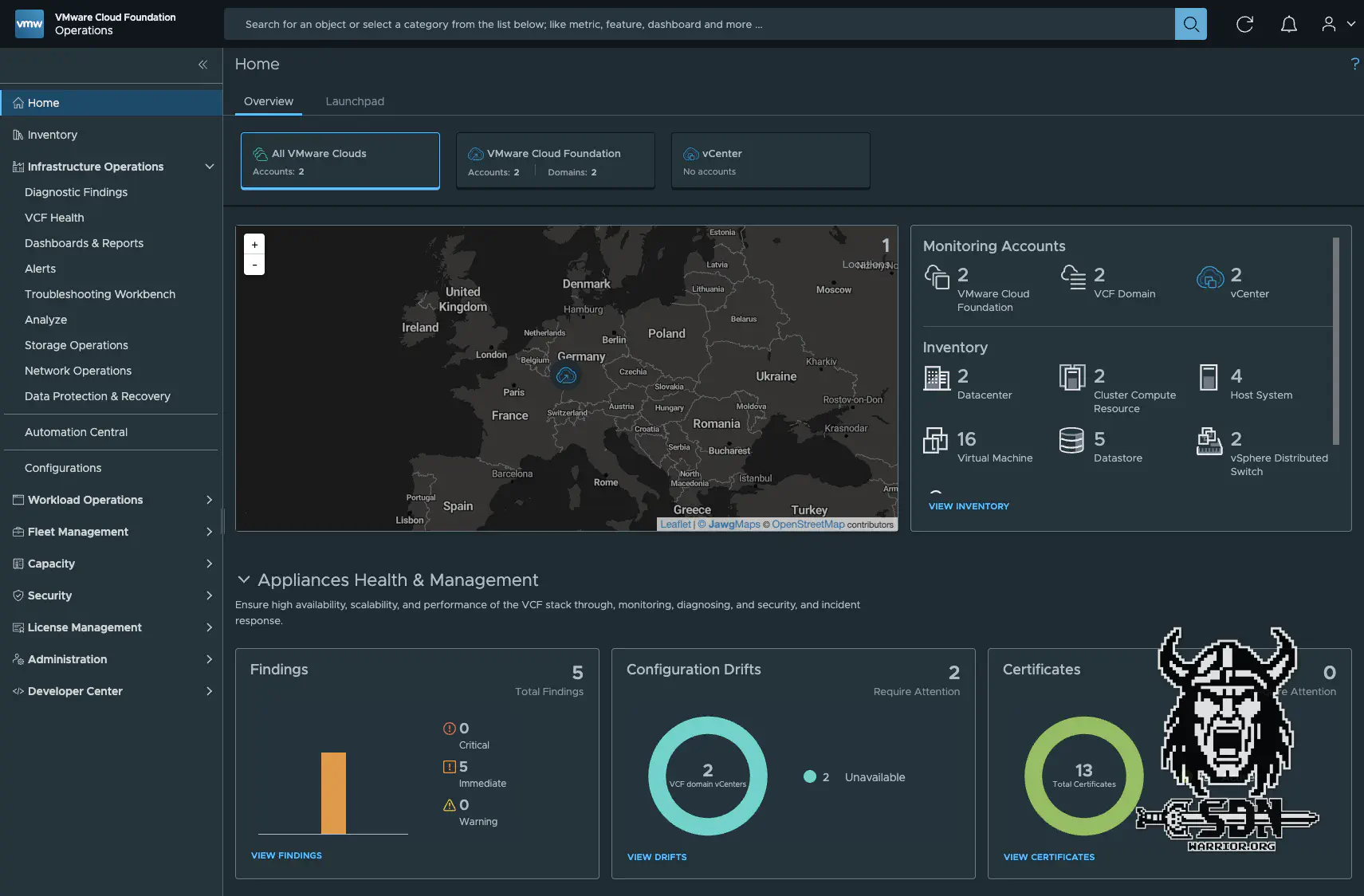

To ensure that I still have logs and metrics from my cluster even when the cluster is disconnected or VCF Operations is down, I have enabled data persistence on the cloud proxy. This means that logs are stored until the environment reaches my fleet again.

Final remarks and summary

It was an exciting and thrilling project that definitely cost me a lot of sleep, as I worked on it tirelessly for the last three days. However, I couldn’t accept that it wasn’t possible or that I couldn’t do it. It also gave me back the motivation I had been lacking to write something again. I must also say that I learned a lot. And as always, I have to express my gratitude to William, because at times I was on the verge of despair.

VCF Fleet

I hope you enjoyed the article and maybe it will help some of you, or you can laugh at me and my journey through the valley of tears.