VCF9 - Dark Site Edge - Part 2

A short blog on how to deploy a Dark Site Edge in VCF9 - Part 2


2025-12-09 00:00 +0100

Introduction

In my last Dark Site article, I showed how to deploy a Dark Site Edge on a host (with a temporary second host). However, this didn’t sit well with me, as I believe it should also be possible with just one host and without any additional tools. Spoiler alert: it works, and today I’m going to write down how to do it.

Of course, we need a few workarounds here, and I was unable to create the setup with external storage such as NFS. So today I will use vSAN ESA, even though it requires significantly more resources than NFS.

So buckle up, we’re going in.

Hardware modification

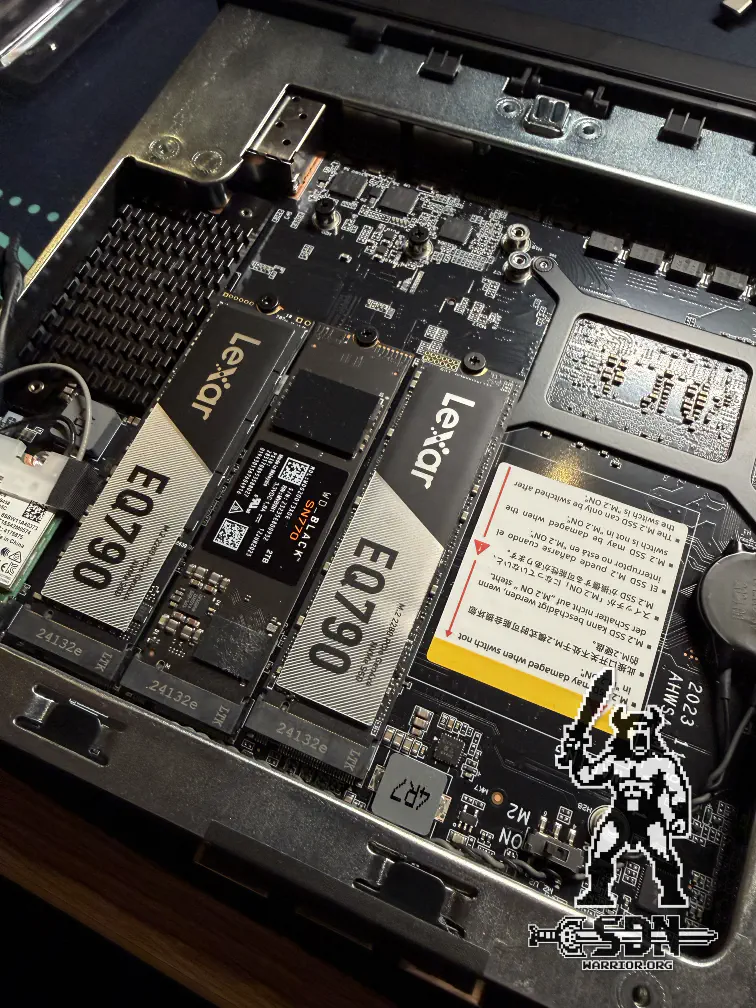

Since a greenfield deployment with external storage is not possible with only one node, I want to use vSAN for this deployment, and for that I first need to expand my hardware. Fortunately, I bought my NVMes before the price chaos. I need a total of three: a 1 TB NVMe (PCIe4) for memory tiering, a 2 TB NVMe (PCIe3) for the ESX installation and local storage (this could be a much smaller disk, but I had it anyway), and another 2 TB NVMe (PCIe3) for vSAN.

NVMe - MS-01

I could have saved myself one NVMe by booting ESX from a USB stick, because the memory tiering disk can then be shared with the system disk. However, I only have one USB stick left, I need it for firmware updates, and I don't particularly like USB sticks anyway. Now you might ask why I have two PCIe3 disks. Well, I don't: with the default BIOS settings, the MS-01 runs only one slot at PCIe4 and the others at PCIe3, as otherwise there is a risk of overheating. In addition, the MS-01 will sooner or later become part of my Nutanix cluster, and I will need the disks for that anyway.

Prerequisites

As with my first attempt, a deployed fleet is required. It must consist of at least one VCF Operations, vCenter, NSX Manager, VCF Operations Cloud Proxy, and Fleet Manager.

Workarounds

What would an SDN-warrior deployment be without workarounds? That’s right, work and no fun.

Workaround 1 (E&P Cores):

Since I use the MS-01, the first thing that comes into play is the workaround for the Big.LITTLE architecture. I have described this here.

Workaround 2 (Memory Tiering):

Memory tiering is also used, as described in the same article as the Big.LITTLE workaround.

Workaround 3 (Single host deployment):

I have described this here. However, with my 9.0.1 setup, I needed both entries in the feature.properties file.

echo "feature.vcf.vgl-29121.single.host.domain=true" >> /home/vcf/feature.properties

echo "feature.vcf.internal.single.host.domain=true" >> /home/vcf/feature.properties
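The two `echo >>` commands above append unconditionally, so running them a second time leaves duplicate entries in the file. If you expect to repeat this step, a minimal sketch that adds each flag only when it is missing could look like this. The `add_flag` helper is hypothetical, and the guard simply skips everything on machines where `/home/vcf` does not exist.

```shell
#!/bin/sh
# Sketch: idempotently add the single-host feature flags on the VCF Installer.
# add_flag is a hypothetical helper, not part of any VMware tooling.
PROPS_DIR=/home/vcf
PROPS="$PROPS_DIR/feature.properties"

# Append a line to a file only if that exact line is not already present.
add_flag() {
  file="$1"
  line="$2"
  # -q quiet, -x whole-line match, -F fixed string (dots are not regex wildcards)
  grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Only act where the installer's home directory exists
if [ -d "$PROPS_DIR" ]; then
  add_flag "$PROPS" "feature.vcf.vgl-29121.single.host.domain=true"
  add_flag "$PROPS" "feature.vcf.internal.single.host.domain=true"
  # Show what ended up in the file
  grep 'single.host.domain' "$PROPS"
fi
```

Running it twice leaves exactly one copy of each flag, just like running the original two commands once.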

Workaround 4 (Bypass vSAN HCL):

Now we come to something new, at least for me. In VCF 9.0.1, you can finally bypass the HCL check for vSAN ESA. Gone are the days of custom vSAN HCL JSON files and VIB installations on ESX.

To do this, simply log in to the installer via SSH and enter the following in the shell.

echo "vsan.esa.sddc.managed.disk.claim=true" >> /etc/vmware/vcf/domainmanager/application-prod.properties

systemctl restart domainmanager
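The same idea works for the vSAN ESA property. The sketch below, assuming you run it as root on the VCF Installer, appends the property only if it is not already present and restarts `domainmanager` only in that case. `ensure_line` is a hypothetical helper; the file path, property, and service name are taken from the commands above. It does not remove an existing line for the same key with a different value.

```shell
#!/bin/sh
# Sketch: enable the vSAN ESA disk-claim property idempotently on the VCF Installer.
# ensure_line is a hypothetical helper; path, property and service come from the post.
APP_PROPS=/etc/vmware/vcf/domainmanager/application-prod.properties
ENTRY="vsan.esa.sddc.managed.disk.claim=true"

# Append a line unless it is already present.
# Returns 0 if the line was appended, non-zero if it was already there
# (or if the write failed).
ensure_line() {
  file="$1"
  line="$2"
  if grep -qxF "$line" "$file" 2>/dev/null; then
    return 1
  fi
  printf '%s\n' "$line" >> "$file"
}

# Only act on a machine that actually has the domainmanager config file
if [ -f "$APP_PROPS" ]; then
  if ensure_line "$APP_PROPS" "$ENTRY"; then
    # Restart only when the file was changed
    systemctl restart domainmanager
  fi
  # Show the matching line(s) with line numbers
  grep -nF "vsan.esa.sddc.managed.disk.claim" "$APP_PROPS"
fi
```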

Now that most of the work has been done, we can proceed with the deployment. Just a quick heads-up: there will be a small workaround after the SDDC Manager has been successfully deployed.

Deployment

Now comes the really exciting part. Unfortunately, deployment via the guided GUI is not possible with vSAN on a single host, but that's what JSON is for. There are a few good VCF JSON generators available online, such as this one, or you can simply take my JSON and modify it. As with the first Dark Site setup, I am using my existing Fleet, which means I don't have to deploy VCF Operations, Automation, Operations for Logs, or even the Fleet Manager.

To obtain the SSL thumbprints, simply log in to the ESX server and use the following command. Adjust the FQDN in the query for each thumbprint.

echo | openssl s_client -connect vcf09-e01-esx01.lab.vcf:443 2>/dev/null | openssl x509 -noout -fingerprint -sha256 | cut -d= -f2
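The JSON below needs thumbprints for the ESX host, VCF Operations, and the Fleet Manager, so a small loop saves some copy-and-paste. This is a sketch that wraps the command above in two hypothetical helper functions and takes the FQDNs as arguments. It also passes `-servername` so the TLS handshake carries the requested host name (SNI); that flag is an addition to the original command.

```shell
#!/bin/sh
# Sketch: print "FQDN THUMBPRINT" for every FQDN passed as an argument.
# Helper names are hypothetical; the openssl pipeline is the one from the post.

# Read PEM text on stdin and print the SHA-256 fingerprint (AA:BB:...).
fingerprint_from_pem() {
  openssl x509 -noout -fingerprint -sha256 | cut -d= -f2
}

# Fetch the certificate presented on port 443 and fingerprint it.
get_thumbprint() {
  echo | openssl s_client -connect "$1:443" -servername "$1" 2>/dev/null \
    | fingerprint_from_pem
}

for fqdn in "$@"; do
  printf '%s %s\n' "$fqdn" "$(get_thumbprint "$fqdn")"
done
```

For example, `sh thumbprints.sh vcf09-e03-esx01.lab.vcf vcf09-ops.lab.vcf fleet.lab.vcf` (the script name is hypothetical) prints one line per FQDN used in the JSON.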

Here is the JSON file I used for VCF 9.0.1. Maybe I should create a GitHub repository for things like this.

{
  "sddcId": "dark-site03",
  "vcfInstanceName": "dark-site03",
  "workflowType": "VCF",
  "version": "9.0.1.0",
  "ceipEnabled": false,
  "skipEsxThumbprintValidation": true,
  "dnsSpec": {
    "nameservers": [
      "192.168.11.2",
      "192.168.100.254"
    ],
    "subdomain": "lab.vcf"
  },
  "ntpServers": [
    "192.168.12.1"
  ],
  "vcenterSpec": {
    "vcenterHostname": "vcf09-e03-vcsa.lab.vcf",
    "rootVcenterPassword": "xxx",
    "vmSize": "small",
    "storageSize": "",
    "adminUserSsoUserName": "administrator@vsphere.local",
    "adminUserSsoPassword": "xxx",
    "ssoDomain": "vsphere.local",
    "useExistingDeployment": false
  },
  "clusterSpec": {
    "clusterName": "dark-site03",
    "datacenterName": "e03"
  },
  "datastoreSpec": {
    "vsanSpec": {
      "failuresToTolerate": 0,
      "vsanDedup": false,
      "esaConfig": {
        "enabled": true
      },
      "datastoreName": "vsanDatastore"
    }
  },
  "nsxtSpec": {
    "nsxtManagerSize": "medium",
    "nsxtManagers": [
      {
        "hostname": "vcf09-e03-nsxa.lab.vcf"
      }
    ],
    "vipFqdn": "vcf09-e03-nsx.lab.vcf",
    "useExistingDeployment": false,
    "nsxtAdminPassword": "xxx",
    "nsxtAuditPassword": "xxx",
    "rootNsxtManagerPassword": "xxx",
    "skipNsxOverlayOverManagementNetwork": true,
    "ipAddressPoolSpec": {
      "name": "host-overlay",
      "description": "",
      "subnets": [
        {
          "cidr": "10.28.24.0/24",
          "gateway": "10.28.24.1",
          "ipAddressPoolRanges": [
            {
              "start": "10.28.24.21",
              "end": "10.28.24.30"
            }
          ]
        }
      ]
    },
    "transportVlanId": "2024"
  },
  "vcfOperationsSpec": {
    "nodes": [
      {
        "hostname": "vcf09-ops.lab.vcf",
        "rootUserPassword": "xxx",
        "type": "master",
        "sslThumbprint": "XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX"
      }
    ],
    "adminUserPassword": "xxx",
    "applianceSize": "xsmall",
    "useExistingDeployment": true,
    "loadBalancerFqdn": null
  },
  "vcfOperationsFleetManagementSpec": {
    "hostname": "fleet.lab.vcf",
    "rootUserPassword": "xxx",
    "adminUserPassword": "xxx",
    "useExistingDeployment": true,
    "sslThumbprint": "XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX"
  },
  "vcfOperationsCollectorSpec": {
    "hostname": "vcf09-e03-ops.lab.vcf",
    "applianceSize": "small",
    "rootUserPassword": "xxx",
    "useExistingDeployment": false
  },
  "hostSpecs": [
    {
      "hostname": "vcf09-e03-esx01.lab.vcf",
      "credentials": {
        "username": "root",
        "password": "xxx"
      },
      "sslThumbprint": "XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX:XX"
    }
  ],
  "networkSpecs": [
    {
      "networkType": "MANAGEMENT",
      "subnet": "10.28.22.0/24",
      "gateway": "10.28.22.1",
      "subnetMask": null,
      "includeIpAddress": null,
      "includeIpAddressRanges": null,
      "vlanId": "2022",
      "mtu": "1500",
      "teamingPolicy": "loadbalance_loadbased",
      "activeUplinks": [
        "uplink1",
        "uplink2"
      ],
      "standbyUplinks": null,
      "portGroupKey": "e03-cl01-vds01-pg-esx-mgmt"
    },
    {
      "networkType": "VM_MANAGEMENT",
      "subnet": "10.28.13.0/24",
      "gateway": "10.28.13.1",
      "subnetMask": null,
      "includeIpAddress": null,
      "includeIpAddressRanges": null,
      "vlanId": "2013",
      "mtu": "1500",
      "teamingPolicy": "loadbalance_loadbased",
      "activeUplinks": [
        "uplink1",
        "uplink2"
      ],
      "standbyUplinks": null,
      "portGroupKey": "e03-cl01-vds01-pg-vm-mgmt"
    },
    {
      "networkType": "VMOTION",
      "subnet": "10.28.23.0/24",
      "gateway": "10.28.23.1",
      "subnetMask": null,
      "includeIpAddress": null,
      "includeIpAddressRanges": [
        {
          "startIpAddress": "10.28.23.20",
          "endIpAddress": "10.28.23.29"
        }
      ],
      "vlanId": "2023",
      "mtu": "1500",
      "teamingPolicy": "loadbalance_loadbased",
      "activeUplinks": [
        "uplink1",
        "uplink2"
      ],
      "standbyUplinks": null,
      "portGroupKey": "e03-cl01-vds01-pg-vmotion"
    },
    {
      "networkType": "VSAN",
      "subnet": "10.28.16.0/24",
      "gateway": "10.28.16.1",
      "subnetMask": null,
      "includeIpAddress": null,
      "includeIpAddressRanges": [
        {
          "startIpAddress": "10.28.16.40",
          "endIpAddress": "10.28.16.50"
        }
      ],
      "vlanId": "2016",
      "mtu": "9000",
      "teamingPolicy": "loadbalance_loadbased",
      "activeUplinks": [
        "uplink1",
        "uplink2"
      ],
      "standbyUplinks": null,
      "portGroupKey": "e03-cl01-vds01-pg-vsan"
    }
  ],
  "dvsSpecs": [
    {
      "dvsName": "e03-cl02-vds01",
      "networks": [
        "MANAGEMENT",
        "VM_MANAGEMENT",
        "VMOTION",
        "VSAN"
      ],
      "mtu": 9000,
      "nsxtSwitchConfig": {
        "transportZones": [
          {
            "transportType": "OVERLAY",
            "name": "VCF-Created-Overlay-Zone"
          }
        ]
      },
      "vmnicsToUplinks": [
        {
          "id": "vmnic0",
          "uplink": "uplink1"
        },
        {
          "id": "vmnic1",
          "uplink": "uplink2"
        }
      ],
      "nsxTeamings": [
        {
          "policy": "LOADBALANCE_SRCID",
          "activeUplinks": [
            "uplink1",
            "uplink2"
          ],
          "standByUplinks": []
        }
      ],
      "lagSpecs": null
    }
  ],
  "sddcManagerSpec": {
    "hostname": "vcf09-e03-sddc.lab.vcf",
    "useExistingDeployment": false,
    "rootPassword": "xxx",
    "sshPassword": "xxx",
    "localUserPassword": "xxx"
  }
}
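Before uploading, it can be worth checking the file locally, since a missing comma or a leftover placeholder otherwise only shows up in the installer. The following is a sketch, assuming `python3` is available on the machine where you edit the JSON. `check_spec` is a hypothetical helper, and the placeholder patterns simply match the `xxx` and `XX:XX:...` values used in the template above.

```shell
#!/bin/sh
# Sketch: sanity-check a VCF deployment JSON before uploading it.
# check_spec is a hypothetical helper; python3 is assumed to be installed.
check_spec() {
  spec="$1"
  # 1) Syntax: python3 -m json.tool exits non-zero on malformed JSON
  if ! python3 -m json.tool "$spec" > /dev/null; then
    echo "invalid JSON: $spec" >&2
    return 1
  fi
  # 2) Template placeholders that still need real values (prints the lines)
  if grep -nE '"(XX:)+XX"|"xxx"' "$spec"; then
    echo "placeholders left in: $spec" >&2
    return 1
  fi
  echo "OK: $spec"
}

for f in "$@"; do
  check_spec "$f" || exit 1
done
```

For example, `sh check-spec.sh dark-site03.json` (both file names hypothetical) either prints `OK: dark-site03.json` or points at the problem.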

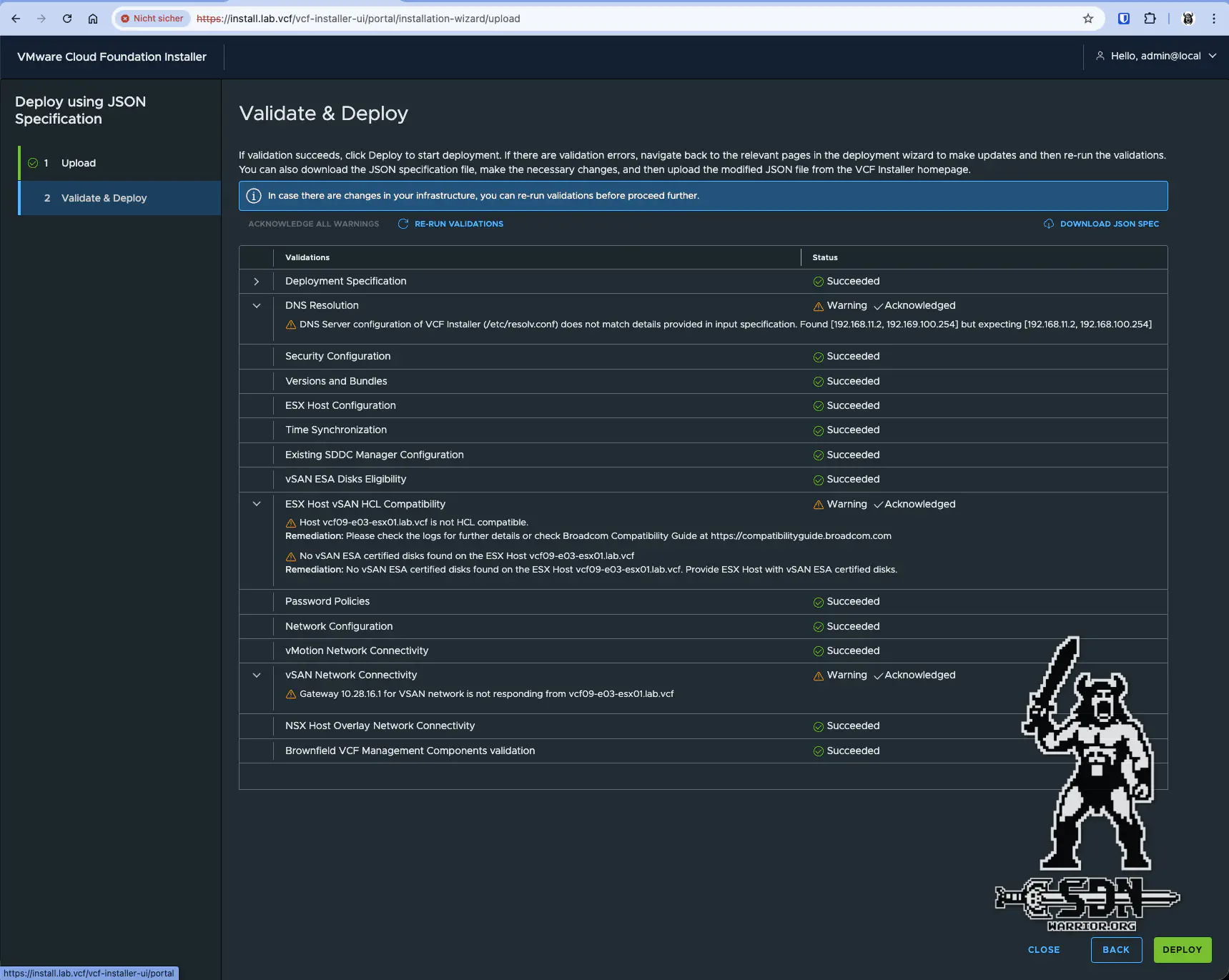

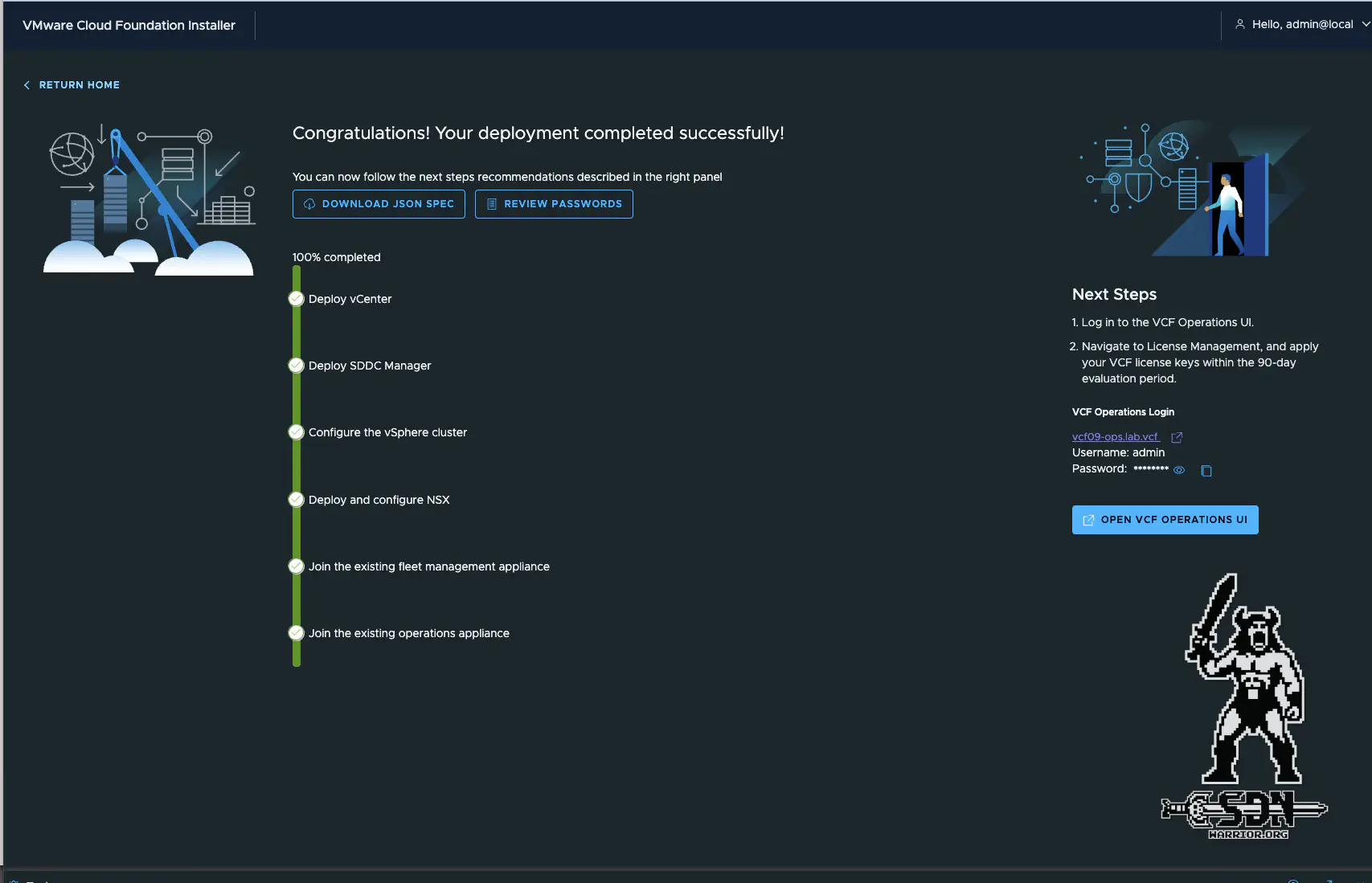

Once the typing is done, you can simply upload the JSON file via the installer’s GUI and start the validation. In the best case, it should now look like this.

VCF Installer

You can ignore the DNS warning: I simply made a fat-finger mistake when deploying my VCF Installer and mistyped one of the two DNS servers. The ESX host vSAN warning is expected, as I am using 100% unsupported consumer hardware (server vendors hate this trick). The warning about the vSAN network gateway can also be ignored: this network does not need to route, so it has no gateway. So refill your coffee cup and press Deploy.

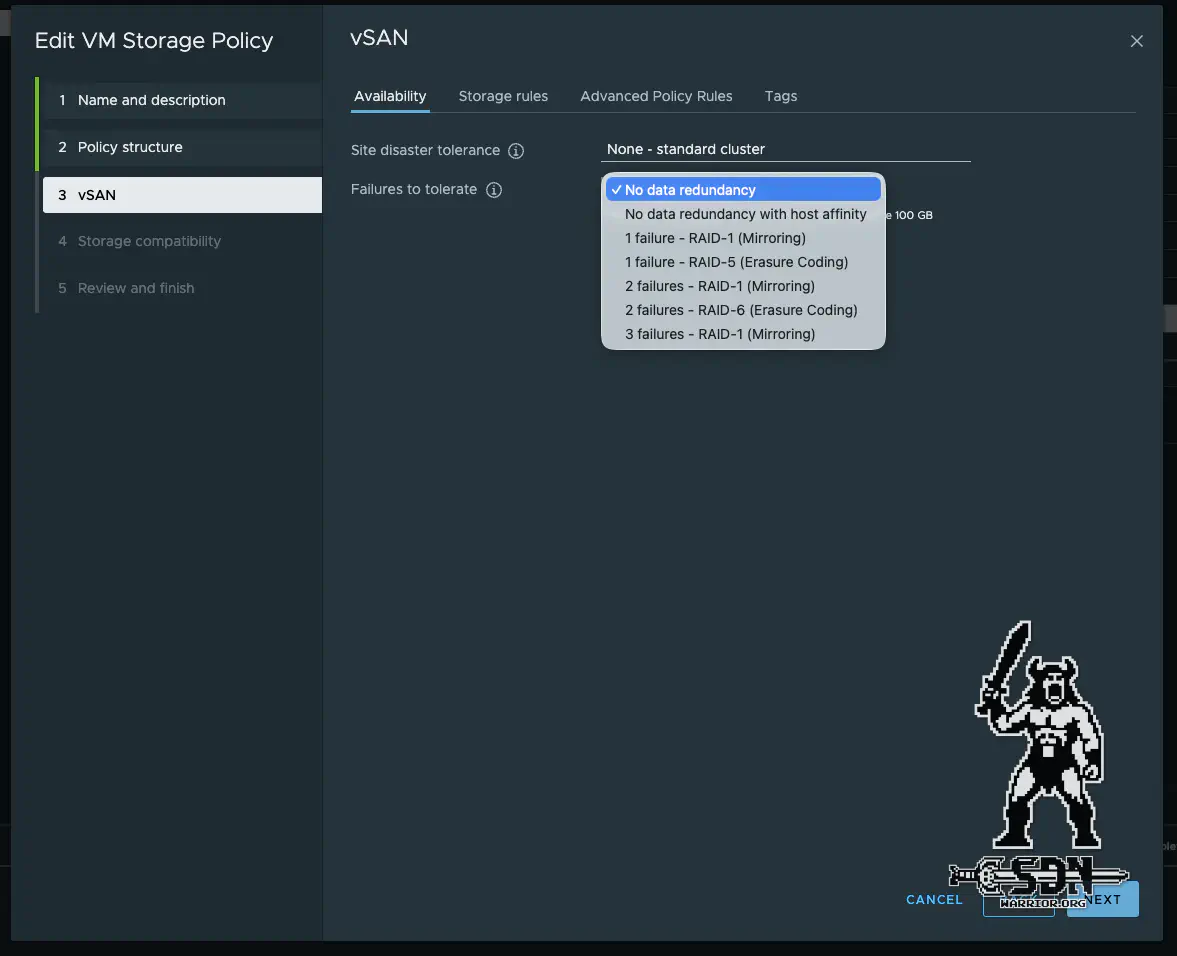

As mentioned earlier, one final workaround is needed: the installation will fail at the “Configure the vSphere cluster” step because a RAID-1 vSAN policy is set by default, which a single host obviously cannot satisfy.

(Final) Workaround 5 (vSAN Policy):

The solution is super simple. In vCenter, open Policies and Profiles via the burger menu and find the vSAN policy that the installer has set as the default. This is usually a policy named after the VCF instance with “Optimal Datastore Default Policy -Raid1” appended. Lovely name. Edit this policy and set Failures to tolerate to “No data redundancy”. Needless to say, this setting should never be used in production, at least 99.9% of the time…

Dangerzone - vSAN Policy

After that, you can simply resume the deployment in the VCF Installer with Retry. It should now run through without any problems. For me, the whole process took about 2 hours.

VCF Installer

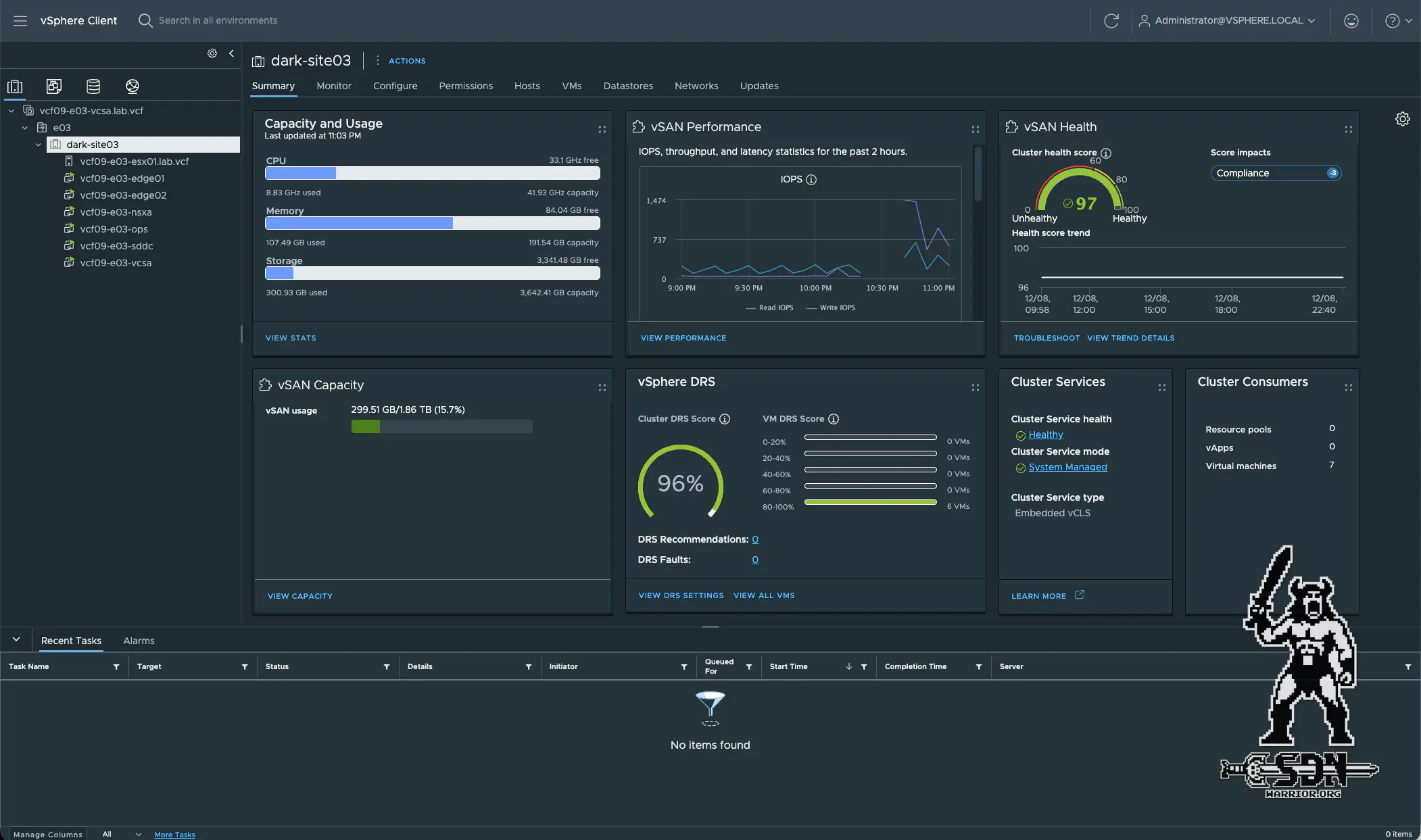

Nice, another successful deployment completed after a few workarounds, and this time really only on one host. I then deployed two more edge VMs and built my BGP peering. The Kubernetes cluster is still missing, but that’s a story for another blog. The finished VCF instance now looks like this.

VCF Instance

Summary

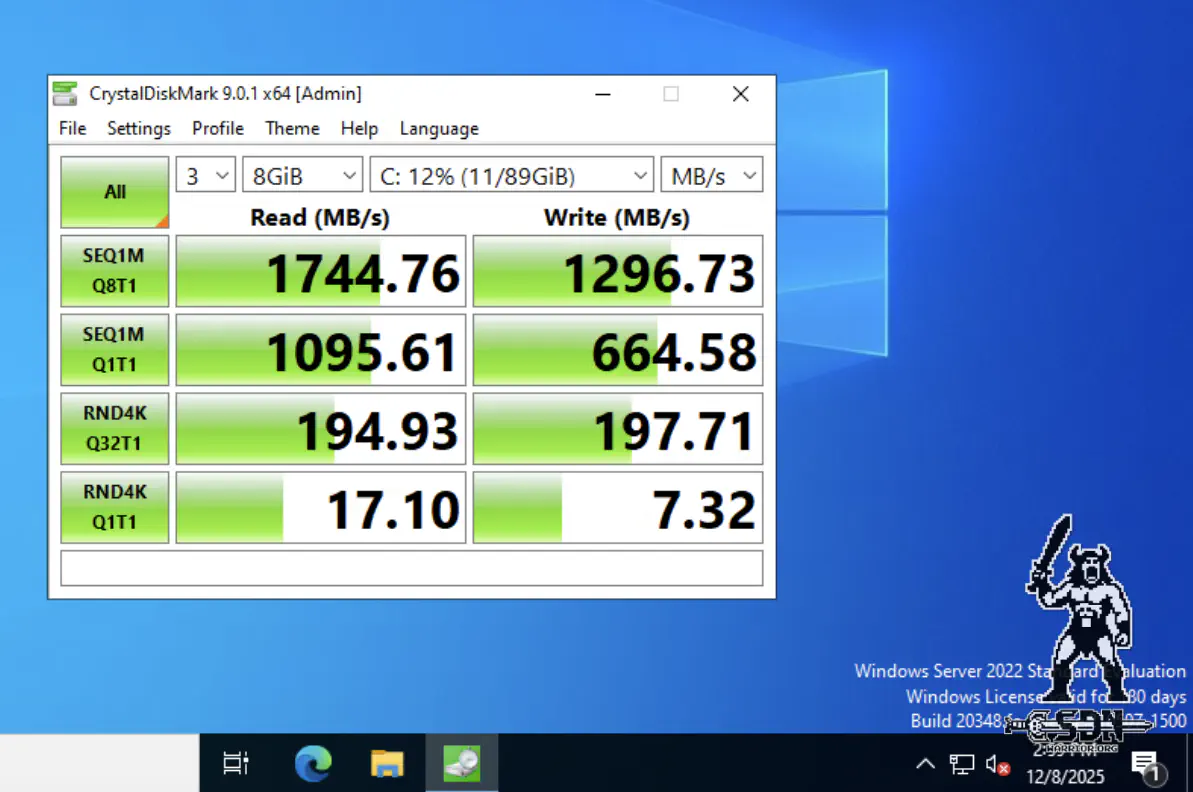

Significantly fewer workarounds, but more RAM required than with NFS: that's my feedback in a nutshell. Perhaps I should say a few words about performance, as this setup uses NVMes I had no experience with before. At first glance, the Lexar EQ790s perform quite well: their read speeds are similar to a Samsung 990 Pro and their write speeds slightly lower, but the bottom line is that I couldn't get a Samsung 990 Pro at an affordable price.

vSAN Crystal Disk Mark Performance

I also did a quick test with a Windows Server VM and Crystal Disk Mark. As always, this isn't a 100% accurate test, but it's just meant to show that this setup is perfectly usable as a lab environment: I can run a few test VMs and Kubernetes workloads without always having to turn on multiple servers. So I achieved my goal 100%. I hope I was able to give you some helpful ideas for your own setups.