VCF9 - Full-blown VCF 9 on 2 MS-A2 PCs

A short article containing all the workarounds for rolling out a complete VCF9 on 2 MS-A2s.


2025-10-24 21:00 +0200

Introduction

In the past, I built all my labs as nested environments, which worked well, but my demands on the lab keep growing. I want to try more, including areas I have neglected so far. The idea of a redesign took shape, and I knew my hardware had more to offer. Above all, I wanted to work with VCF Automation, because it is essential for multi-tenant environments and replaces vCloud Director. And let’s be honest: automation is a resource hog.

The idea was quite simple: I would take my two MS-A2 servers and install a VCF management domain on them. Both servers have 128 GB of RAM. That should be enough, right? Unfortunately, not really, but there is still memory tiering. And that could have been the end of this blog article, if it weren’t for AMD Ryzen. Fortunately, there are workarounds, and thanks to William Lam, I found them.

But let’s take it slowly, one step at a time.

Hardware Setup

My plan is to build a two-node cluster without vSAN but with memory tiering. NFS storage is provided by my good old Unraid system. I started directly with VCF 9.0.1 as a greenfield deployment, and I will continue to build my workload domains nested. This lets me run a complete VCF 9 environment, including VCF Operations for Network, Operations for Logs, and Automation, on three physical hosts.

MS-A2

First, I install a 1 TB Samsung 990 Pro in the first slot of each MS-A2. By default, the BIOS sets the first slot to PCIe 4.0 x4 and the other slots to PCIe 3.0 x4. You can change this in the BIOS, but you risk overheating the NVMe drives, because the active cooler does not seem to be sufficient for all of them. Here is an excerpt from the official FAQ:

To prevent the SSD from overheating, the two SSDs on the right side of the back support a maximum speed of PCIE4.0x4, but are set to PCIE3.0x4 by default. You can adjust it to PCIe Gen4 speed in OnBoard Setting in BIOS, but it may cause the SSD to overheat, which may result in blue screen, freeze, or data loss.

In the middle slot, I have a 2 TB NVMe for booting and as local storage. The third slot is free in case I ever want to use vSAN.
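To put the default slot speeds into numbers, here is a small Python sketch that computes the theoretical one-direction bandwidth of a PCIe x4 link from the line rates (8 GT/s per lane for PCIe 3.0, 16 GT/s for PCIe 4.0, both with 128b/130b encoding). It ignores packet and protocol overhead, so real NVMe throughput is lower; the function name is only for illustration.

```python
# Line rate per lane in gigatransfers per second; PCIe 3.0 and 4.0 both use 128b/130b encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0}


def pcie_bandwidth_gbytes(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, counting only line-encoding overhead."""
    gigabits_on_wire = GT_PER_LANE[gen] * lanes       # one bit per transfer per lane
    payload_gigabits = gigabits_on_wire * 128 / 130   # strip the 128b/130b encoding overhead
    return payload_gigabits / 8                       # bits -> bytes


if __name__ == "__main__":
    for gen in (3, 4):
        print(f"PCIe {gen}.0 x4: {pcie_bandwidth_gbytes(gen, 4):.2f} GB/s")
```

It prints about 3.94 GB/s for PCIe 3.0 x4 and about 7.88 GB/s for PCIe 4.0 x4, so a Gen4 drive in a slot left at the Gen3 default gets roughly half the link bandwidth.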

Memory Tiering and First Steps After Setting Up ESX

For VCF, SSH and NTP must be enabled after setting up the ESX server. It is also important that only the first 10Gb/s adapter is used. The second must be left unconfigured, as VCF deployment involves migrating from a standard switch to a distributed switch. Also, don’t forget to recreate the TLS certificate, otherwise the VCF installer will throw an error when adding the hosts.

- Cert regeneration:

```shell
/sbin/generate-certificates
```

To enable memory tiering, run the following commands on the ESX command line and then reboot the host.

- This command turns on memory tiering:

```shell
esxcli system settings kernel set -s MemoryTiering -v TRUE
```

- This command selects the NVMe device for the memory tier:

```shell
esxcli system tierdevice create -d /vmfs/devices/disks/<Your NVME>
```

- This command sets the size of the NVMe tier as a percentage of DRAM (0-400%):

```shell
esxcli system settings advanced set -o /Mem/TierNvmePct -i 200
```

I use a factor of 200% because I need enough headroom to move everything to one host during lifecycle operations. So what’s the problem? The commands are well known, and I already described them in an article from 2024.
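How the factor translates into capacity: /Mem/TierNvmePct sizes the NVMe tier as a percentage of the host's DRAM. The Python sketch below (the helper name is hypothetical, and the 0-400% range check is taken from the command description above) does that arithmetic for an MS-A2 with 128 GB of RAM and the 200% used here.

```python
def tiered_capacity_gb(dram_gb: float, tier_nvme_pct: int) -> tuple[float, float]:
    """Return (NVMe tier size, total tiered memory) in GB for a /Mem/TierNvmePct value."""
    if not 0 <= tier_nvme_pct <= 400:
        raise ValueError("TierNvmePct must be between 0 and 400 percent")
    nvme_tier_gb = dram_gb * tier_nvme_pct / 100  # tier size relative to DRAM
    return nvme_tier_gb, dram_gb + nvme_tier_gb


if __name__ == "__main__":
    nvme, total = tiered_capacity_gb(128, 200)
    print(f"NVMe tier: {nvme:.0f} GB, total: {total:.0f} GB")
```

For 128 GB at 200%, this gives a 256 GB NVMe tier and 384 GB of memory in total per host.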

Ryzen Workaround No. 1

The problem lies with the AMD Ryzen processor and does not affect AMD EPYC or Intel Core, Core Ultra, or Xeon CPUs. To be fair, Ryzen is not on the compatibility list, and the VMware homelab community has long favored Intel NUCs, with many VMware employees building their own labs on them. Whether that is why Intel NUCs work so well, I can only speculate. But what exactly are the problems?

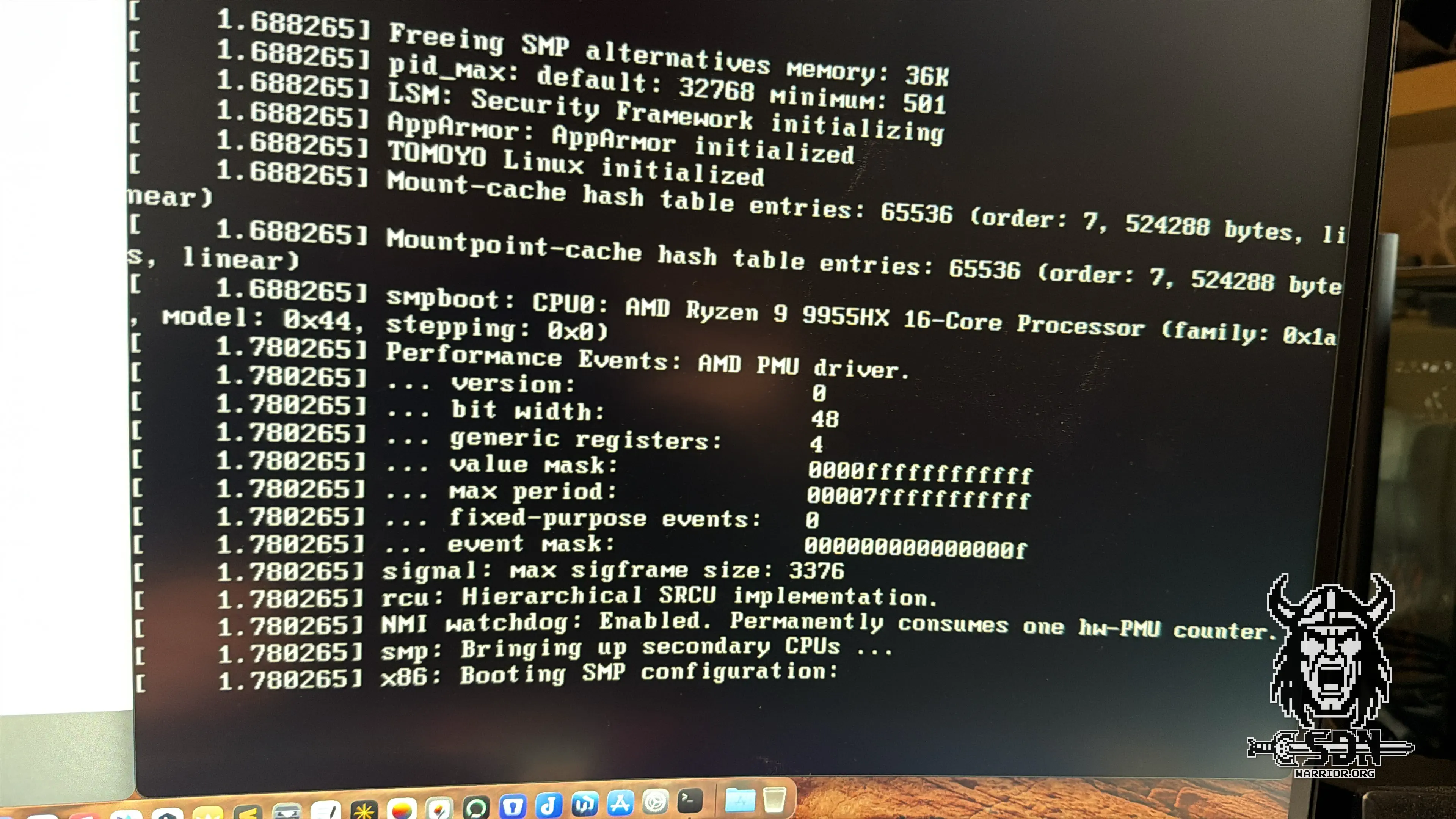

Well, the error was a bit strange. The VCF installer deployed vCenter first, and everything ran smoothly up to that point. The first problems arose with the SDDC Manager, which is deployed after vCenter: it simply refused to boot. The VM powered on successfully and the Photon OS splash screen appeared, but after that the screen stayed black. My first suspicion was my NFS storage, as I had had a lot of reliability problems with my NFS shares after an Unraid update before my vacation. However, that turned out to be a dead end.

I then tried to deploy the VCF Installer (aka SDDC Manager) manually on local storage and got the same result. The same thing happened with NSX Manager: it boots a little further than SDDC Manager, but it also stops quite early, without any error message.

NSX Manager stops booting

After a long Google search, I found the solution on William Lam’s blog.

For memory tiering to work properly with AMD Ryzen, a VM advanced setting must be configured. Setting it on each individual VM does not scale, of course, and it also does not work with the automated VCF deployment. Fortunately, it can also be set globally: log in to each ESX host via SSH and run the following command:

```shell
echo 'monitor_control.disable_apichv = "TRUE"' >> /etc/vmware/config
```

The workaround takes effect immediately, and the ESX host does not need to be rebooted. VMs that were already running must be powered off and on again before the setting applies to them.
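One caveat with the echo one-liner: running it a second time appends the line again. Below is a minimal Python sketch of an idempotent alternative. `ensure_setting` is a hypothetical helper, not part of ESX; it assumes you run it somewhere with Python 3 and write access to the file (the path is a parameter, so it can be tried on a copy first), and it only checks whether the key already exists, not its value.

```python
from pathlib import Path

# Line from the workaround above, in the usual `key = "value"` form of /etc/vmware/config.
SETTING = 'monitor_control.disable_apichv = "TRUE"'


def ensure_setting(config_path: str, setting: str = SETTING) -> bool:
    """Append `setting` unless a line with the same key already exists.

    Returns True if the file was changed, False if the key was already present.
    Only the key is compared; an existing line with a different value is left alone.
    """
    path = Path(config_path)
    key = setting.split("=", 1)[0].strip()
    text = path.read_text() if path.exists() else ""
    if any(line.split("=", 1)[0].strip() == key for line in text.splitlines()):
        return False
    # Start on a fresh line if the file does not end with a newline.
    prefix = "\n" if text and not text.endswith("\n") else ""
    with path.open("a") as config:
        config.write(prefix + setting + "\n")
    return True
```

Calling `ensure_setting("/etc/vmware/config")` once or several times leaves exactly one entry for the key.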

Ryzen Workaround No. 2

The next workaround concerns the NSX Edge and its Data Plane Development Kit (DPDK), which lacks support for Ryzen CPUs. I already described how to solve this problem manually in my MS-A2 test.

However, William has a better solution here too: I am using his PowerShell script, which he has kindly made publicly available. The actual fix has not changed; the script simply comments out the CPU check, and the Edge VM then works as it should. I didn’t notice any performance issues either: I get 10 Gb/s north-south through my edges without any problems.

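```powershell
# Requires VMware PowerCLI. Adjust vCenter, credentials and Edge VM names to your environment.
Connect-VIServer -Server vc01.vcf.lab -User administrator@vsphere.local -Password VMware1!VMware1!

# Edge VMs to patch and their root credentials
$edges = @("edge01a","edge01b")
$edgeUser = "root"
$edgePass = "VMware1!VMware1!"

### DO NOT EDIT BEYOND HERE

# sed prefixes the AMD (non-EPYC) CPU check and its error exit in config.py with " #"
$edgeScript = "sed -i `'/if `"AMD`" in vendor_info and `"AMD EPYC`" not in model_name:/s/^/ #/;/self.error_exit(`"Unsupported CPU: %s`" % model_name)/s/^/ #/`' /opt/vmware/nsx-edge/bin/config.py"

# Run the sed command inside each Edge VM through VMware Tools
foreach ($edge in $edges) {
    Invoke-VMScript -VM (Get-VM $edge) -ScriptText $edgeScript -GuestUser $edgeUser -GuestPassword $edgePass
}

Disconnect-VIServer * -Confirm:$false
```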

The script is part of William’s script collection, which I highly recommend to everyone.
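To make the sed one-liner less opaque, here is a Python sketch of what it does to config.py: every line containing the AMD vendor check or the matching `error_exit` call gets " #" prepended and thus becomes a comment. It uses plain substring matching instead of sed's regular expressions, and the sample input in the test is made up; the real config.py is not reproduced here.

```python
# The two source lines targeted by the sed expression in the script above.
CPU_CHECK = 'if "AMD" in vendor_info and "AMD EPYC" not in model_name:'
CPU_EXIT = 'self.error_exit("Unsupported CPU: %s" % model_name)'


def comment_out_cpu_check(source: str) -> str:
    """Mimic the sed call: prefix every line containing either pattern with ' #'."""
    patched = []
    for line in source.splitlines(keepends=True):
        if CPU_CHECK in line or CPU_EXIT in line:
            line = " #" + line  # same replacement as sed's s/^/ #/
        patched.append(line)
    return "".join(patched)
```

All other lines pass through unchanged, which is why the Edge simply skips the CPU check afterwards.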

VCF Setup

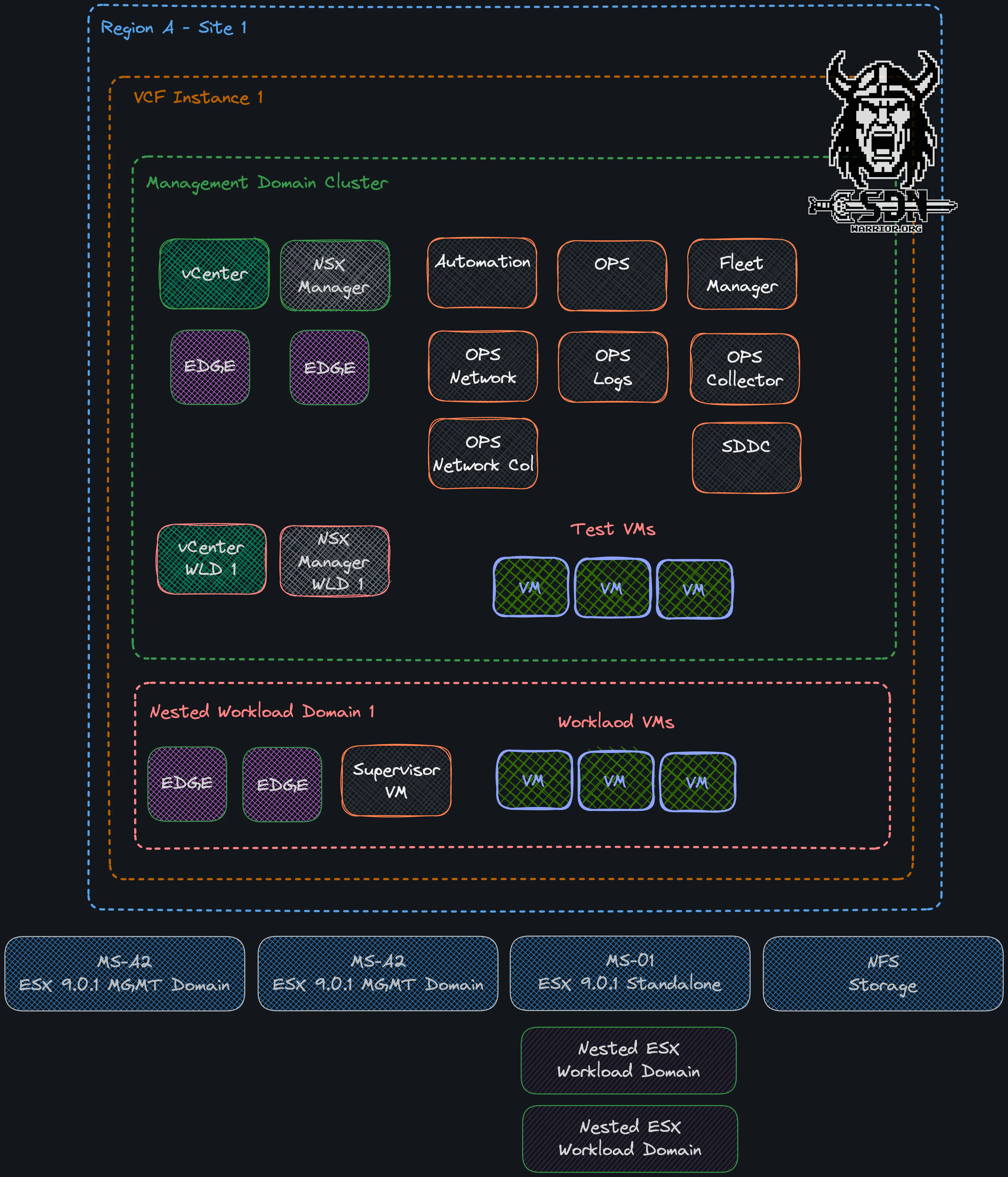

Now we come to the exciting part. With all the workarounds in place and the deployment completed successfully, what does my design actually look like? I think that’s what most readers are interested in.

I currently have three physical hosts in use for my full-blown VCF 9 setup. Two MS-A2s form my management domain, and one MS-01 is a standalone host (without memory tiering, but with both P-cores and E-cores enabled; that workaround is described separately and has nothing directly to do with the MS-A2).

Why do I need the standalone host? Quite simply, it runs two nested ESX servers that form my workload domain. Since the workload domain requires significantly fewer resources (in a lab), a single MS-01 can easily host it.

Since a picture is worth a thousand words, I have created a design drawing. It is based on Broadcom’s official design blueprints.

Lab Design

And here are the components deployed in the Management Domain and the resources they require:

| Name | vCPUs | Memory | Provisioned Space | Used Space | Role |
|---|---|---|---|---|---|
| vcfa-mgmt-7s24b | 16 | 96 GB | 529 GB | 134 GB | Automation |
| vcf09-w01-nsxa | 6 | 24 GB | 300 GB | 37 GB | NSX WLD01 |
| vcf09-nsxa | 6 | 24 GB | 300 GB | 46 GB | NSX MGMT |
| vcf09-w01-vcsa | 4 | 21 GB | 941 GB | 50 GB | VCSA WLD |
| vcf09-ni | 8 | 32 GB | 1000 GB | 63 GB | Ops for Network |
| vcf09-vcsa | 4 | 21 GB | 742 GB | 50 GB | VCSA MGMT |
| vcf09-m01-edge02 | 4 | 8 GB | 197 GB | 24 GB | Edge MGMT |
| vcf09-m01-edge01 | 4 | 8 GB | 197 GB | 22 GB | Edge MGMT |
| fleet | 4 | 12 GB | 193 GB | 113 GB | Fleet Manager |
| vcf09-ops | 4 | 16 GB | 274 GB | 23 GB | VCF Operations |
| vcf09-li-master | 4 | 8 GB | 530 GB | 182 GB | Ops for Logs |
| vcf09-sddc | 4 | 16 GB | 914 GB | 74 GB | SDDC Manager |
| vcf09-ni-col.lab | 4 | 12 GB | 250 GB | 38 GB | Ops for Network collector |
| vcfopscol | 4 | 16 GB | 264 GB | 19 GB | Cloud Proxy |
| Total | 76 | 314 GB | 6631 GB | 875 GB | |
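For anyone who wants to reuse or extend the sizing, here is the table as a Python data structure with a helper that recomputes the totals. The values are copied from the table; nothing else is assumed.

```python
# (name, vCPUs, memory GB, provisioned GB, used GB), copied from the table above
APPLIANCES = [
    ("vcfa-mgmt-7s24b", 16, 96, 529, 134),
    ("vcf09-w01-nsxa", 6, 24, 300, 37),
    ("vcf09-nsxa", 6, 24, 300, 46),
    ("vcf09-w01-vcsa", 4, 21, 941, 50),
    ("vcf09-ni", 8, 32, 1000, 63),
    ("vcf09-vcsa", 4, 21, 742, 50),
    ("vcf09-m01-edge02", 4, 8, 197, 24),
    ("vcf09-m01-edge01", 4, 8, 197, 22),
    ("fleet", 4, 12, 193, 113),
    ("vcf09-ops", 4, 16, 274, 23),
    ("vcf09-li-master", 4, 8, 530, 182),
    ("vcf09-sddc", 4, 16, 914, 74),
    ("vcf09-ni-col.lab", 4, 12, 250, 38),
    ("vcfopscol", 4, 16, 264, 19),
]


def totals(rows):
    """Sum the vCPU, memory, provisioned and used columns over all rows."""
    return tuple(sum(row[i] for row in rows) for i in range(1, 5))


if __name__ == "__main__":
    vcpus, memory, provisioned, used = totals(APPLIANCES)
    print(f"{vcpus} vCPUs, {memory} GB RAM, {provisioned} GB provisioned, {used} GB used")
```

The result matches the Total row: 76 vCPUs, 314 GB of memory, 6631 GB provisioned and 875 GB used.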

CPU resources

Wow, that’s a lot of appliances and a lot of resources. First, the good news: when I turn off the automation appliance, the entire setup runs smoothly and quickly on a single MS-A2.

With automation turned on, however, vCenter times out on a single host and many components no longer work properly. Even spread across both hosts, and without any test VMs in the management domain, I already have a vCPU overcommitment of 2.4:1, so the actual bottleneck is neither RAM nor storage but the CPU.

The recommendation is to overcommit a management domain by no more than 2:1. If I move the whole management domain to a single host, the overcommitment rises to 4.75:1 with automation and 3.75:1 without it. So it’s no surprise that a single host only works properly without automation.
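The overcommitment ratios above can be reproduced with a few lines of Python. The sketch assumes 16 physical cores per MS-A2, which is not stated explicitly but is what the quoted ratios imply (76 vCPUs / 32 cores ≈ 2.4), and it counts cores rather than SMT threads.

```python
# Assumption: 16 physical cores per MS-A2 (implied by the ratios in the text).
CORES_PER_HOST = 16
TOTAL_VCPUS = 76        # sum of the vCPU column in the table
AUTOMATION_VCPUS = 16   # vcfa-mgmt-7s24b
GUIDELINE = 2.0         # recommended maximum vCPU:core ratio for a management domain


def overcommit(vcpus: int, hosts: int, cores_per_host: int = CORES_PER_HOST) -> float:
    """Return the vCPU-to-physical-core ratio for the given number of hosts."""
    return vcpus / (hosts * cores_per_host)


if __name__ == "__main__":
    scenarios = {
        "2 hosts, with automation": overcommit(TOTAL_VCPUS, 2),
        "1 host, with automation": overcommit(TOTAL_VCPUS, 1),
        "1 host, without automation": overcommit(TOTAL_VCPUS - AUTOMATION_VCPUS, 1),
    }
    for label, ratio in scenarios.items():
        status = "within" if ratio <= GUIDELINE else "above"
        print(f"{label}: {ratio:.2f}:1 ({status} the {GUIDELINE:.0f}:1 guideline)")
```

It yields 2.375, 4.75 and 3.75, matching the figures above; all three are above the 2:1 guideline, the two-host case only slightly.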

Storage resources

For storage, as usual, I use NFS 3 shares from my self-built Unraid NAS, which is connected with 2x 10 Gb/s. All VMs are thin-provisioned, and I have set up two shares with 4 TB of NVMe storage each. The VMs of the management domain go on the first share, and the VMs of the workload domain on the second.

Because the NAS has two 10 Gb/s links and I use two VLANs for NFS, the management domain and the workload domain each take a different physical path to the storage. Each can therefore use the full 10 Gb/s without slowing the other down.

The actual storage usage of less than one terabyte is not much. If space ever becomes scarce, I also have a third 4 TB share that I can attach.
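Thin provisioning is what makes a single 4 TB share sufficient for the management domain. The Python check below uses the totals from the table and treats one share as 4000 GB usable (an assumption; the actual usable size may differ).

```python
PROVISIONED_GB = 6631   # total "Provisioned Space" from the table
USED_GB = 875           # total "Used Space" from the table
SHARE_GB = 4000         # assumption: one 4 TB share taken as 4000 GB usable


def thin_provisioning_check(provisioned_gb: float, used_gb: float, share_gb: float) -> dict:
    """Compare thin (actually used) and thick (fully provisioned) space against one share."""
    return {
        "used_vs_provisioned": used_gb / provisioned_gb,
        "share_utilization_thin": used_gb / share_gb,
        "thick_fits_on_share": provisioned_gb <= share_gb,
    }


if __name__ == "__main__":
    result = thin_provisioning_check(PROVISIONED_GB, USED_GB, SHARE_GB)
    for key, value in result.items():
        print(f"{key}: {value:.1%}" if isinstance(value, float) else f"{key}: {value}")
```

Only about 13% of the provisioned space is actually written, which fills roughly 22% of one share; thick-provisioned, the same VMs would not fit on it.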

RAM resources

My MS-A2s are each equipped with a 128 GB DDR5 kit, and I selected a memory tiering factor of 200%. This means that each ESX host has a theoretical 384 GB of memory: 128 GB of DRAM plus a 256 GB NVMe tier. However, I am somewhat surprised that no Tier 1 memory (the NVMe tier) is currently being used in my management domain.

RAM usage in Management Domain

Let’s take a closer look. In vCenter, you can see that I currently have a Tier 0 RAM allocation of about 188 GB, a large part of which comes from automation. But even with automation running, physical Tier 0 RAM is left over, which is why Tier 1 RAM is shown as -1 MB everywhere in the screenshot.

Without the operation appliance, my RAM utilization is 130 GB, so I don’t strictly need the factor of 200%. I chose it anyway because, among other things, I plan to deploy SSP in the future, and SSP requires 2x16 vCPUs and 64 GB of RAM, another little resource hog. The extra headroom lets me run everything on one host, which matters for future lifecycle operations. In addition, another nested workload domain is planned, which will bring yet another vCenter with it.
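The reasoning behind the 200% headroom can be sketched as a simple capacity model in Python: fill DRAM (Tier 0) first and spill the rest into the NVMe tier (Tier 1). This is a deliberate simplification, not how ESX actually places pages (it tiers by page activity and reserves memory for itself). It also assumes that the ~188 GB shown in vCenter is the cluster-wide allocation and splits evenly across both hosts, and it adds the 64 GB of the planned SSP.

```python
def tier_split(allocated_gb: float, dram_gb: float, nvme_tier_gb: float) -> tuple[float, float]:
    """Capacity-only model: fill DRAM (Tier 0) first, put the rest in the NVMe tier (Tier 1)."""
    tier0 = min(allocated_gb, dram_gb)
    tier1 = allocated_gb - tier0
    if tier1 > nvme_tier_gb:
        raise ValueError("allocation exceeds DRAM plus NVMe tier")
    return tier0, tier1


if __name__ == "__main__":
    DRAM_GB, NVME_TIER_GB = 128, 256  # per MS-A2 with TierNvmePct = 200
    ALLOCATED_GB = 188                # approximate allocation shown in vCenter (assumed cluster-wide)
    SSP_GB = 64                       # memory of the planned SSP deployment
    print("two hosts, per host:", tier_split(ALLOCATED_GB / 2, DRAM_GB, NVME_TIER_GB))
    print("one host:", tier_split(ALLOCATED_GB, DRAM_GB, NVME_TIER_GB))
    print("one host + SSP:", tier_split(ALLOCATED_GB + SSP_GB, DRAM_GB, NVME_TIER_GB))
```

Spread over two hosts, nothing lands in Tier 1, which fits the -1 MB in vCenter. On a single host, about 60 GB would spill into the NVMe tier, or about 124 GB with SSP added, both still well within 256 GB.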

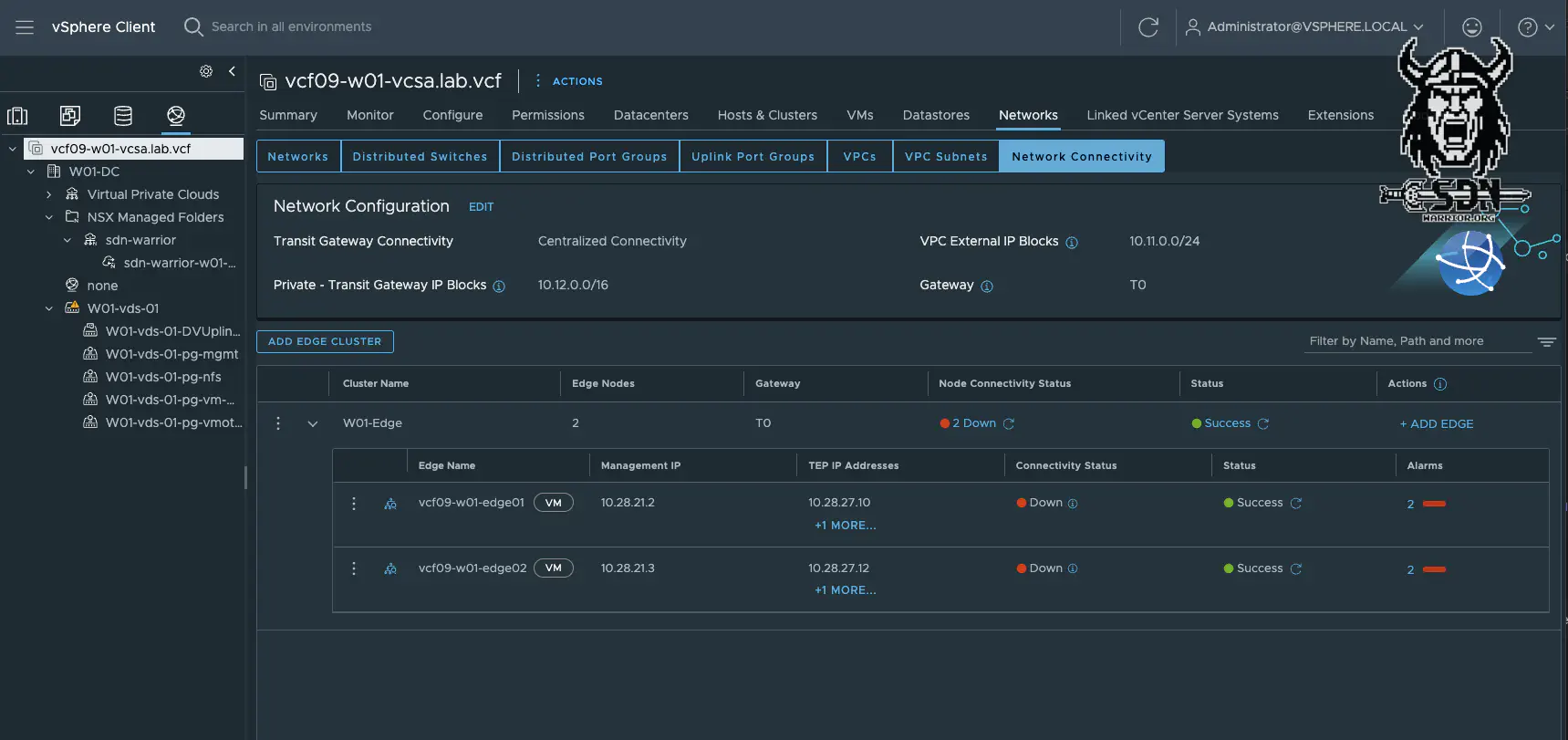

VCF 9.0.1 NSX Edge Workaround

My colleague Christian and I ran into an unexpected error. If, like us, you don’t run your lab 24/7, the uplink port groups of the Edge VMs can get deleted. This should only happen when the environment is running and the port groups have gone 24 hours without a connected Edge VM.

What is the result? Well, a huge mountain of error messages. The Edge VMs no longer have an uplink or a TEP network, meaning that all north-south communication is dead.

NSX Edge trouble

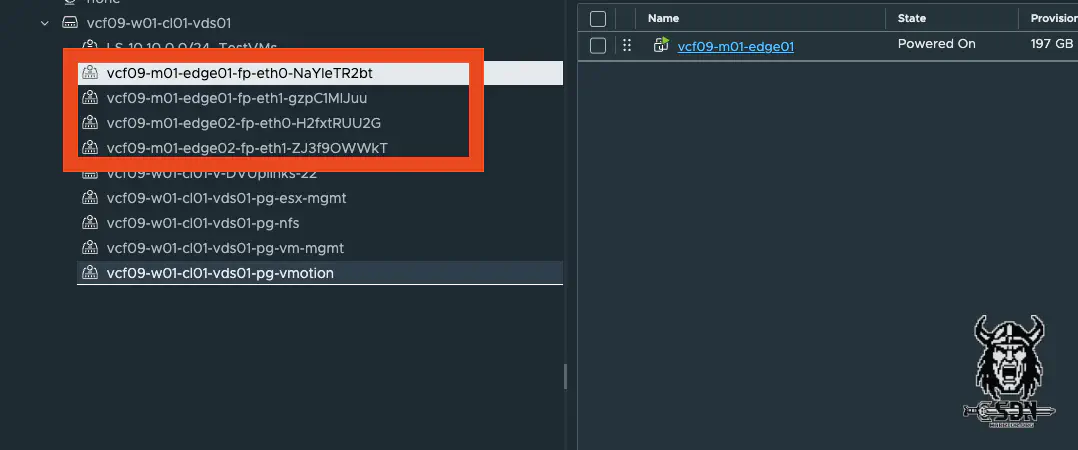

But first, let’s take a step back. In VCF 9, a separate trunk port group is created for each Fastpath interface of the Edge VM. With two edges, each with two uplinks, you therefore have four port groups.

NSX Edge Uplinks

If you take a closer look at the port groups, you will see that they are completely normal trunk port groups. To fix the error, simply create two new trunk port groups and mind the teaming policy: trunk 1 with uplink 1 active and uplink 2 standby, and the reverse for trunk 2. Then assign these port groups to Adapter 1 and Adapter 2 of the Edge VM in vCenter. Apparently, only Edge VMs deployed via vCenter are affected by this error.
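The teaming rule is easy to get backwards, so here is a plain-data Python sketch of the two port groups with mirrored failover order. It does not call any vCenter API; it only spells out what goes into the Teaming and failover settings of each port group. The port group and uplink names (`uplink1`, `uplink2`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrunkPortGroup:
    """Plain description of a trunk port group's failover order (no vCenter API involved)."""
    name: str
    active: tuple[str, ...]
    standby: tuple[str, ...]


def mirrored_trunks(prefix: str, uplinks: tuple[str, str]) -> list[TrunkPortGroup]:
    """Two trunk port groups with opposite active/standby uplinks."""
    first, second = uplinks
    return [
        TrunkPortGroup(f"{prefix}-trunk1", active=(first,), standby=(second,)),
        TrunkPortGroup(f"{prefix}-trunk2", active=(second,), standby=(first,)),
    ]


if __name__ == "__main__":
    # Hypothetical names; use the port group and uplink names of your own VDS.
    for pg in mirrored_trunks("edge-uplink", ("uplink1", "uplink2")):
        print(f"{pg.name}: active={pg.active}, standby={pg.standby}")
```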

Conclusion

With the new setup, I am prepared for all eventualities. I have enough room in my physical lab to create additional nested labs, and I have a complete solution lab where I can test all aspects of VCF 9. Best of all, I only need three physical hosts: my whole lab draws about 500 watts, of which about 200 watts go to always-on equipment, so the VCF 9 setup itself accounts for about 300 watts. Not bad at all, to be honest. On top of that, I still have five more MS-01s that I can use to test things alongside VCF 9.