VCF Import Tool - Run VCF with NFS as Principal Storage

Importing a vSphere cluster with NFS v3 as principal storage without losing support.


2025-02-23 16:00 +0100

Introduction

VCF is a powerful platform designed to simplify the deployment of vSphere, NSX and the Aria product family. This is both a blessing and a curse: Cloud Builder and SDDC Manager significantly simplify deployment, but they also take away a certain amount of flexibility. In addition, not every customer starts on a greenfield site. Another point is the resource requirements, which affect us home labbers in particular. Many want to prepare for the new VCP exams and are now forced to deploy VCF in their homelab. There are already many solutions for this, such as the Holodeck, the Automated Lab Deployment Script, or the Automated VMware Cloud Foundation Import Lab Deployment script (both from the great William Lam), but in my view they are too far removed from the kind of deployment a customer will experience. I will therefore show several ways to build VCF as close as possible to a real deployment without needing the real resources. In this article, I show how to convert an existing vSphere cluster with the VCF Import Tool. More articles about VCF deployment will follow.

Why use the import tool?

As the title indicates, the import tool allows a VCF deployment with NFS as principal storage, which means that we do not need vSAN. As much as I like vSAN, running it in a homelab always means additional resource consumption: I need fast networking and local NVMe drives, and the overall performance is still not great because I usually build my labs nested inside my homelab. In addition, consumer NVMe drives wear out very quickly under vSAN. Thanks to VCF 5.2 and the VCF Import Tool, we can get around this relatively easily. Of course, there are a few limitations: NFS v3 cannot be used as principal storage in every domain type, as the following table shows.

| Storage Type | Consolidated Workload Domain | Management Domain | VI Workload Domain |
|---|---|---|---|
| Principal Storage | No | Only for a management domain converted from vSphere infrastructure | Yes |
| Supplemental Storage | Yes | Yes | Yes |

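What does the table tell us? NFS v3 can be principal storage for the management domain only if that domain was converted from an existing vSphere infrastructure, while VI workload domains can use it as principal storage anyway. This makes it possible to build a standard VCF design with NFS v3 and without vSAN, and the best part is that the whole thing is officially supported rather than a homemade solution.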

Deployment and preparation of the management domain

First of all, we need to prepare our future management domain. For this, I deploy four virtual ESXi servers, using the Flings OVA from William Lam and adapting it for my lab. My four nested ESXi servers have the following hardware:

- 10 vCPUs

- 45 GB RAM

- 16 GB Thin Storage

- 2 Network Cards
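To size the physical lab host, the per-VM values above can simply be multiplied out. A minimal Python sketch; `NestedHost` and `cluster_footprint` are hypothetical names used only for illustration. The result is what the nested VMs request from the physical host; everything deployed into the cluster later, such as SDDC Manager and the NSX Managers, runs inside these nested hosts and has to fit into that budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NestedHost:
    """Virtual hardware of one nested ESXi VM (values from the list above)."""
    vcpus: int
    ram_gb: int
    disk_gb: int  # thin provisioned: the upper bound, not the space actually consumed


def cluster_footprint(host: NestedHost, count: int) -> dict:
    """Total resources that `count` identical nested hosts request from the physical host."""
    return {
        "vcpus": host.vcpus * count,
        "ram_gb": host.ram_gb * count,
        "disk_gb_max": host.disk_gb * count,
    }


if __name__ == "__main__":
    print(cluster_footprint(NestedHost(vcpus=10, ram_gb=45, disk_gb=16), count=4))
    # {'vcpus': 40, 'ram_gb': 180, 'disk_gb_max': 64}
```

The NFS datastore that serves as principal storage is not included, since it lives outside the nested hosts.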

NTP and DNS, including reverse lookup, must be available and working. In addition, all VLANs, gateways and, if necessary, firewall rules must be in place. To keep this article short, I won’t go into this part in detail; the required networks are listed in the VCF Guide.
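Because forward and reverse lookups both have to work, it is worth verifying every planned name before starting. A minimal Python sketch that uses only the standard library's `socket.gethostbyname` and `socket.gethostbyaddr`; `check_dns` is a hypothetical helper, and the resolver functions are parameters only so that the logic can be tested without a DNS server.

```python
from __future__ import annotations

import socket
from typing import Callable


def check_dns(
    fqdn: str,
    expected_ip: str,
    forward: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], tuple] = socket.gethostbyaddr,
) -> list[str]:
    """Check the A record and the PTR record of one host; return a list of problems."""
    problems = []
    try:
        resolved = forward(fqdn)
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        # Stop here: without a forward record there is nothing to compare against.
        return [f"{fqdn}: forward lookup failed ({exc})"]
    if resolved != expected_ip:
        problems.append(f"{fqdn}: resolves to {resolved}, expected {expected_ip}")

    try:
        hostname, aliases, _ = reverse(expected_ip)  # (hostname, aliaslist, ipaddrlist)
    except OSError as exc:  # socket.herror is a subclass of OSError as well
        problems.append(f"{expected_ip}: reverse lookup failed ({exc})")
        return problems
    # Compare case-insensitively and ignore a trailing dot on the returned name.
    names = {name.lower().rstrip(".") for name in [hostname, *aliases]}
    if fqdn.lower().rstrip(".") not in names:
        problems.append(f"{expected_ip}: PTR points to {hostname}, expected {fqdn}")
    return problems
```

For example, `check_dns("vcf03-m01-nsx01a.lab.home", "10.28.0.11")` (the first NSX Manager from my spec further down) should return an empty list once both records exist. Run it from a machine that uses the same DNS servers as the lab.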

Of course, there are also a few restrictions to consider here.

- Cluster - Storage:
  - The default cluster must be one of vSAN, NFS v3, VMFS-FC, or VMFS-FCoE (only supported with VCF 5.2.1 and later). NFS 4.1, vVols, and native iSCSI are not supported.
  - Clusters cannot use stretched vSAN (and we will not be using vSAN anyway).
  - VCF 5.2: All clusters (vSAN, NFS v3, FC) must have at least 4 nodes.
  - VCF 5.2.1.x: When using NFS or FC with vLCM images, the default cluster must have at least 2 nodes. When using NFS or FC with vLCM baselines, the default cluster must have at least 4 nodes.

- Cluster - Network:
  - vCenter Server must not have an existing NSX instance registered.
  - LACP: supported with VCF Import Tool 5.2.1.2 and SDDC Manager 5.2.1.1; other versions do not support it.
  - Use vSphere Distributed Switches only. Standard or Cisco virtual switches are not supported.
  - VMkernel IP addresses must be statically assigned.
  - Multiple VMkernel adapters for a single traffic type (vSAN, vMotion) are not supported.
  - VCF Import Tool 5.2.1.2 with SDDC Manager 5.2.1.1: ESXi hosts can have a different number of physical uplinks (minimum 2) assigned to a vSphere distributed switch. Each uplink must be at least 10 Gb.
  - Earlier versions: ESXi hosts must have the same number of physical uplinks (minimum 2) assigned to a vSphere distributed switch. Each uplink must be at least 10 Gb.
  - vSphere distributed switch teaming policies must match VMware Cloud Foundation standards.
  - A dedicated vMotion network must be configured.

- Cluster - Compute:
  - Clusters must not be VxRail managed.
  - Clusters that use vSphere Configuration Profiles are not supported.
  - All clusters must be running vSphere 8.0 U3 or later.
  - DRS must be fully automated.
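The storage and node-count rules above are easy to misread, so here is a minimal Python sketch that encodes them. It is not part of the VCF Import Tool, whose own `check` action is authoritative. The function names and storage labels such as `"nfs3"` are hypothetical, and combinations the list does not cover (for example vSAN or FCoE on 5.2.1) are conservatively treated as needing four hosts.

```python
from __future__ import annotations

# Hypothetical labels for the principal storage types listed above.
SUPPORTED_STORAGE = {"vsan", "nfs3", "vmfs-fc", "vmfs-fcoe"}


def version_tuple(version: str) -> tuple[int, ...]:
    """'5.2.1.1' -> (5, 2, 1, 1), so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def min_default_cluster_nodes(vcf_version: str, storage: str, vlcm_images: bool) -> int:
    """Minimum host count for the default cluster, following the list above."""
    if version_tuple(vcf_version) >= (5, 2, 1) and storage in {"nfs3", "vmfs-fc"} and vlcm_images:
        return 2
    # VCF 5.2, vLCM baselines, and combinations the list does not spell out:
    # assume the conservative 4-node minimum.
    return 4


def check_default_cluster(vcf_version: str, storage: str, vlcm_images: bool, hosts: int) -> list[str]:
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    if storage not in SUPPORTED_STORAGE:
        problems.append(f"principal storage '{storage}' is not supported")
        return problems
    if storage == "vmfs-fcoe" and version_tuple(vcf_version) < (5, 2, 1):
        problems.append("VMFS on FCoE requires VCF 5.2.1 or later")
    minimum = min_default_cluster_nodes(vcf_version, storage, vlcm_images)
    if hosts < minimum:
        problems.append(f"default cluster has {hosts} hosts, at least {minimum} required")
    return problems
```

My lab corresponds to `check_default_cluster("5.2.1.1", "nfs3", vlcm_images=True, hosts=4)`, which returns an empty list; with vLCM baselines instead of images, four hosts would be the minimum.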

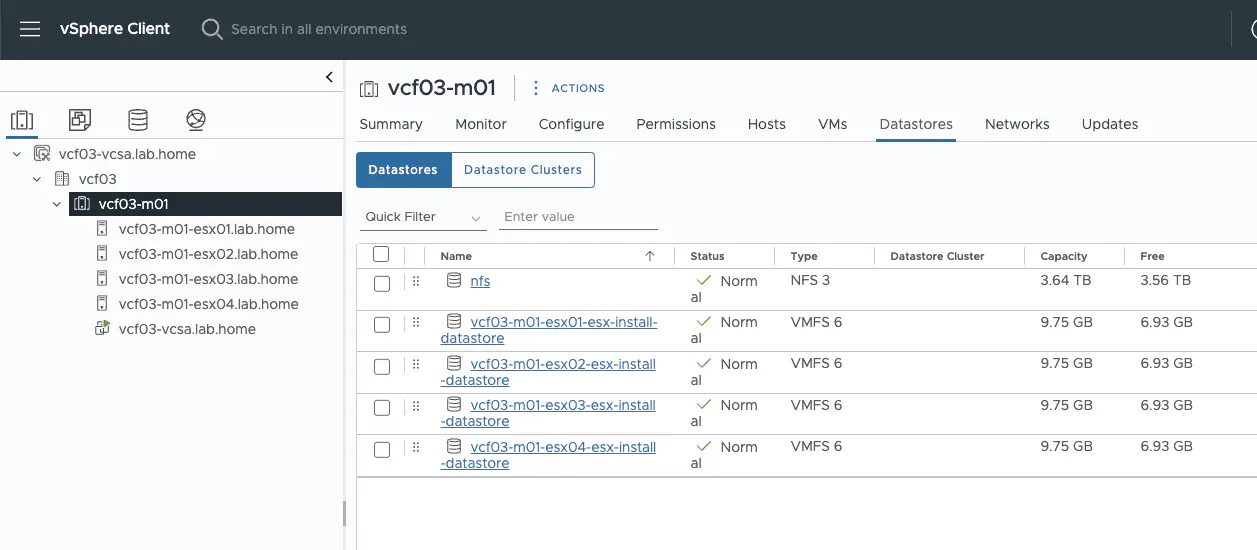

So after I have prepared my future management domain, it should look similar to the screenshot below.

ESXi Cluster with NFS Storage (click to enlarge)

Convert the cluster to a management domain

After I have prepared my cluster, the real fun can begin. First, I need to download the SDDC Manager appliance, the VCF Import Tool and the matching NSX install bundle. In my case, these were the following software versions:

- VCF-SDDC-Manager-Appliance-5.2.1.1-24397777.ova

- VMware Software Install Bundle - NSX_T_MANAGER 4.2.1.0

- VCF Import Tool 5.2.1.2

The software can be found in the Broadcom support portal under Tools and Drivers in the corresponding VCF 5.2.1 category. First, I deploy the SDDC Manager appliance to my cluster. SDDC Manager will later deploy the NSX Managers onto the same network and storage that it runs on itself; network and storage cannot be customized during the NSX Manager deployment. After that, I create a JSON file for the future NSX cluster. Here is my example file:

{
  "license_key": "XXXX-XXXX-XXXX-XXXX-XXXX",
  "form_factor": "medium",
  "admin_password": "xxx",
  "install_bundle_path": "/nfs/vmware/vcf/nfs-mount/bundle/bundle-133764.zip",
  "cluster_ip": "10.28.0.10",
  "cluster_fqdn": "vcf03-m01-nsx01.lab.home",
  "manager_specs": [
    {
      "fqdn": "vcf03-m01-nsx01a.lab.home",
      "name": "vcf03-m01-nsx01a",
      "ip_address": "10.28.0.11",
      "gateway": "10.28.0.1",
      "subnet_mask": "255.255.255.0"
    },
    {
      "fqdn": "vcf03-m01-nsx01b.lab.home",
      "name": "vcf03-m01-nsx01b",
      "ip_address": "10.28.0.14",
      "gateway": "10.28.0.1",
      "subnet_mask": "255.255.255.0"
    },
    {
      "fqdn": "vcf03-m01-nsx01c.lab.home",
      "name": "vcf03-m01-nsx01c",
      "ip_address": "10.28.0.15",
      "gateway": "10.28.0.1",
      "subnet_mask": "255.255.255.0"
    }
  ]
}
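Before uploading the file, a quick sanity check can catch typos in the addressing. A minimal Python sketch, not an official schema validator: the required keys are simply the ones used in my example file, `validate_nsx_spec` is a hypothetical helper, and the expected count of three managers is an assumption (pass 1 if you apply the single-node tip that follows). It checks that each manager IP and the cluster IP fall into the subnet derived from gateway and mask, and that no address is used twice.

```python
from __future__ import annotations

import ipaddress
import json
import sys

# Keys taken from the example spec above; this is not an official schema.
REQUIRED_KEYS = {"license_key", "form_factor", "admin_password", "install_bundle_path",
                 "cluster_ip", "cluster_fqdn", "manager_specs"}
REQUIRED_MANAGER_KEYS = {"fqdn", "name", "ip_address", "gateway", "subnet_mask"}


def validate_nsx_spec(spec: dict, expected_managers: int = 3) -> list[str]:
    """Return a list of problems in an NSX deployment spec; an empty list means none found."""
    missing = sorted(REQUIRED_KEYS - set(spec))
    if missing:
        return [f"missing key: {key}" for key in missing]

    problems = []
    managers = spec["manager_specs"]
    if len(managers) != expected_managers:
        problems.append(f"expected {expected_managers} manager_specs, found {len(managers)}")

    cluster_ip = ipaddress.ip_address(spec["cluster_ip"])
    used_ips = {cluster_ip}
    for mgr in managers:
        absent = sorted(REQUIRED_MANAGER_KEYS - set(mgr))
        if absent:
            problems.append(f"{mgr.get('name', '<unnamed>')}: missing {', '.join(absent)}")
            continue
        # Derive the subnet from gateway and mask; strict=False because the
        # gateway address has host bits set.
        subnet = ipaddress.ip_network(f"{mgr['gateway']}/{mgr['subnet_mask']}", strict=False)
        ip = ipaddress.ip_address(mgr["ip_address"])
        if ip not in subnet:
            problems.append(f"{mgr['name']}: {ip} is outside {subnet}")
        if cluster_ip not in subnet:
            problems.append(f"{mgr['name']}: cluster_ip {cluster_ip} is outside {subnet}")
        if ip in used_ips:
            problems.append(f"{mgr['name']}: {ip} is already used")
        used_ips.add(ip)
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        for problem in validate_nsx_spec(json.load(fh)) or ["no problems found"]:
            print(problem)
```

Saved under a name of your choice, the script takes the path of the spec file as its only argument. It does not check whether the FQDNs resolve; that is a separate DNS check.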

Tip: Customizing the NSX cluster size

First, I log in to SDDC Manager via SSH as the vcf user and switch to root. Then I append the following line to the application-prod.properties file and restart the domainmanager service.

cat >> /etc/vmware/vcf/domainmanager/application-prod.properties << EOF
nsxt.manager.cluster.size=1
EOF

systemctl restart domainmanager.service
watch 'systemctl status domainmanager.service'
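One caveat with the `cat >>` approach: running it a second time appends a second copy of the line. If you script this step, an idempotent variant is tidier. A minimal Python sketch; `set_property` is a hypothetical helper, not part of SDDC Manager, and it assumes the file uses plain `key=value` lines. It replaces an existing entry for the key or appends it once, and only rewrites the file if something changed.

```python
from __future__ import annotations

from pathlib import Path


def set_property(path: Path, key: str, value: str) -> bool:
    """Set key=value in a simple .properties file; return True if the file was changed."""
    lines = path.read_text(encoding="utf-8").splitlines() if path.exists() else []
    wanted = f"{key}={value}"
    new_lines, found = [], False
    for line in lines:
        if line.split("=", 1)[0].strip() == key:
            if not found:
                new_lines.append(wanted)  # replace the first occurrence in place
            found = True  # later duplicates of the same key are dropped
        else:
            new_lines.append(line)  # comments and other keys stay untouched
    if not found:
        new_lines.append(wanted)
    if new_lines == lines:
        return False
    path.write_text("\n".join(new_lines) + "\n", encoding="utf-8")
    return True
```

On SDDC Manager this would be called as root with `Path("/etc/vmware/vcf/domainmanager/application-prod.properties")`, key `"nsxt.manager.cluster.size"` and value `"1"`, followed by the same `systemctl restart domainmanager.service` as above.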

After the domainmanager service has restarted, the change should take effect and SDDC Manager can deploy a single-node NSX cluster. I did not use this in my current test, but I have often done VCF deployments with a single-node NSX cluster in the lab in the past.

Upload Software and run precheck

The next step is relatively simple: I use WinSCP to upload the import tool, the NSX install bundle and the NSX deployment JSON to my SDDC Manager. The install bundle goes into the directory specified by install_bundle_path in the NSX spec. In my case, that is

/nfs/vmware/vcf/nfs-mount/bundle/bundle-133764.zip

and I upload the NSX spec and the import tool to

/home/vcf

Next, uncompress the import tool and you’re ready to go.

tar xvf vcf-brownfield-import-5.2.1.2-24494579.tar.gz

To run the precheck, change to the vcf-brownfield-toolset directory and run the following command:

python3 vcf_brownfield.py check --vcenter '<my-vcenter-address>' --sso-user '<my-sso-username>'

If the precheck finds any errors, the tool generates guardrails files that describe the problem. In my case, the precheck passed cleanly, so I could start the actual conversion of my management domain.

The convert command is almost identical: the action changes from check to convert, and the domain name and the path to the NSX deployment spec are added.

python3 vcf_brownfield.py convert --vcenter '<vcenter-fqdn>' --sso-user '<sso-user>' --domain-name '<wld-domain-name>' --nsx-deployment-spec-path '<nsx-deployment-json-spec-path>'

Actions:

| Action | Additional Information |
|---|---|
| -h, --help | Shows the VCF Import Tool help. |
| -v, --version | Displays the VCF Import Tool version. |
| convert | Converts existing vSphere infrastructure into the management domain in SDDC Manager. |
| check | Checks whether a vCenter is suitable to be imported into SDDC Manager as a workload domain. |
| import | Imports a vCenter as a VI workload domain into SDDC Manager. |
| sync | Syncs an imported VI workload domain or a VI workload domain deployed from SDDC Manager. See Manage Workload Domain Configuration Drift Between vCenter Server and SDDC Manager. |
| deploy-nsx | Deploys NSX Manager as a standalone operation. See Deploy NSX Manager for Workload Domains. |
| precheck | Runs prechecks on vCenter. |

Parameters:

| Parameter | Additional Information |
|---|---|
| --vcenter | Target vCenter Server for the current operation. |
| --sso-user | SSO administrator user for the target vCenter Server. |
| --sso-password | SSO administrator password for the target vCenter Server. Used for prevalidation only. |
| --domain-name | Workload domain name to be assigned to the target environment during convert/import. |
| --nsx-deployment-spec-path | Absolute path to the NSX deployment spec JSON file. |
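The difference between check and convert can also be captured in a small command builder, for example if you want to script both calls. A minimal Python sketch: `build_command` is a hypothetical helper that only assembles the argument list shown above and does not run anything. It enforces the two convert-only parameters and the absolute spec path from the table. The vCenter FQDN in the usage line is a placeholder, and administrator@vsphere.local is just the default SSO administrator name.

```python
from __future__ import annotations

import shlex


def build_command(action: str, vcenter: str, sso_user: str,
                  domain_name: str | None = None,
                  nsx_spec_path: str | None = None) -> list[str]:
    """Assemble the vcf_brownfield.py argument list for 'check' or 'convert'."""
    if action not in ("check", "convert"):
        raise ValueError(f"this sketch only covers check and convert, not {action!r}")
    argv = ["python3", "vcf_brownfield.py", action,
            "--vcenter", vcenter, "--sso-user", sso_user]
    if action == "convert":
        if not domain_name or not nsx_spec_path:
            raise ValueError("convert needs --domain-name and --nsx-deployment-spec-path")
        if not nsx_spec_path.startswith("/"):
            raise ValueError("--nsx-deployment-spec-path must be an absolute path")
        argv += ["--domain-name", domain_name,
                 "--nsx-deployment-spec-path", nsx_spec_path]
    return argv


if __name__ == "__main__":
    # Placeholder vCenter FQDN; shlex.join quotes each argument for a POSIX shell.
    print(shlex.join(build_command("check", "vcenter.example.lab", "administrator@vsphere.local")))
```

The resulting list can be handed to `subprocess.run()` from inside the vcf-brownfield-toolset directory. Passing a list instead of a single string avoids shell-quoting surprises.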

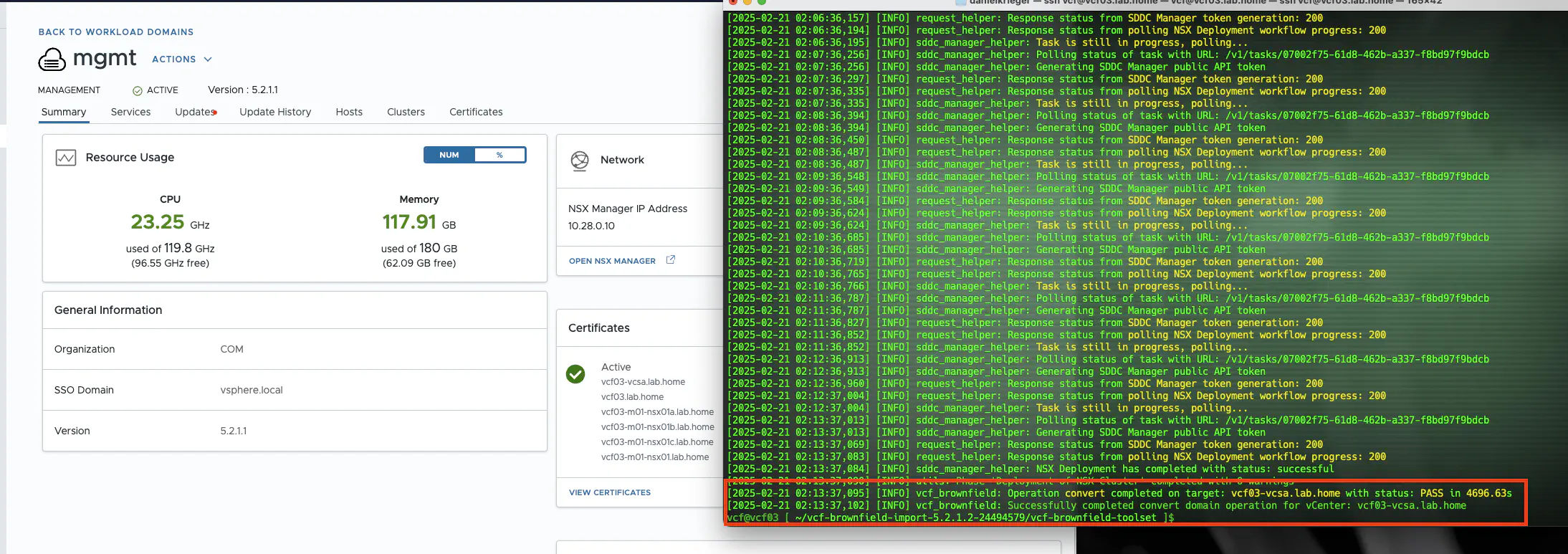

After the convert has hopefully been successful, the whole thing should now look like on my screenshot.

Convert successfully(click to enlarge)

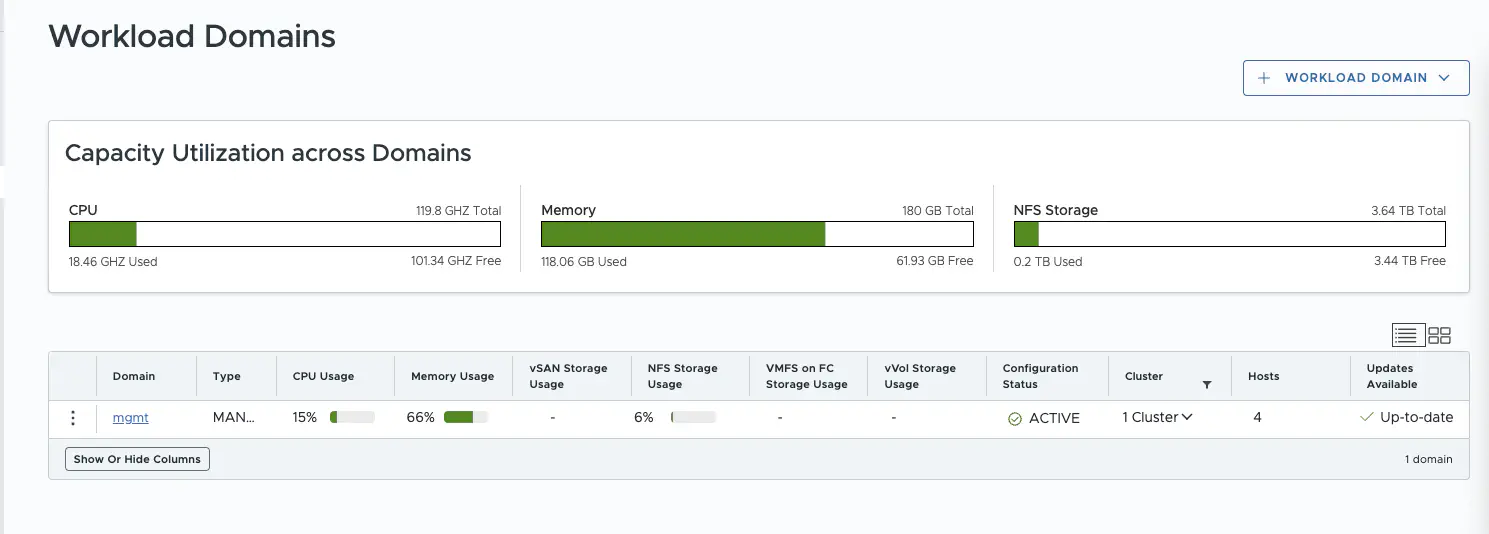

MGMT Domain with NFS Storage(click to enlarge)

Congratulations, we now have a supported management domain with NFS as principal storage. Of course, some fine-tuning is still needed. For example, no NSX Edges have been deployed yet, and the ESXi hosts have been configured as transport nodes but do not have TEP addresses yet. This has to be done manually in NSX Manager. We also still need to add licenses in SDDC Manager and set up the software repository so that we can install updates directly via SDDC Manager if necessary.

For future workload domains there are now two options: 1. importing them with the import tool (the process is analogous to the convert), or 2. deploying them normally via SDDC Manager.

Conclusion

Importing or converting existing ESXi clusters lets us deploy VCF in the lab in a resource-efficient way, and it also enables PoCs for customers who would like to test VCF but do not have vSAN ReadyNodes. The import tool in VCF 5.2 extends the possibilities of VCF and gives us more flexibility in deploying it. From my point of view, this is the right way to make VCF more widely accepted by customers. It also gives us the opportunity to become familiar with the product with fewer resources.