VCF Import Tool - Enable Overlay in an imported VCF Domain

Part 2: VCF Import Cluster with NFS and activating the overlay.


2025-03-05 21:00 +0100

Introduction

This post is a follow-up to my article “VCF Import Tool - Run VCF with NFS as principal Storage” and covers activating the overlay network after I successfully converted an ESXi cluster to a VCF management domain using the VCF Import Tool.

The VCF Import Tool still has a few limitations. Among other things, no NSX TEP interface is configured in VCF 5.2.1 after a convert or import. Without the tunnel endpoints it is not possible to use NSX overlay networks. In this post I will show which steps you have to take to prepare NSX so that we can create and use overlay networks.

Creating an IP pool

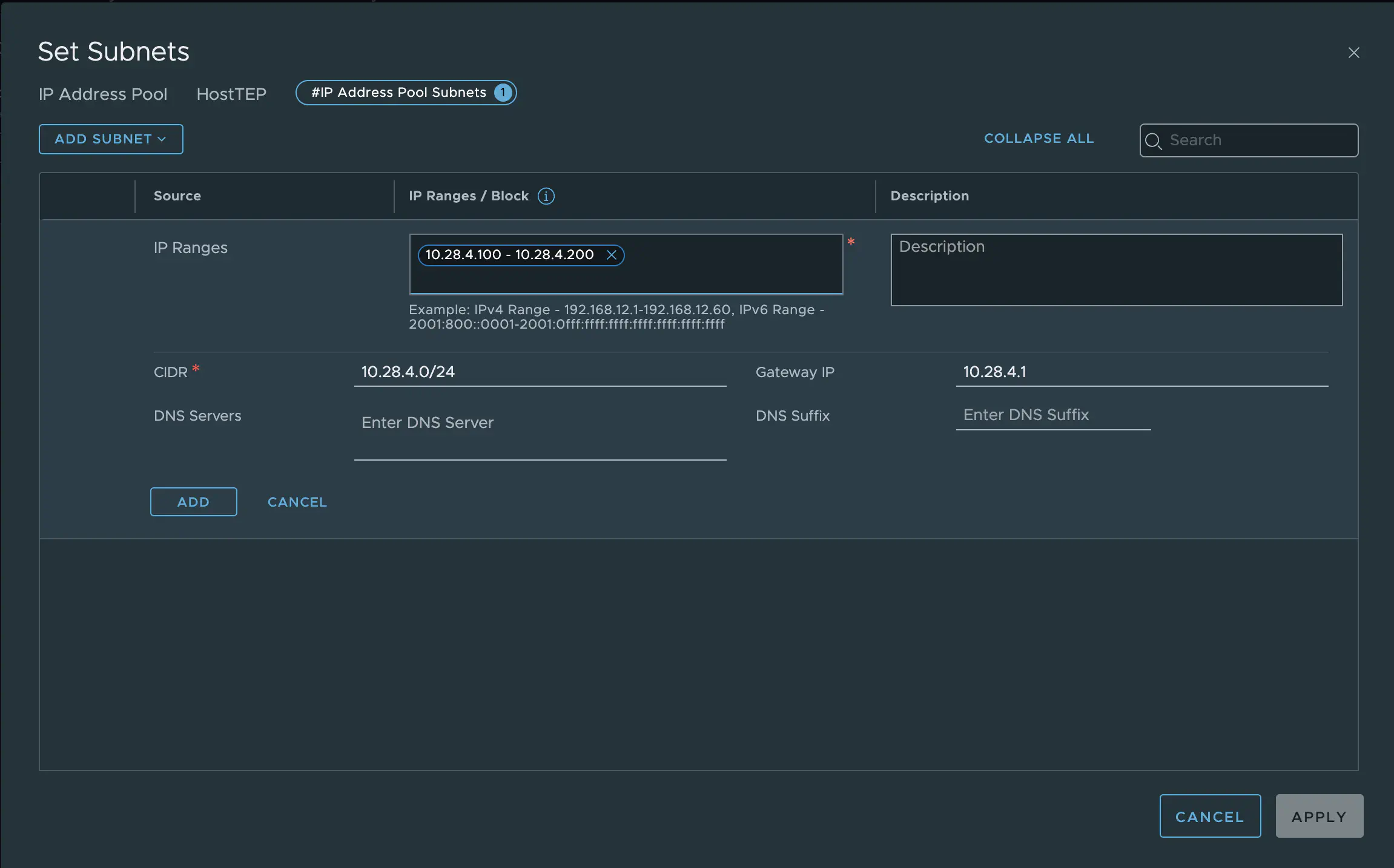

For our TEP network we can either use a DHCP server or the IP pool variant preferred by VMware. I personally find the DHCP server more flexible and easier for environments that need to grow quickly, but there is no right or wrong at this point. However, when creating the pool, you should make sure that the subnet is large enough, as it can only be changed to a limited extent once addresses have been allocated.

To create an IP pool, we go to IP Address Pools under Networking in the NSX GUI and click the ADD IP ADDRESS POOL button.

Under Subnet we click on the IP RANGES button and configure our IP range, our network CIDR and a gateway. The TEP network must be routed, as the Edge Transport Nodes normally have their TEP IP addresses in a different network and VLAN. While it is possible for the Edge Transport Nodes to be on the same VLAN and network as our Host Transport Nodes, this is a less common scenario and requires the Edge Transport Nodes to either run on hosts that are not NSX-enabled or use NSX-backed VLAN segments. I have already written a blog for Evoila about this.
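To get a feeling for how large the subnet needs to be, the sizing can be sketched with Python's standard `ipaddress` module. This is a rough estimate under my own assumptions: two TEP addresses per host (dual TEP), one address reserved for the gateway, and `10.28.4.0/24` as an example CIDR that is not taken from the lab configuration. The function name `tep_capacity` is made up for this illustration.

```python
import ipaddress


def tep_capacity(cidr: str, teps_per_host: int = 2, reserved: int = 1) -> int:
    """Return how many hosts a TEP subnet can serve.

    `reserved` counts addresses that are not available to the pool,
    for example the gateway (assumption: one address).
    """
    network = ipaddress.ip_network(cidr, strict=True)
    # Subtract the network and broadcast address plus the reserved ones.
    usable = network.num_addresses - 2 - reserved
    return max(usable, 0) // teps_per_host


# Example subnets, chosen only for illustration.
for example in ("10.28.4.0/24", "10.28.4.0/27"):
    print(example, "->", tep_capacity(example), "hosts with dual TEP")
```

A /27 looks generous for a small lab, but with two TEPs per host it runs out after 14 hosts, which is why I would rather size the pool a little too large.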

IP Pool configuration

Finally, we define a name for the IP pool and save the configuration.
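If you prefer to prepare the pool outside the GUI, the values from the dialog can be validated and arranged as a request body first. The sketch below only builds and checks the data, it does not talk to NSX. The field names (`IpAddressPoolStaticSubnet`, `cidr`, `gateway_ip`, `allocation_ranges`) follow the NSX Policy API as I understand it, so please verify them against the API guide for your NSX version. All addresses and the helper name `build_static_subnet` are assumptions for illustration.

```python
import ipaddress
import json


def build_static_subnet(cidr: str, gateway: str, start: str, end: str) -> dict:
    """Validate the IP range against the CIDR and return a subnet body."""
    net = ipaddress.ip_network(cidr)
    gw, first, last = (ipaddress.ip_address(a) for a in (gateway, start, end))
    if not all(addr in net for addr in (gw, first, last)):
        raise ValueError("gateway and range must lie inside the CIDR")
    if first > last:
        raise ValueError("range start must not be after range end")
    if first <= gw <= last:
        raise ValueError("gateway must not be part of the allocation range")
    return {
        "resource_type": "IpAddressPoolStaticSubnet",  # assumed Policy API type
        "cidr": cidr,
        "gateway_ip": gateway,
        "allocation_ranges": [{"start": start, "end": end}],
    }


# Example values, not taken from the lab.
subnet = build_static_subnet("10.28.4.0/24", "10.28.4.1",
                             "10.28.4.100", "10.28.4.199")
print(json.dumps(subnet, indent=2))
```

Checking the range before sending anything catches the classic mistakes early, such as a gateway that sits inside the allocation range.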

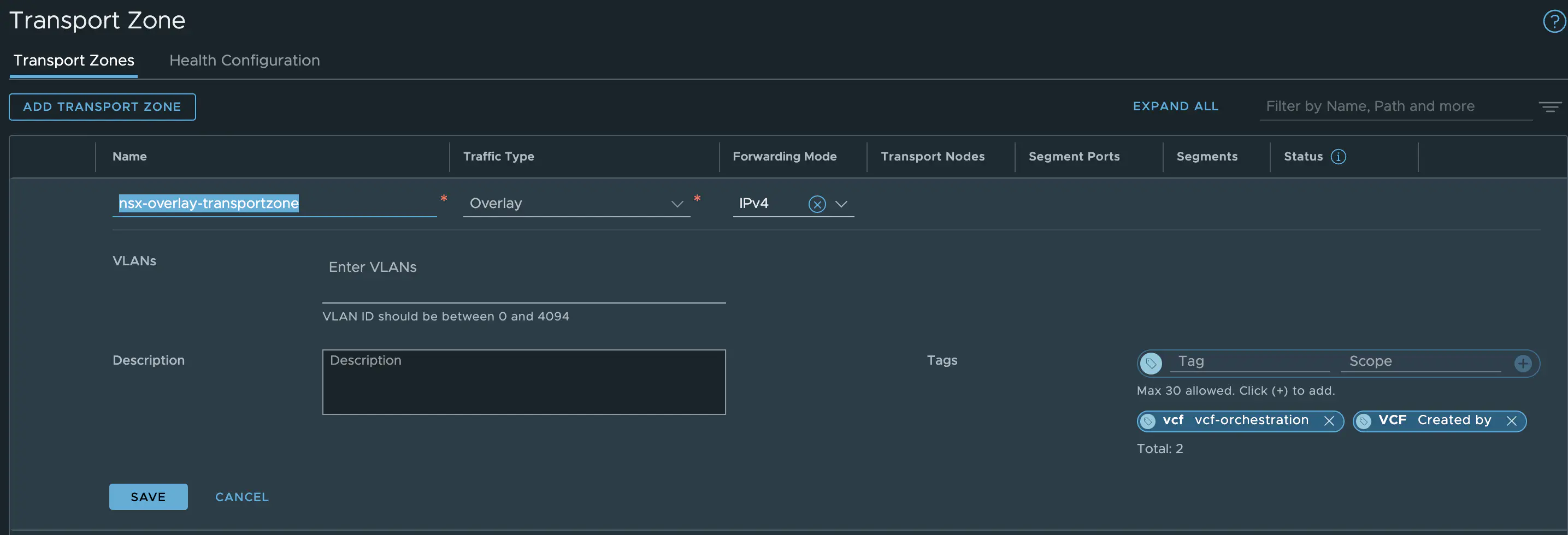

Update the default Transport Zone

In NSX, a transport zone (TZ for short) is a logical grouping of transport nodes that defines which network segments can exist on these nodes. It determines the scope of the overlay and VLAN networks and is not a security feature. There are two types of TZ: an overlay and a VLAN transport zone. For the imported or converted VCF environment, we only need to make changes to the overlay TZ. The VLAN TZ is created when the Edge Nodes are deployed via the SDDC Manager and no longer needs to be customized. VCF uses the default transport zone nsx-overlay-transportzone. This must also be assigned later in the transport node profile.

We first need to add two tags to the TZ. These tags are used internally by the SDDC Manager to identify the TZ as being used by VCF.

The transport zones can be found in the NSX GUI under System - Transport Zone. There should be exactly one overlay transport zone that also has the default flag. The following tags must be attached to the overlay transport zone:

| Tag Name | Scope |
|---|---|
| VCF | Created by |
| vcf | vcf-orchestration |

The transport zone type cannot be changed afterwards. The only change we can make here, and the one we have to make, is adding the tags.

Transport Zone configuration
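When the tags are set by a script instead of the GUI, it helps to merge them into whatever tags the transport zone already carries rather than overwriting the list. Here is a minimal sketch of that merge. NSX represents a tag as an object with a `scope` and a `tag` field; the helper name `merge_tags` and the sample tag `env: lab` are my own inventions.

```python
# The two tags from the table above, in NSX tag notation.
REQUIRED_TAGS = [
    {"scope": "Created by", "tag": "VCF"},
    {"scope": "vcf-orchestration", "tag": "vcf"},
]


def merge_tags(existing: list, required: list = REQUIRED_TAGS) -> list:
    """Return the existing tags plus any required tag that is still missing."""
    merged = list(existing)
    present = {(t.get("scope", ""), t.get("tag", "")) for t in existing}
    for tag in required:
        key = (tag["scope"], tag["tag"])
        if key not in present:
            merged.append(dict(tag))
            present.add(key)
    return merged


# Hypothetical transport zone that already has one unrelated tag
# and one of the two required tags.
current = [{"scope": "env", "tag": "lab"},
           {"scope": "Created by", "tag": "VCF"}]
for tag in merge_tags(current):
    print(f'{tag["tag"]:>4} | {tag["scope"]}')
```

The merge is idempotent, so running it a second time does not create duplicate tags.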

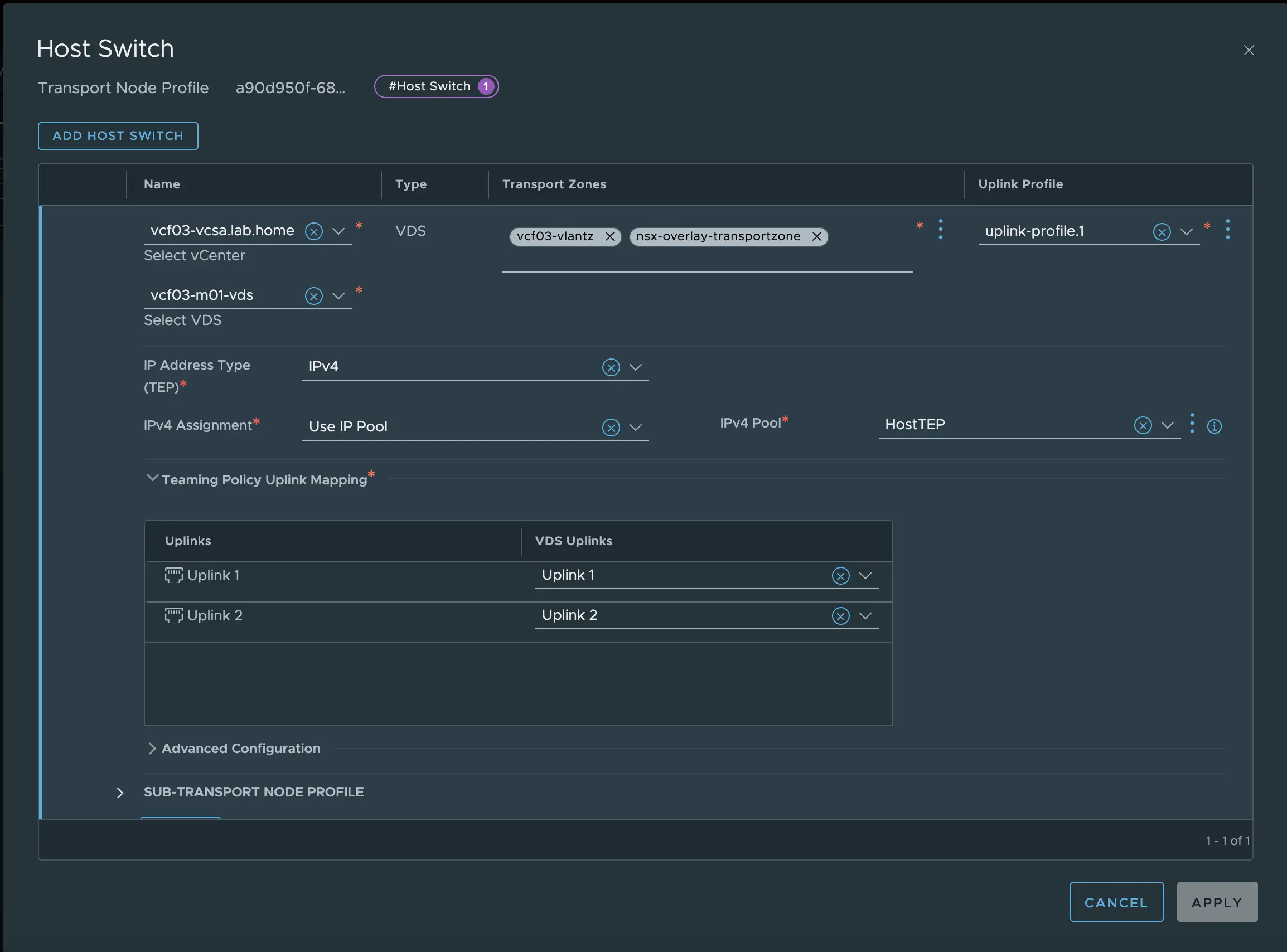

Edit the Transport Node Profiles

The next step is to customize the transport node profile so that we can use overlay networks and create NSX TEP interfaces on our ESXi servers.

Since NSX 3.1.x, the transport node profile can be found under Fabric - Hosts - Transport Node Profile. The VCF Import Tool has already created a profile for us. As is so often the case with VCF, the profile has a cryptic name that consists of an ID, the domain and the text autoconf-tnp. You can safely ignore the name. We open the profile’s host switch configuration and add the overlay transport zone. As soon as the TZ has been added, additional options for IP assignment appear, and here we select the IP pool we created earlier. We save the profile, and that completes the changes in NSX.

Transport Node Profile configuration

After everything has been saved, NSX starts reconfiguring the cluster and assigns TEP IP addresses to each of our ESXi servers.

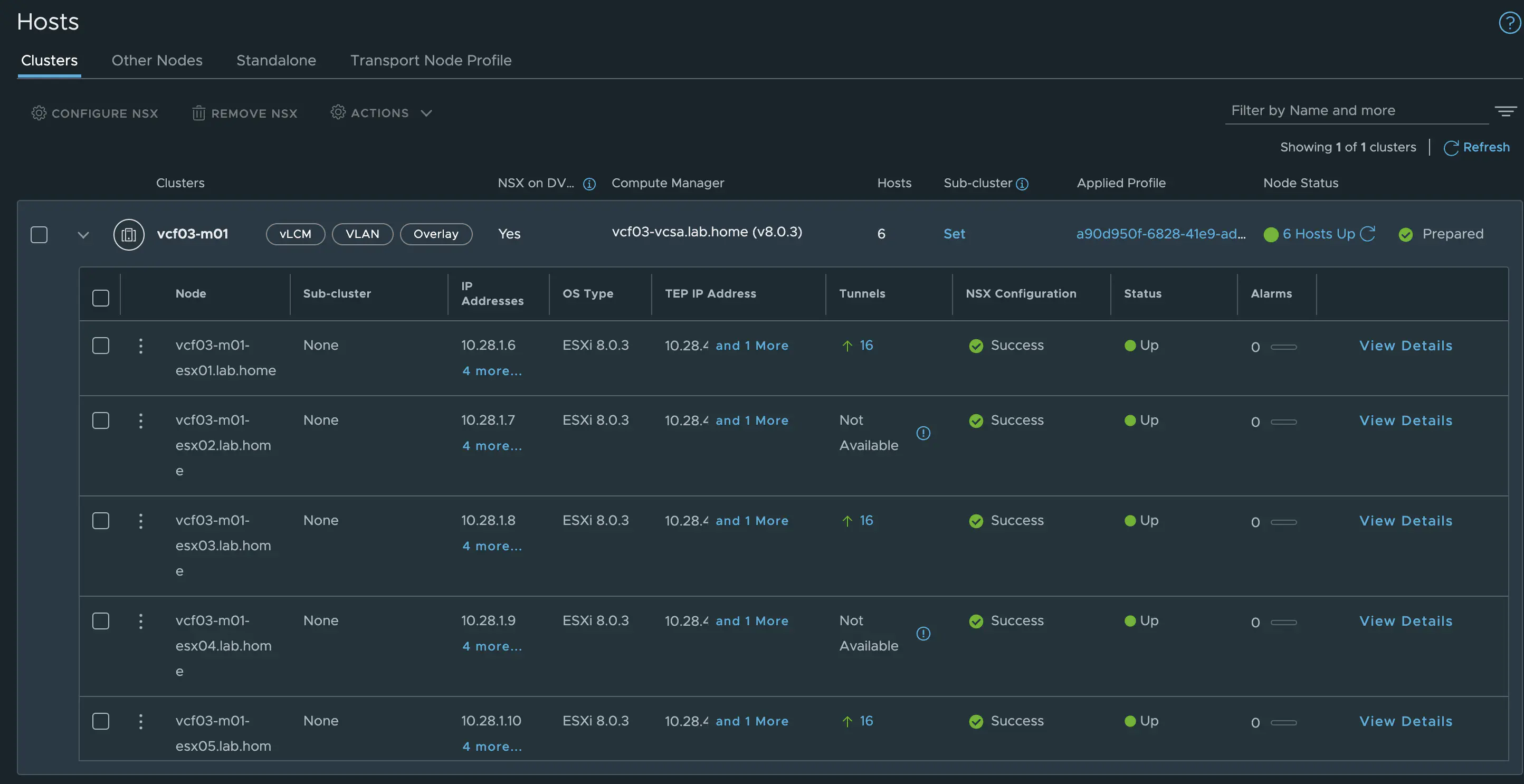

Verify TEP Network

There are several ways to check the TEP network. We can look it up in vCenter, where each host should now have two VMkernel adapters with IP addresses from our TEP network. vmk10 and vmk11 carry the TEP traffic in the dual-TEP setup. The second option is NSX itself: go to System - Fabric - Hosts and look at the cluster. In the TEP IP Addresses column, each ESXi host in the cluster should show two IP addresses.

Transport Node TEP IPs

The third option is to ping from one ESXi server to another. To do this, run vmkping as follows:
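With more than a handful of hosts, checking the TEP column by eye gets tedious. The following sketch does the same check on a list you have copied or exported yourself: every host must have exactly two TEP addresses, and both must come from the TEP subnet. The host-to-IP mapping, the CIDR and the function name `check_teps` are assumptions made for this example and not output from NSX.

```python
import ipaddress


def check_teps(host_teps: dict, cidr: str, expected_per_host: int = 2) -> dict:
    """Return host -> problem description for every host that fails the check."""
    net = ipaddress.ip_network(cidr)
    problems = {}
    for host, ips in host_teps.items():
        if len(ips) != expected_per_host:
            problems[host] = (
                f"expected {expected_per_host} TEP IPs, found {len(ips)}"
            )
            continue
        outside = [ip for ip in ips if ipaddress.ip_address(ip) not in net]
        if outside:
            problems[host] = f"outside {cidr}: {', '.join(outside)}"
    return problems


# Hypothetical inventory; only the naming pattern follows my lab.
inventory = {
    "vcf03-m01-esx01": ["10.28.4.103", "10.28.4.104"],
    "vcf03-m01-esx02": ["10.28.4.105", "10.28.4.106"],
    "vcf03-m01-esx03": ["10.28.4.107"],
}
for host, problem in check_teps(inventory, "10.28.4.0/24").items():
    print(f"{host}: {problem}")
```

A host with only one address usually means that NSX has not finished applying the profile yet, so it is worth checking again after a few minutes.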

[root@vcf03-m01-esx01:~] vmkping -I vmk10 -S vxlan 10.28.4.105 -d -s 1972

PING 10.28.4.105 (10.28.4.105): 1972 data bytes

1980 bytes from 10.28.4.105: icmp_seq=0 ttl=64 time=0.274 ms

1980 bytes from 10.28.4.105: icmp_seq=1 ttl=64 time=0.113 ms

1980 bytes from 10.28.4.105: icmp_seq=2 ttl=64 time=0.267 ms

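I use an MTU of 2000 in my lab, so the maximum ICMP payload is 1972 bytes (2000 bytes minus 20 bytes of IPv4 header and 8 bytes of ICMP header).

-d sets the don’t fragment bit, and -S vxlan sends the ping through the vxlan netstack, which is the TCP/IP stack the TEP VMkernel adapters live in.

This tests the transport path that the NSX Geneve overlay uses.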
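The payload size for -s depends on the MTU of your TEP network, so here is the arithmetic as a tiny helper. It assumes IPv4 without IP options (20 byte header) and the 8 byte ICMP echo header. The MTU values other than 2000 are common examples and not values from my lab.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
ICMP_HEADER = 8   # bytes, ICMP echo header


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP payload that fits into one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


for mtu in (1600, 2000, 9000):
    print(f"MTU {mtu}: vmkping -s {max_ping_payload(mtu)}")
```

This also explains the 1980 bytes in the ping replies: that is the 1972 byte payload plus the 8 byte ICMP header.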

Update the SDDC Manager

We need to run the VCF Import Tool again so that the SDDC Manager also knows that we now have an overlay transport zone and so that we can deploy, for example, our AVN (Application Virtual Networks) for the Aria Suite. To do this, we perform a sync and wait for it to complete.

python3 vcf_brownfield.py sync --vcenter 'vcf03-vcsa.lab.home' --sso-user 'administrator@vsphere.local' --domain-name 'mgmt'

Don’t be surprised if the sync reports a validation error. You can ignore it, and it is entirely logical that it occurs: the check expects a vCenter Server without an NSX Manager, but our domain already has one connected.

Status: VALIDATION_FAILED

Check Name: vCenter Server no NSX Manager present

Description: Check that the vCenter Server does not have an NSX Manager connected to it

Details: Detected an NSX Manager connected to the vCenter Server

Remediation: Please ensure that no NSX Manager is connected to the vCenter Server to be imported
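If you run the sync regularly, you may want to separate this expected failure from real ones. The sketch below parses text in the `Key: value` layout shown above and filters out the one check we know about. It assumes that every check starts with a `Status:` line, which I have only verified against the excerpt above. The function names and the second sample check are invented for this example and are not part of the tool.

```python
EXPECTED_FAILURES = {"vCenter Server no NSX Manager present"}


def parse_checks(text: str) -> list:
    """Split 'Key: value' lines into one dict per check."""
    checks, current = [], {}
    for line in text.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "Status" and current:  # a new check begins
            checks.append(current)
            current = {}
        current[key] = value
    if current:
        checks.append(current)
    return checks


def unexpected_failures(checks: list) -> list:
    """Return failed checks that are not on the expected list."""
    return [c for c in checks
            if c.get("Status") == "VALIDATION_FAILED"
            and c.get("Check Name") not in EXPECTED_FAILURES]


sample = """
Status: VALIDATION_FAILED
Check Name: vCenter Server no NSX Manager present
Details: Detected an NSX Manager connected to the vCenter Server

Status: VALIDATION_FAILED
Check Name: Some other check (made up for this example)
"""
for check in unexpected_failures(parse_checks(sample)):
    print("Needs attention:", check["Check Name"])
```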

Our converted management domain is now ready for use. Next, I deployed my Edge Cluster and then the Aria Suite. But that’s not what this article is about.

Conclusion

I really like the VCF Import Tool, even though it still needs some fine-tuning here and there. The sync function is quite practical and is a good way to bring changes made outside of the SDDC Manager back into the SDDC Manager. Hopefully Broadcom will also provide the sync for a regularly deployed VCF, which would make VCF considerably more flexible on the NSX side.